Best Unified API Platforms 2025: A Guide to Scaling SaaS Integrations

ATS integration is the process of connecting an Applicant Tracking System (ATS) with other applications—such as HRIS, payroll, onboarding, or assessment tools—so data flows seamlessly among them. These ATS API integrations automate tasks that otherwise require manual effort, including updating candidate statuses, transferring applicant details, and generating hiring reports.

If you just want a quick start with a specific ATS integration, you can find app-specific guides and resources in our ATS API Guides Directory.

Today, ATS integrations are transforming recruitment by simplifying and automating workflows for both internal operations and customer-facing processes. Whether you’re building a software product that needs to integrate with your customers’ ATS platforms or simply improving your internal recruiting pipeline, understanding how ATS integrations work is crucial to delivering a better hiring experience.

Hiring the right talent is fundamental to building a high-performing organization. However, recruitment is complex and involves multiple touchpoints—from sourcing and screening to final offer acceptance. By leveraging ATS integration, organizations can:

Fun Fact: According to reports, 78% of recruiters who use an ATS report improved efficiency in the hiring process.

To develop or leverage ATS integrations effectively, you need to understand key Applicant Tracking System data models and concepts. Many ATS providers maintain similar objects, though exact naming can vary:

As a unified API for ATS integration, Knit uses consolidated concepts for ATS data. Examples include:

These standardized data models ensure consistent data flow across different ATS platforms, reducing the complexities of varied naming conventions or schemas.
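To illustrate what a common data model does, here is a minimal Python sketch that maps two differently shaped candidate payloads into one normalized record. The provider keys ("ats_a", "ats_b"), their field names, and the UnifiedCandidate shape are all hypothetical and are not Knit's actual schema. Fields without a mapping are kept in custom_fields instead of being dropped, which is roughly how pass-through data is usually handled.

```python
from dataclasses import dataclass, field
from typing import Any

# Hypothetical unified model; real unified APIs define their own schemas.
@dataclass
class UnifiedCandidate:
    first_name: str
    last_name: str
    email: str
    custom_fields: dict[str, Any] = field(default_factory=dict)

# Hypothetical per-provider mappings: unified field name -> provider field name.
FIELD_MAPS = {
    "ats_a": {"first_name": "firstName", "last_name": "lastName", "email": "emailAddress"},
    "ats_b": {"first_name": "given_name", "last_name": "family_name", "email": "contact_email"},
}

def normalize(provider: str, raw: dict[str, Any]) -> UnifiedCandidate:
    """Translate one provider-specific payload into the unified model."""
    mapping = FIELD_MAPS[provider]
    mapped = {unified: raw[source] for unified, source in mapping.items()}
    # Keep anything the common model does not cover (pass-through data).
    used = set(mapping.values())
    extras = {k: v for k, v in raw.items() if k not in used}
    return UnifiedCandidate(**mapped, custom_fields=extras)

a = normalize("ats_a", {"firstName": "Ada", "lastName": "Lovelace", "emailAddress": "ada@example.com"})
b = normalize("ats_b", {"given_name": "Alan", "family_name": "Turing",
                        "contact_email": "alan@example.com", "visa_status": "n/a"})
print(a.email, b.email, b.custom_fields)
```

Downstream code can read candidate.email the same way regardless of which ATS the record came from.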

By automatically updating candidate information across portals, you can move candidates to the next stage faster. Ultimately, ATS integration leads to fewer delays, faster time-to-hire, and a lower risk of losing top talent to slow processes.

Learn more: Automate Recruitment Workflows with ATS API

Connecting an ATS to onboarding platforms (e.g., e-signature or document-verification apps) speeds up the process of getting new hires set up. Automated provisioning tasks—like granting software access or licenses—ensure that employees are productive from Day One.

Manual data entry is prone to mistakes—like a single-digit error in a salary offer that can cost both time and goodwill. ATS integrations largely eliminate these errors by automating data transfers, ensuring accuracy and minimizing disruptions to the hiring lifecycle.

Comprehensive, up-to-date recruiting data is essential for tracking trends like time-to-hire, cost-per-hire, and candidate conversion rates. By syncing ATS data with other HR and analytics platforms in real time, organizations gain clearer insights into workforce needs.
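As a small illustration, once application records are synced, a metric like time-to-hire becomes a simple calculation. The record fields (applied_at, hired_at) and the sample dates below are hypothetical. Definitions of time-to-hire also vary between organizations, so treat this as one possible definition (application date to hire date).

```python
from datetime import date
from statistics import median

# Hypothetical synced application records (hired_at is None if not hired yet).
applications = [
    {"candidate": "A", "applied_at": date(2025, 3, 1), "hired_at": date(2025, 3, 29)},
    {"candidate": "B", "applied_at": date(2025, 3, 5), "hired_at": date(2025, 4, 14)},
    {"candidate": "C", "applied_at": date(2025, 3, 10), "hired_at": None},
]

def time_to_hire_days(records: list[dict]) -> list[int]:
    """Days from application to hire, for hired candidates only."""
    return [(r["hired_at"] - r["applied_at"]).days for r in records if r["hired_at"]]

days = time_to_hire_days(applications)
print(days, median(days))  # [28, 40] 34.0
```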

Automations free recruiters to focus on strategic tasks like engaging top talent, while candidates receive faster responses and smoother interactions. Overall, ATS integration raises satisfaction for every stakeholder in the hiring pipeline.

Below are some everyday ways organizations and software platforms rely on ATS integrations to streamline hiring:

Applicant Tracking Systems vary in depth and breadth. Some are designed for enterprises, while others cater to smaller businesses. Here are a few categories commonly integrated via APIs:

Below are some common nuances and quirks of popular ATS APIs:

When deciding which ATS APIs to integrate, consider:

While integrating with an ATS can deliver enormous benefits, it’s not always straightforward:

By incorporating these best practices, you’ll set a solid foundation for smooth ATS integration:

Learn More: Whitepaper: The Unified API Approach to Building Product Integrations

┌────────────────────┐          ┌────────────────────┐
│ Recruiting SaaS    │          │ ATS Platform       │
│ - Candidate Mgmt   │          │ - Job Listings     │
│ - UI for Jobs      │          │ - Application Data │
└────────┬───────────┘          └─────────┬──────────┘
         │ 1. Fetch Jobs/Sync Apps        │
         │ 2. Display Jobs in UI          │
         ▼ 3. Push Candidate Data         │
┌─────────────────────┐         ┌─────────┴───────────┐
│ Integration Layer   │ ──────> │ ATS API (OAuth/Auth)│
│ (Unified API / Knit)│         └─────────────────────┘
└─────────────────────┘

Knit is a unified ATS API platform that allows you to connect with multiple ATS tools through a single API. Rather than managing individual authentication, communication protocols, and data transformations for each ATS, Knit centralizes all these complexities.

Learn more: Getting started with Knit

Building ATS integrations in-house (direct connectors) requires deep domain expertise, ongoing maintenance, and repeated data normalization. Here’s a quick overview of when to choose each path:

Security is paramount when handling sensitive candidate data. Mistakes can lead to data breaches, compliance issues, and reputational harm.

Knit’s Approach to Data Security

Q1. How do I know which ATS platforms to integrate first?

Start by surveying your customer base or evaluating internal usage patterns. Integrate the ATS solutions most common among your users.

Q2. Is in-house development ever better than using a unified API?

If you only need a single ATS and have a highly specialized use case, in-house could work. But for multiple connectors, a unified API is usually faster and cheaper.

Q3. Can I customize data fields that aren’t covered by the common data model?

Yes. Unified APIs (including Knit) often offer pass-through or custom field support to accommodate non-standard data requirements.

Q4. Does ATS integration require specialized developers?

While knowledge of REST/SOAP/GraphQL helps, a unified API can abstract much of that complexity, making it easier for generalist developers to implement.

Q5. What about ongoing maintenance once integrations are live?

Plan for version changes, rate-limit updates, and new data objects. A robust unified API provider handles much of this behind the scenes.
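Rate-limit handling is a good example of this kind of maintenance. A common client-side pattern is to retry HTTP 429 (Too Many Requests) responses with exponential backoff. The sketch below is generic: it assumes a hypothetical send callable that returns a (status_code, body) tuple and does not reflect any specific ATS or unified API client. In production you would also honor a Retry-After header when the provider sends one.

```python
import random
import time
from typing import Callable

def call_with_backoff(
    send: Callable[[], tuple[int, str]],
    max_retries: int = 5,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple[int, str]:
    """Retry a request while the server answers 429, doubling the wait each time."""
    attempt = 0
    while True:
        status, body = send()
        if status != 429 or attempt >= max_retries:
            return status, body
        # Exponential backoff with jitter: ~0.5s, 1s, 2s, ... plus up to 100 ms.
        delay = base_delay * (2 ** attempt) + random.uniform(0, 0.1)
        sleep(delay)
        attempt += 1

# Usage with a fake transport: two rate-limited responses, then success.
responses = iter([(429, ""), (429, ""), (200, '{"jobs": []}')])
delays: list[float] = []
status, body = call_with_backoff(lambda: next(responses), sleep=delays.append)
print(status, len(delays))  # 200 2
```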

Q6. Do ATS integrations require a partnership with each individual ATS?

Most platforms don't require a partnership to use their open APIs; however, some restrict certain use cases or APIs and require a partner ID to access them. Our team of experts can guide you through this.

ATS integration is at the core of modern recruiting. By connecting your ATS to the right tools—HRIS, onboarding, background checks—you can reduce hiring time, eliminate data errors, and create a streamlined experience for everyone involved. While building multiple in-house connectors is an option, using a unified API like Knit offers an accelerated route to connecting with major ATS platforms, saving you development time and costs.

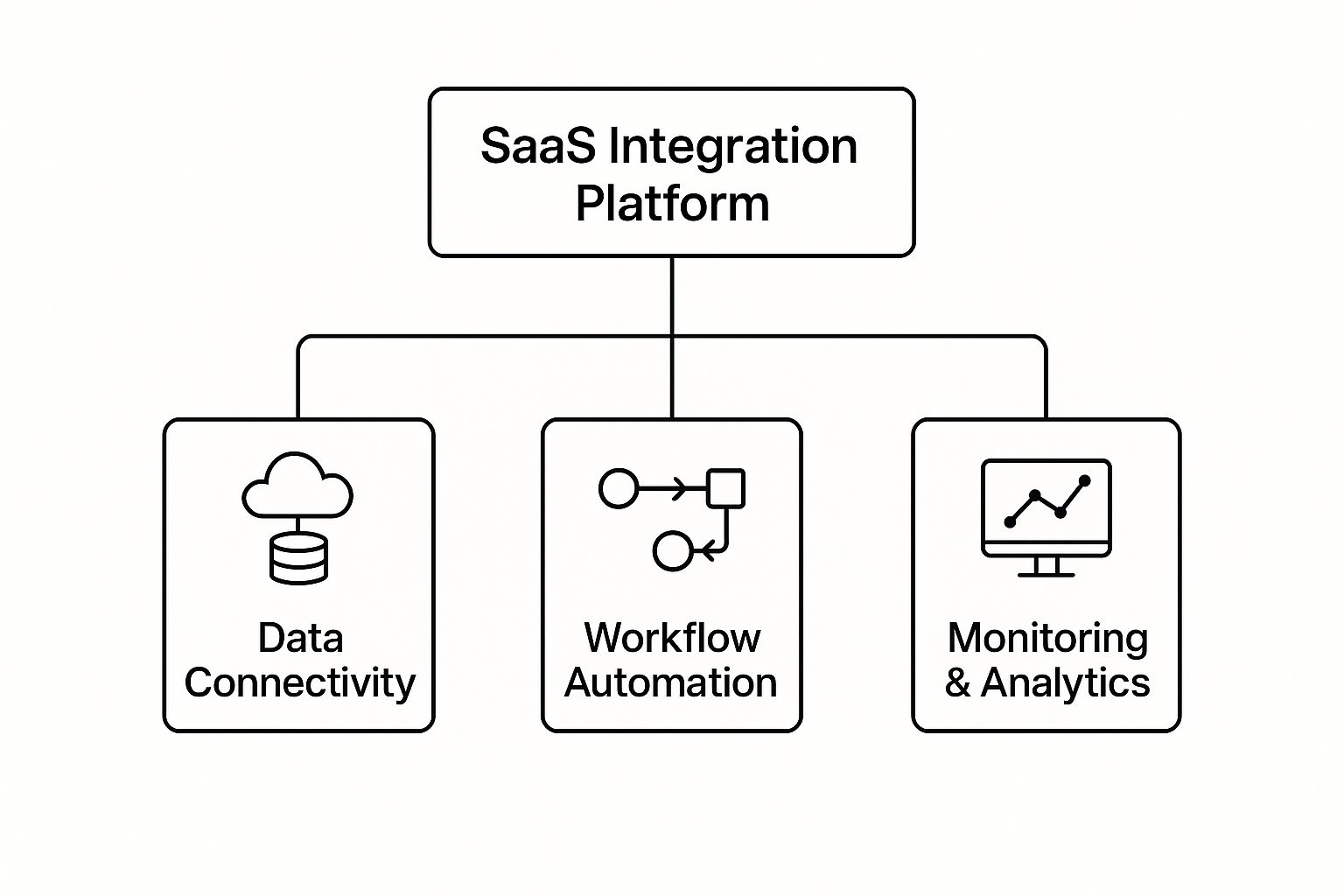

In 2025, the "build vs. buy" debate for SaaS integrations is effectively settled. With the average enterprise now managing more than 350 SaaS applications, engineering teams no longer have the bandwidth to build and maintain dozens of 1:1 connectors.

When evaluating your SaaS integration strategy, the decision to move to a unified model is driven by the State of SaaS Integration trends we see this year: a shift toward real-time data, AI-native infrastructure, and stricter "zero-storage" security requirements.

In this guide, we break down the best unified API platforms in 2025, categorized by their architectural strengths and ideal use cases.

A Unified API is an abstraction layer that aggregates multiple APIs from a single category into one standardized interface. Instead of writing custom code for Salesforce, HubSpot, and Pipedrive, your developers write code for one "Unified CRM API."
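The idea can be sketched as one interface with a per-provider adapter behind it. In this minimal Python sketch, the adapter classes, the list_contacts method name, and the inline records are hypothetical stand-ins: the raw shapes are simplified, and real adapters would call each vendor's API and handle its authentication and pagination.

```python
from typing import Protocol

class CRMAdapter(Protocol):
    def list_contacts(self) -> list[dict]: ...

# Hypothetical adapters: each hides one vendor's field names behind the same output shape.
class SalesforceAdapter:
    def list_contacts(self) -> list[dict]:
        raw = [{"FirstName": "Ada", "Email": "ada@example.com"}]  # stand-in for an API call
        return [{"name": r["FirstName"], "email": r["Email"]} for r in raw]

class HubSpotAdapter:
    def list_contacts(self) -> list[dict]:
        raw = [{"properties": {"firstname": "Alan", "email": "alan@example.com"}}]  # stand-in
        return [{"name": r["properties"]["firstname"], "email": r["properties"]["email"]}
                for r in raw]

class UnifiedCRM:
    """The single API your application codes against."""

    def __init__(self) -> None:
        self._adapters: dict[str, CRMAdapter] = {
            "salesforce": SalesforceAdapter(),
            "hubspot": HubSpotAdapter(),
        }

    def list_contacts(self, provider: str) -> list[dict]:
        # Same call and same output shape, whichever CRM the customer uses.
        return self._adapters[provider].list_contacts()

crm = UnifiedCRM()
for provider in ("salesforce", "hubspot"):
    print(provider, crm.list_contacts(provider))
```

Adding another CRM means writing one more adapter; the application code calling crm.list_contacts does not change.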

While we previously covered the 14 Best SaaS Integration Platforms, 2025 has seen a massive surge specifically toward Unified APIs for CRM, HRIS, and Accounting because they offer a higher ROI by reducing maintenance by up to 80%.

Knit has emerged as the go-to for teams that refuse to compromise on security and speed. While "First Gen" unified APIs often store a copy of your customer’s data, Knit’s zero-storage architecture ensures data only flows through: it is never stored at rest.

Merge remains a heavyweight, known for its massive library of integrations across HRIS, CRM, ATS, and more. If your goal is to "check the box" on 50+ integrations as fast as possible, Merge is a good choice.

Nango caters to the "code-first" crowd. Unlike prebuilt unified APIs, Nango gives developers the tools to build their own integrations and offers control through a code-based environment.

If your target market is the EU, Kombo offers great coverage, with deep, localized support for fragmented European platforms.

Apideck is unique because it helps you "show" your integrations as much as "build" them. It’s designed for companies that want a public-facing, plug-and-play marketplace.

If you are evaluating a specific provider within these unified categories, explore our deep-dive directories:

In 2025, your choice of Unified API is a strategic infrastructure decision.

Ready to simplify your integration roadmap?

Sign up for Knit for free or Book a demo to see how we’re powering the next generation of real-time, secure SaaS integrations.

The Model Context Protocol (MCP) is revolutionizing the way AI agents interact with external systems, services, and data. By following a client-server model, MCP bridges the gap between static AI capabilities and the dynamic digital ecosystems they must work within. In previous posts, we’ve explored the basics of how MCP operates and the types of problems it solves. Now, let’s take a deep dive into the core components that make MCP so powerful: Tools, Resources, and Prompts.

Each of these components plays a unique role in enabling intelligent, contextual, and secure AI-driven workflows. Whether you're building AI assistants, integrating intelligent agents into enterprise systems, or experimenting with multimodal interfaces, understanding these MCP elements is essential.

In the world of MCP, Tools are action enablers. Think of them as verbs that allow an AI model to move beyond generating static responses. Tools empower models to call external services, interact with APIs, trigger business logic, or even manipulate real-time data. These tools are not part of the model itself but are defined and managed by an MCP server, making the model more dynamic and adaptable.

Tools help AI transcend its traditional boundaries by integrating with real-world systems and applications, such as messaging platforms, databases, calendars, web services, or cloud infrastructure.

An MCP server advertises a set of available tools, each described in a structured format. Tool metadata typically includes:

When the AI model decides that a tool should be invoked, the MCP client sends a tools/call request containing the tool name and the required arguments. The MCP server then executes the tool’s logic and returns either the output or an error message.
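In the MCP specification, tools are listed through a tools/list request and invoked through tools/call, both as JSON-RPC 2.0 messages. The Python sketch below builds those messages as plain dictionaries to show their shape: a tool definition with name, description, and a JSON Schema inputSchema; the call request; and a result whose content is a list of typed items plus an isError flag. The get_weather tool and its arguments are hypothetical, and a real server would normally use an MCP SDK rather than hand-built dictionaries.

```python
import json

# A tool definition as it might appear in a tools/list result.
# "get_weather" and its parameters are hypothetical.
weather_tool = {
    "name": "get_weather",
    "description": "Get current weather for a city",
    "inputSchema": {  # JSON Schema describing the expected arguments
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}

# The JSON-RPC 2.0 request a client sends once the model chooses this tool.
call_request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "get_weather", "arguments": {"city": "Berlin"}},
}

def handle_call(request: dict) -> dict:
    """Toy server-side handler: runs the tool and wraps its output as an MCP result."""
    args = request["params"]["arguments"]
    text = f"Weather in {args['city']}: 18 degrees C, clear"  # stand-in for a real lookup
    return {
        "jsonrpc": "2.0",
        "id": request["id"],  # a response echoes the id of its request
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    }

print(json.dumps(handle_call(call_request), indent=2))
```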

Tools are central to bridging model intelligence with real-world action. They allow AI to:

To ensure your tools are robust, safe, and model-friendly:

Security Considerations

Ensuring tools are secure is crucial for preventing misuse and maintaining trust in AI-assisted environments.
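One concrete safeguard is to validate arguments against the tool's declared schema before running any logic, and to report failures as a tool error result rather than raising. The minimal sketch below hand-checks only required keys, unexpected keys, and primitive types so that it runs on the standard library alone. The schema, the table allowlist, and the run_query tool are hypothetical; a production server would typically use a full JSON Schema validator.

```python
from typing import Any

# Hypothetical schema for a read-only query tool.
SCHEMA = {
    "type": "object",
    "properties": {"table": {"type": "string"}, "limit": {"type": "integer"}},
    "required": ["table"],
}
ALLOWED_TABLES = {"orders", "customers"}  # hypothetical allowlist
TYPE_CHECKS = {"string": str, "integer": int}

def validate(args: dict[str, Any], schema: dict) -> list[str]:
    """Return a list of problems; an empty list means the arguments look valid."""
    errors = [f"missing required field '{k}'" for k in schema["required"] if k not in args]
    for key, value in args.items():
        spec = schema["properties"].get(key)
        if spec is None:
            errors.append(f"unexpected field '{key}'")
        # bool is a subclass of int in Python, so reject it explicitly.
        elif not isinstance(value, TYPE_CHECKS[spec["type"]]) or isinstance(value, bool):
            errors.append(f"field '{key}' must be of type {spec['type']}")
    return errors

def run_query(args: dict[str, Any]) -> dict:
    errors = validate(args, SCHEMA)
    if not errors and args["table"] not in ALLOWED_TABLES:
        errors.append(f"table '{args['table']}' is not allowed")
    if errors:
        # Report problems as an error result instead of executing anything.
        return {"content": [{"type": "text", "text": "; ".join(errors)}], "isError": True}
    limit = args.get("limit", 10)
    text = f"would read up to {limit} rows from {args['table']}"
    return {"content": [{"type": "text", "text": text}], "isError": False}

print(run_query({"table": "orders", "limit": 5})["isError"])       # False
print(run_query({"table": "users; DROP TABLE users"})["isError"])  # True
```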

Testing Tools: Ensuring Reliability and Resilience

Effective testing is key to ensuring tools function as expected and don’t introduce vulnerabilities or instability into the MCP environment.

If Tools are the verbs of the Model Context Protocol (MCP), then Resources are the nouns. They represent structured data elements exposed to the AI system, enabling it to understand and reason about its current environment.

Resources provide critical context, whether it’s a configuration file, a user profile, or a live sensor reading. They bridge the gap between static model knowledge and dynamic, real-time inputs from the outside world. By accessing these resources, the AI gains situational awareness, enabling more relevant, adaptive, and informed responses.

Unlike Tools, which the AI uses to perform actions, Resources are passively made available to the AI by the host environment. These can be queried or referenced as needed, forming the informational backbone of many AI-powered workflows.

Resources are usually identified by URIs (Uniform Resource Identifiers) and can contain either text or binary content. This flexible format ensures that a wide variety of real-world data types can be seamlessly integrated into AI workflows.
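In the MCP specification, a resources/read result returns a list of contents, each carrying the resource uri, an optional mimeType, and either a text field or a base64-encoded blob field. The sketch below builds both variants with the standard library; the URIs and file contents are hypothetical.

```python
import base64

def text_content(uri: str, text: str, mime_type: str = "text/plain") -> dict:
    """Text content is sent as a plain string."""
    return {"uri": uri, "mimeType": mime_type, "text": text}

def blob_content(uri: str, data: bytes, mime_type: str) -> dict:
    """Binary content is base64-encoded into the 'blob' field."""
    return {"uri": uri, "mimeType": mime_type, "blob": base64.b64encode(data).decode("ascii")}

# Hypothetical contents a server might return from resources/read.
read_result = {
    "contents": [
        text_content("file:///app/config.json", '{"log_level": "debug"}', "application/json"),
        blob_content("file:///reports/q3.pdf", b"%PDF-1.7 ...", "application/pdf"),
    ]
}

# A client decodes the blob back to the original bytes.
pdf_bytes = base64.b64decode(read_result["contents"][1]["blob"])
print(pdf_bytes[:8])  # b'%PDF-1.7'
```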

Text resources are UTF-8 encoded and well-suited for structured or human-readable data. Common examples include:

Binary resources are base64-encoded to ensure safe and consistent handling of non-textual content. These are used for:

Below are typical resource identifiers that might be encountered in an MCP-integrated environment:

Resources are passively exposed to the AI by the host application or server, based on the current user context, application state, or interaction flow. The AI does not request them actively; instead, they are made available at the right moment for reference.

For example, while viewing an email, the body of the message might be made available as a resource (e.g., mail://current/message). The AI can then summarize it, identify action items, or generate a relevant response, all without needing the user to paste the content into a prompt.
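A host that exposes context this way needs a small resolver that maps URIs to the data currently in view and refuses everything else. The sketch below is hypothetical: the in-memory message, the EXPOSED_RESOURCES allowlist, and resolve_resource are illustrative names, and an exact-match allowlist is just one simple way to keep a model from reaching resources the host never intended to share.

```python
# Hypothetical host state: the email the user is currently viewing.
current_message = {"subject": "Q3 budget", "body": "Please approve the Q3 budget by Friday."}

# Allowlist: exact URIs the host chooses to expose, mapped to loader functions.
EXPOSED_RESOURCES = {
    "mail://current/message": lambda: current_message["body"],
}

def resolve_resource(uri: str) -> dict:
    """Return resource contents only for exact, allowlisted URIs."""
    loader = EXPOSED_RESOURCES.get(uri)
    if loader is None:
        # Anything not explicitly exposed (including path tricks like "..") is refused.
        raise PermissionError(f"resource not exposed: {uri}")
    return {"uri": uri, "mimeType": "text/plain", "text": loader()}

print(resolve_resource("mail://current/message")["text"])
```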

This separation of data (Resources) and actions (Tools) ensures clean, modular interaction patterns and enables AI systems to operate in a more secure, predictable, and efficient manner.

Prompts are predefined templates, instructions, or interface-integrated commands that guide how users or the AI system interact with tools and resources. They serve as structured input mechanisms that encode best practices, common workflows, and reusable queries.

In essence, prompts act as a communication layer between the user, the AI, and the underlying system capabilities. They eliminate ambiguity, ensure consistency, and allow for efficient and intuitive task execution. Whether embedded in a user interface or used internally by the AI, prompts are the scaffolding that organizes how AI functionality is activated in context.

Prompts can take the form of:

By formalizing interaction patterns, prompts help translate user intent into structured operations, unlocking the AI's potential in a way that is transparent, repeatable, and accessible.

Here are a few illustrative examples of prompts used in real-world AI applications:

These prompts can be either static templates with editable fields or dynamically generated based on user activity, current context, or exposed resources.

Just like tools and resources, prompts are advertised by the MCP (Model Context Protocol) server. They are made available to both the user interface and the AI agent, depending on the use case.

Prompts often contain placeholders, such as {resource_uri}, {date_range}, or {user_intent}, which are filled dynamically at runtime. These values can be derived from user input, current application context, or metadata from exposed resources.
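In the MCP specification, a prompt is listed with a name, a description, and a list of arguments, and a prompts/get request returns the filled-in messages. The sketch below shows one way a server could fill placeholders and reject missing required arguments. The sales_summary prompt reuses the template from the dashboard example in this article; using Python's str.format for substitution is an illustrative assumption.

```python
# Hypothetical prompt definition, in the shape returned by prompts/list.
SALES_PROMPT = {
    "name": "sales_summary",
    "description": "Summarize sales for a region and date range",
    "arguments": [
        {"name": "region", "required": True},
        {"name": "start_date", "required": True},
        {"name": "end_date", "required": True},
    ],
}
TEMPLATE = "Generate a sales summary for {region} between {start_date} and {end_date}."

def get_prompt(arguments: dict[str, str]) -> dict:
    """Fill the template and return messages, roughly as a prompts/get result."""
    missing = [a["name"] for a in SALES_PROMPT["arguments"]
               if a["required"] and a["name"] not in arguments]
    if missing:
        raise ValueError(f"missing prompt arguments: {missing}")
    text = TEMPLATE.format(**arguments)
    return {"messages": [{"role": "user", "content": {"type": "text", "text": text}}]}

result = get_prompt({"region": "EMEA", "start_date": "2025-01-01", "end_date": "2025-03-31"})
print(result["messages"][0]["content"]["text"])
# Generate a sales summary for EMEA between 2025-01-01 and 2025-03-31.
```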

Prompts offer several key advantages in making AI interactions more useful, scalable, and reliable:

When designing and implementing prompts, consider the following best practices to ensure robustness and usability:

Prompts, like any user-facing or dynamic interface element, must be implemented with care to ensure secure and responsible usage:

Imagine a business analytics dashboard integrated with MCP. A prompt such as:

“Generate a sales summary for {region} between {start_date} and {end_date}.”

…can be presented to the user in the UI, pre-filled with defaults or values pulled from recent activity. Once the user selects the inputs, the AI fetches relevant data (via resources like db://sales/records) and invokes a tool (e.g., a report generator) to compile a summary. The prompt acts as the orchestration layer tying these components together in a seamless interaction.
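The same flow can be sketched end to end. In this hypothetical Python example, an in-memory dictionary stands in for the db://sales/records resource and a plain function stands in for the report-generator tool. In a real deployment the client would issue resources/read and tools/call requests to an MCP server instead.

```python
# Hypothetical stand-in for the db://sales/records resource.
RESOURCES = {
    "db://sales/records": [
        {"region": "EMEA", "date": "2025-01-15", "amount": 1200.0},
        {"region": "EMEA", "date": "2025-02-03", "amount": 800.0},
        {"region": "APAC", "date": "2025-01-20", "amount": 500.0},
    ]
}

def read_resource(uri: str) -> list[dict]:
    return RESOURCES[uri]

def report_generator(records: list[dict], region: str) -> str:
    """Hypothetical 'report generator' tool."""
    total = sum(r["amount"] for r in records)
    return f"{region}: {len(records)} sales totalling {total:.2f}"

def run_sales_summary(region: str, start_date: str, end_date: str) -> str:
    # 1. Prompt: the filled-in template supplies region and date range.
    # 2. Resource: fetch the records and keep the ones in scope
    #    (ISO date strings compare correctly as plain strings).
    records = [r for r in read_resource("db://sales/records")
               if r["region"] == region and start_date <= r["date"] <= end_date]
    # 3. Tool: hand the filtered data to the report generator.
    return report_generator(records, region)

print(run_sales_summary("EMEA", "2025-01-01", "2025-03-31"))
# EMEA: 2 sales totalling 2000.00
```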

While Tools, Resources, and Prompts are each valuable as standalone constructs, their true potential emerges when they operate in harmony. When thoughtfully integrated, these components form a cohesive, dynamic system that empowers AI agents to perform meaningful tasks, adapt to user intent, and deliver high-value outcomes with precision and context-awareness.

This trio transforms AI from a passive respondent into a proactive collaborator, one that not only understands what needs to be done, but knows how, when, and with what data to do it.

To understand this synergy, let’s walk through a typical workflow where an AI assistant is helping a business user analyze sales trends:

This multi-layered interaction model allows the AI to function with clarity and control:

The result is an AI system that is:

This framework scales elegantly across domains, enabling complex workflows in enterprise environments, developer platforms, customer service, education, healthcare, and beyond.

The Model Context Protocol (MCP) is not just a communication mechanism—it is an architectural philosophy for integrating intelligence across software ecosystems. By rigorously defining and interconnecting Tools, Resources, and Prompts, MCP lays the groundwork for AI systems that are:

See how these components are used in practice:

1. How do Tools and Resources complement each other in MCP?

Tools perform actions (e.g., querying a database), while Resources provide the data context (e.g., the query result). Together they enable workflows that are both action-driven and data-grounded.

2. What’s the difference between invoking a Tool and referencing a Resource?

Invoking a Tool is an active request (using tools/call), while referencing a Resource is passive: the AI can access it once it is made available, without explicitly requesting execution.

3. Why are JSON Schemas critical for Tool inputs?

Schemas prevent misuse by enforcing strict formats, ensuring the AI provides valid parameters, and reducing the risk of injection or malformed requests.

4. How can binary Resources (like images or PDFs) be used effectively?

Binary Resources, encoded in base64, can be referenced for tasks like summarizing a report, extracting data from a PDF, or analyzing image inputs.

5. What safeguards are needed when exposing Resources to AI agents?

Developers should sanitize URIs, apply access controls, and minimize exposure of sensitive binary data to prevent leakage or unauthorized access.

6. How do Prompts reduce ambiguity in AI interactions?

Prompts provide structured templates (with placeholders like {resource_uri}), guiding the AI’s reasoning and ensuring consistent execution across workflows.

7. Can Prompts dynamically adapt based on available Resources?

Yes. Prompts can auto-populate fields with context (e.g., a current email body or log file), making AI responses more relevant and personalized.

8. What testing strategies apply specifically to Tools?

Alongside functional testing, Tools require integration tests with MCP servers and backend systems to validate latency, schema handling, and error resilience.

9. How do Tools, Resources, and Prompts work together in a layered workflow?

A Prompt structures intent, a Tool executes the operation, and a Resource provides or captures the data—creating a modular interaction loop.

10. What’s an example of misuse if these elements aren’t implemented carefully?

Without input validation, a Tool could execute a harmful command; without URI checks, a Resource might expose sensitive files; without guardrails, Prompts could be manipulated to trigger unsafe operations.

The Model Context Protocol (MCP) started with a simple yet powerful goal: to create an interface standard that lets AI agents invoke tools and external APIs in a consistent manner. But the true potential of MCP goes far beyond just calling a calculator or querying a database. It serves as a critical foundation for orchestrating complex, modular, and intelligent agent systems where multiple AI agents can collaborate, delegate, chain operations, and operate with contextual awareness across diverse tasks.

Suggested reading: Scaling AI Capabilities: Using Multiple MCP Servers with One Agent

In this blog, we dive deep into the advanced integration patterns that MCP unlocks for multi-agent systems. From structured handoffs between agents to dynamic chaining and even complex agent graph topologies, MCP serves as the "glue" that enables these interactions to be seamless, interoperable, and scalable.

At its core, an advanced integration in MCP refers to designing intelligent workflows that go beyond single agent-to-server interactions. Instead, these integrations involve:

Multi-agent orchestration is the process of coordinating multiple intelligent agents to collectively perform tasks that exceed the capability or specialization of a single agent. These agents might each possess specific skills: some may draft content, others may analyze legal compliance, and another might optimize pricing models.

MCP enables such orchestration by standardizing the interfaces between agents and exposing each agent's functionality as if it were a callable tool. This plug-and-play architecture leads to highly modular and reusable agent systems. Here are a few advanced integration patterns where MCP plays a crucial role:

Think of a general-purpose AI agent acting as a project manager. Rather than doing everything itself, it delegates sub-tasks to more specialized agents based on domain expertise—mirroring how human teams operate.

For instance:

This pattern mirrors the division of labor in organizations and is crucial for scalability and maintainability.

MCP allows the parent agent to invoke any sub-agent using a standardized interface. When the ContentManagerAgent calls generate_script(topic), it doesn’t need to know how the script is written; it just trusts the ScriptWriterAgent to handle it. MCP acts as the “middleware,” allowing:

Each sub-agent effectively behaves like a callable microservice.
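A minimal sketch of this delegation, assuming each sub-agent is registered under a tool name and reached through one uniform call_tool(name, arguments) function. The agent functions, the generate_voiceover tool, and the in-process TOOLS registry are hypothetical stand-ins for MCP servers; in practice each call would be a tools/call request over an MCP transport.

```python
from typing import Callable

# Hypothetical sub-agents; each exposes one capability with a dict-in, dict-out contract.
def script_writer_agent(args: dict) -> dict:
    return {"script": f"Script about {args['topic']}"}

def voiceover_agent(args: dict) -> dict:
    # Derive a file name from the start of the script (stand-in for real audio output).
    stem = args["script"][:20].strip().replace(" ", "_")
    return {"audio_file": f"{stem}.mp3"}

# Registry standing in for MCP tool discovery.
TOOLS: dict[str, Callable[[dict], dict]] = {
    "generate_script": script_writer_agent,
    "generate_voiceover": voiceover_agent,
}

def call_tool(name: str, arguments: dict) -> dict:
    """Uniform invocation: the caller knows the tool name and inputs, not the implementation."""
    return TOOLS[name](arguments)

class ContentManagerAgent:
    def run(self, topic: str) -> dict:
        script = call_tool("generate_script", {"topic": topic})["script"]
        audio = call_tool("generate_voiceover", {"script": script})["audio_file"]
        return {"script": script, "audio": audio}

print(ContentManagerAgent().run("fitness apps"))
```

Because the parent only depends on tool names and input/output shapes, a sub-agent can be swapped for a different implementation without touching ContentManagerAgent.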

Example Flow:

ProjectManagerAgent receives the task: "Create a digital campaign for a new fitness app."

Steps:

Each agent is called via MCP and returns structured outputs to the primary agent, which then integrates them.

In a pipeline pattern, agents are arranged in a linear sequence, each one performing a task, transforming the data, and passing it on to the next agent. Think of this like an AI-powered assembly line.

Let’s say you’re building a content automation pipeline for a SaaS company.

Pipeline:

Each stage is executed sequentially or conditionally, with the MCP orchestrator managing the flow.
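The assembly-line idea reduces to an ordered list of stages that share one input/output contract. In this hypothetical sketch, each stage is a function that takes and returns a dict, and a simple orchestrator runs the stages in order, skipping any stage whose condition is not met. The stage names (research, draft, seo_optimize, publish) are illustrative assumptions.

```python
from typing import Callable, Optional

Stage = Callable[[dict], dict]

# Hypothetical pipeline stages; each reads the shared payload and returns an extended copy.
def research(p: dict) -> dict:
    return {**p, "notes": f"key facts about {p['topic']}"}

def draft(p: dict) -> dict:
    return {**p, "draft": f"Article on {p['topic']} using {p['notes']}"}

def seo_optimize(p: dict) -> dict:
    return {**p, "draft": p["draft"] + " [SEO keywords added]"}

def publish(p: dict) -> dict:
    return {**p, "published": True}

# (stage, optional condition) pairs: a stage runs only if its condition passes.
PIPELINE: list[tuple[Stage, Optional[Callable[[dict], bool]]]] = [
    (research, None),
    (draft, None),
    (seo_optimize, lambda p: p.get("seo", False)),
    (publish, None),
]

def run_pipeline(payload: dict) -> dict:
    for stage, condition in PIPELINE:
        if condition is None or condition(payload):
            payload = stage(payload)
    return payload

print(run_pipeline({"topic": "unified APIs", "seo": True})["draft"])
```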

MCP ensures each stage adheres to a common interface:

Some problems require non-linear workflows—where agents form a graph instead of a simple chain. In these topologies:

Agents:

Workflow:
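A graph-shaped workflow can be sketched with branches that fan out concurrently and a node that fans their results back in. In this hypothetical example, asyncio.gather runs three analysis agents in parallel and an aggregator merges their outputs; the agent names are made up, and asyncio.sleep stands in for real model or tool latency.

```python
import asyncio

# Hypothetical agents; asyncio.sleep stands in for model or API latency.
async def market_agent(query: str) -> dict:
    await asyncio.sleep(0.01)
    return {"market": f"market size estimate for {query}"}

async def competitor_agent(query: str) -> dict:
    await asyncio.sleep(0.01)
    return {"competitors": f"top competitors in {query}"}

async def pricing_agent(query: str) -> dict:
    await asyncio.sleep(0.01)
    return {"pricing": f"pricing benchmarks for {query}"}

async def aggregator_agent(parts: list[dict]) -> dict:
    merged: dict = {}
    for part in parts:
        merged.update(part)
    return merged

async def run_graph(query: str) -> dict:
    # Fan out: the three independent branches run concurrently.
    branches = await asyncio.gather(
        market_agent(query), competitor_agent(query), pricing_agent(query)
    )
    # Fan in: the downstream node depends on all three outputs.
    return await aggregator_agent(list(branches))

report = asyncio.run(run_graph("fitness apps"))
print(sorted(report))  # ['competitors', 'market', 'pricing']
```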

Let’s walk through a real-world scenario combining handoffs, chaining, and agent graphs:

Step-by-Step:

At each stage, agents communicate using MCP, and each tool call is standardized, logged, and independently maintainable.

Read also: Why MCP Matters: Unlocking Interoperable and Context-Aware AI Agents

Multi-agent systems, especially in regulated domains like healthcare, finance, and legal tech, need granular control and transparency. Here’s how MCP helps:

In a world where AI systems are becoming modular, distributed, and task-specialized, MCP plays an increasingly crucial role. It abstracts complexity, ensures consistency, and enables the kind of agent-to-agent collaboration that will define the next era of AI workflows.

Whether you're building content pipelines, compliance engines, scientific research chains, or human-in-the-loop decision systems, MCP helps you scale reliably and flexibly.

By making tools and agents callable, composable, and context-aware, MCP is more than a protocol: it is an enabler of next-generation AI systems.

1. Is MCP an orchestration engine that can manage agent workflows directly?

No. MCP is not an orchestration engine in itself; it’s a protocol layer. Think of it as the execution and interoperability backbone that allows agents to communicate in a standardized way. The orchestration logic (i.e., deciding what to do next) must come from a planner, rule engine, or LLM-based controller such as LangGraph, AutoGen, or a custom framework. MCP ensures that, once a decision is made, the actual tool or agent execution is reliable, traceable, and context-aware.

2. What’s the advantage of using MCP over direct API calls or hardcoded integrations between agents?

Direct integrations are brittle and hard to scale. Without MCP, you’d need to manage multiple formats, inconsistent error handling, and tightly coupled workflows. MCP introduces a uniform interface where every agent or tool behaves like a plug-and-play module. This decouples planning from execution, enables composability, and dramatically improves observability, maintainability, and reuse across workflows.

3. How does MCP enable dynamic handoffs between agents in real-time workflows?

MCP supports context-passing, metadata tagging, and invocation semantics that allow an agent to call another agent as if it were just another tool. This means Agent A can initiate a task, receive partial or complete results from Agent B, and then proceed or escalate based on the outcome. These handoffs can be tracked with workflow IDs passed along as context, and can include task-specific context like user profiles, conversation history, or regulatory constraints.

4. Can MCP support workflows with branching, parallelism, or dynamic graph structures?

Yes. While MCP doesn’t orchestrate the branching logic itself, it supports complex topologies through its flexible invocation model. An orchestrator can define a graph where multiple agents are invoked in parallel, with results aggregated or routed dynamically based on responses. MCP’s standardized input/output formats and session management features make such branching reliable and traceable.

5. How is state or context managed when chaining multiple agents using MCP?

Context management is critical in multi-agent systems, and MCP allows you to pass structured context as metadata or part of the input payload. This might include prior tool outputs, session IDs, user-specific data, or policy flags. However, long-term or persistent state must be managed externally, either by the orchestrator or a dedicated memory store. MCP ensures the transport and enforcement of context but doesn’t maintain state across sessions by itself.

6. How does MCP handle errors and partial failures during multi-agent orchestration?

MCP reports failures in a structured form: protocol-level errors use JSON-RPC error objects with an error code and message, and tool failures can be returned as results flagged with isError. When a tool or agent fails, this structured response allows the orchestrator to take intelligent actions, such as retrying the same agent, switching to a fallback agent, or alerting a human operator. Because every call goes through the same interface and can be traced and logged, debugging failures across agent chains becomes much more manageable.
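On the orchestrator side, the retry, fallback, and human-escalation sequence might look like the sketch below. It assumes each agent call returns an MCP-style tool result dict with a content list and an isError flag; the translator agents and the escalate hook are hypothetical.

```python
from typing import Callable, Optional

Result = dict  # MCP-style tool result: {"content": [...], "isError": bool}
Agent = Callable[[dict], Result]

def orchestrate(args: dict, primary: Agent, fallback: Agent,
                escalate: Callable[[str], None], retries: int = 2) -> Optional[Result]:
    """Retry the primary agent, then try the fallback, then escalate to a human."""
    last_error = ""
    for _ in range(retries):
        result = primary(args)
        if not result.get("isError"):
            return result
        last_error = result["content"][0]["text"]
    result = fallback(args)
    if not result.get("isError"):
        return result
    escalate(f"both agents failed; last primary error: {last_error}")
    return None

# Hypothetical agents for the demo.
def flaky_translator(args: dict) -> Result:
    return {"content": [{"type": "text", "text": "upstream timeout"}], "isError": True}

def backup_translator(args: dict) -> Result:
    return {"content": [{"type": "text", "text": f"[fr] {args['text']}"}], "isError": False}

alerts: list[str] = []
out = orchestrate({"text": "hello"}, flaky_translator, backup_translator, alerts.append)
print(out["content"][0]["text"], alerts)  # [fr] hello []
```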

7. Is it possible to audit, trace, or monitor agent-to-agent calls in an MCP-based system?

Yes. Observability is one of the main benefits of routing agent calls through MCP. Because every invocation passes through the same standardized interface, each call, successful or not, can be logged with timestamps, input/output payloads, agent identifiers, and workflow context. This is critical for debugging, compliance (e.g., in finance or healthcare), and optimizing workflows. Some MCP implementations even support integration with observability stacks like OpenTelemetry or custom logging dashboards.

8. Can MCP be used in human-in-the-loop workflows where humans co-exist with agents?

Yes. MCP can integrate tools that involve human decision-makers as callable components. For example, a review_draft(agent_output) tool might route the result to a human for validation before proceeding. Because humans can be modeled as tools in the MCP schema (with asynchronous responses), the handoff and reintegration of their inputs remain seamless in the broader agent graph.

9. Are there best practices for designing agents to be MCP-compatible in orchestrated systems?

Yes. Ideally, agents should be stateless (or accept externalized state), follow clearly defined input/output schemas (typically JSON), return consistent error codes, and expose a set of callable functions with well-defined responsibilities. Keeping agent functions atomic and predictable allows them to be chained, reused, and composed into larger workflows more effectively. Versioning tool specs and documenting side effects is also crucial for long-term maintainability.

In today's AI-driven world, AI agents have become transformative tools, capable of executing tasks with unparalleled speed, precision, and adaptability. From automating mundane processes to providing hyper-personalized customer experiences, these agents are reshaping the way businesses function and how users engage with technology. However, their true potential lies beyond standalone functionalities—they thrive when integrated seamlessly with diverse systems, data sources, and applications.

This integration is not merely about connectivity; it’s about enabling AI agents to access, process, and act on real-time information across complex environments. Whether pulling data from enterprise CRMs, analyzing unstructured documents, or triggering workflows in third-party platforms, integration equips AI agents to become more context-aware, action-oriented, and capable of delivering measurable value.

This article explores how seamless integrations unlock the full potential of AI agents, the best practices to ensure success, and the challenges that organizations must overcome to achieve impactful integration.

The rise of Artificial Intelligence (AI) agents marks a transformative shift in how we interact with technology. AI agents are intelligent software entities capable of performing tasks autonomously, mimicking human behavior, and adapting to new scenarios without explicit human intervention. From chatbots resolving customer queries to sophisticated virtual assistants managing complex workflows, these agents are becoming integral across industries.

The rise of AI agents has been attributed to factors such as:

AI agents are more than just software programs; they are intelligent systems capable of executing tasks autonomously by mimicking human-like reasoning, learning, and adaptability. Their functionality is built on two foundational pillars:

For optimal performance, AI agents require deep contextual understanding. This extends beyond familiarity with a product or service to include insights into customer pain points, historical interactions, and updates in knowledge. To equip AI agents with this contextual knowledge, it is important to give them access to a centralized knowledge base or data lake that consolidates information otherwise scattered across multiple systems, applications, and formats. This ensures they are working with the most relevant and up-to-date information. Furthermore, they need access to all new information, such as product updates, evolving customer requirements, or changes in business processes, so that their outputs remain relevant and accurate.

For instance, an AI agent assisting a sales team must have access to CRM data, historical conversations, pricing details, and product catalogs to provide actionable insights during a customer interaction.

AI agents’ value lies not only in their ability to comprehend but also to act. For instance, AI agents can perform activities such as updating CRM records after a sales call, generating invoices, or creating tasks in project management tools based on user input or triggers. Similarly, AI agents can initiate complex workflows, such as escalating support tickets, scheduling appointments, or launching marketing campaigns. However, this requires seamless connectivity across different applications to facilitate action.

For example, an AI agent managing customer support could resolve queries by pulling answers from a knowledge base and, if necessary, escalating unresolved issues to a human representative with full context.

The capabilities of AI agents are undeniably remarkable. However, their true potential can only be realized when they seamlessly access contextual knowledge and take informed actions across a wide array of applications. This is where integrations play a pivotal role, serving as the key to bridging gaps and unlocking the full power of AI agents.

The effectiveness of an AI agent is directly tied to its ability to access and utilize data stored across diverse platforms. This is where integrations shine, acting as conduits that connect the AI agent to the wealth of information scattered across different systems. These data sources fall into several broad categories, each contributing uniquely to the agent's capabilities:

Platforms like databases, Customer Relationship Management (CRM) systems (e.g., Salesforce, HubSpot), and Enterprise Resource Planning (ERP) tools house structured data—clean, organized, and easily queryable. For example, CRM integrations allow AI agents to retrieve customer contact details, sales pipelines, and interaction histories, which they can use to personalize customer interactions or automate follow-ups.

The majority of organizational knowledge exists in unstructured formats, such as PDFs, Word documents, emails, and collaborative platforms like Notion or Confluence. Cloud storage systems like Google Drive and Dropbox add another layer of complexity, storing files without predefined schemas. Integrating with these systems allows AI agents to extract key insights from meeting notes, onboarding manuals, or research reports. For instance, an AI assistant integrated with Google Drive could retrieve and summarize a company’s annual performance review stored in a PDF document.

Real-time data streams from IoT devices, analytics tools, or social media platforms offer actionable insights that are constantly updated. AI agents integrated with streaming data sources can monitor metrics, such as energy usage from IoT sensors or engagement rates from Twitter analytics, and make recommendations or trigger actions based on live updates.

APIs from third-party services like payment gateways (Stripe, PayPal), logistics platforms (DHL, FedEx), and HR systems (BambooHR, Workday) expand the agent's ability to act across verticals. For example, an AI agent integrated with a payment gateway could automatically reconcile invoices, track payments, and even issue alerts for overdue accounts.

To process this vast array of data, AI agents rely on data ingestion—the process of collecting, aggregating, and transforming raw data into a usable format. Data ingestion pipelines ensure that the agent has access to a broad and rich understanding of the information landscape, enhancing its ability to make accurate decisions.
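To make the collect, aggregate, and transform steps concrete, here is a minimal ingestion sketch. The `Document` shape, the source field names (`name`, `company`, `stage`, `file_id`, `title`, `content`), and the fixed word-count chunking are all hypothetical choices for illustration; production pipelines typically add deduplication, incremental syncs, and token-aware chunking.

```python
from dataclasses import dataclass, field
from typing import Iterable


@dataclass
class Document:
    """Hypothetical common format that every source is normalized into."""
    source: str
    doc_id: str
    text: str
    metadata: dict = field(default_factory=dict)


def normalize_crm_contact(record: dict) -> Document:
    # Structured CRM row -> a short text description the agent can read
    text = f"{record['name']} ({record['company']}), stage: {record['stage']}"
    return Document("crm", str(record["id"]), text, {"stage": record["stage"]})


def normalize_drive_file(file: dict) -> Document:
    # Unstructured file: keep the already-extracted text, trimmed
    return Document("drive", file["file_id"], file["content"].strip(), {"title": file["title"]})


def chunk(doc: Document, max_words: int = 50) -> list[Document]:
    """Split long text into word-bounded chunks so each fits a retrieval index."""
    words = doc.text.split()
    chunks = []
    for i in range(0, len(words), max_words):
        piece = " ".join(words[i:i + max_words])
        chunks.append(Document(doc.source, f"{doc.doc_id}#{i // max_words}", piece, doc.metadata))
    return chunks


def ingest(crm_rows: Iterable[dict], drive_files: Iterable[dict]) -> list[Document]:
    """Collect from each source, transform into one schema, then chunk."""
    docs = [normalize_crm_contact(r) for r in crm_rows]
    docs += [normalize_drive_file(f) for f in drive_files]
    return [c for d in docs for c in chunk(d)]
```

Normalizing before chunking means everything downstream (indexing, retrieval, prompting) only has to understand one record shape, regardless of how many sources are connected.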

However, this capability requires robust integrations with a wide variety of third-party applications. Whether it's CRM systems, analytics tools, or knowledge repositories, each integration provides an additional layer of context that the agent can leverage.

Without these integrations, AI agents would be confined to static or siloed information, limiting their ability to adapt to dynamic environments. For example, an AI-powered customer service bot lacking integration with an order management system might struggle to provide real-time updates on a customer’s order status, resulting in a frustrating user experience.

In many applications, the true value of AI agents lies in their ability to respond with real-time or near-real-time accuracy. Integrations with webhooks and streaming APIs enable the agent to access live data updates, ensuring that its responses remain relevant and timely.

Consider a scenario where an AI-powered invoicing assistant is tasked with generating invoices based on software usage. If the agent relies on a delayed data sync, it might fail to account for a client’s excess usage in the final moments before the invoice is generated. This oversight could result in inaccurate billing, financial discrepancies, and strained customer relationships.
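The invoicing risk comes down to simple arithmetic. The sketch below uses made-up timestamps, usage units, and a hypothetical six-hour batch-sync lag to show how a delayed copy of usage data undercounts what a live read would bill.

```python
from datetime import datetime, timedelta


def billable_units(events, cutoff):
    """Sum usage units for events recorded at or before `cutoff`."""
    return sum(units for ts, units in events if ts <= cutoff)


invoice_time = datetime(2025, 1, 31, 23, 59)
last_sync = invoice_time - timedelta(hours=6)  # hypothetical batch-sync lag

usage_events = [
    (datetime(2025, 1, 31, 9, 0), 400),
    (datetime(2025, 1, 31, 20, 30), 250),  # happens after the last batch sync
]

# A batch-synced copy only contains events seen up to last_sync
synced_copy = [e for e in usage_events if e[0] <= last_sync]

stale_total = billable_units(synced_copy, invoice_time)  # what the lagging agent bills
live_total = billable_units(usage_events, invoice_time)  # what was actually used
```

Here the agent working from the synced copy would bill 400 units instead of 650, and nothing in its own data would reveal the gap.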

Integrations are not merely a way to access data for AI agents; they are critical to enabling these agents to take meaningful actions on behalf of other applications. This capability is what transforms AI agents from passive data collectors into active participants in business processes.

Integrations play a crucial role in this process by connecting AI agents with different applications, enabling them to interact seamlessly and perform tasks on behalf of the user, triggering responses, updates, or actions in real time.

For instance, a customer service AI agent integrated with CRM platforms can automatically update customer records, initiate follow-up emails, and even generate reports based on the latest customer interactions. Similarly, if a popular product is running low, an e-commerce platform's AI agent can automatically reorder from the supplier, update the website’s product page with new availability dates, and notify customers about upcoming restocks. Likewise, a marketing AI agent integrated with CRM and marketing automation platforms (e.g., Mailchimp, ActiveCampaign) can automate email campaigns based on customer behaviors, such as opening specific emails, clicking on links, or making purchases.

Integrations allow AI agents to automate processes that span across different systems. For example, an AI agent integrated with a project management tool and a communication platform can automate task assignments based on project milestones, notify team members of updates, and adjust timelines based on real-time data from work management systems.

For developers driving these integrations, it’s essential to build robust APIs and use standardized protocols like OAuth for secure data access across each of the applications in use. They should also focus on real-time synchronization to ensure the AI agent acts on the most current data available. Proper error handling, logging, and monitoring mechanisms are critical to maintaining reliability and performance across integrations. Furthermore, as AI agents often interact with multiple platforms, developers should design integration solutions that can scale. This involves using scalable data storage solutions, optimizing data flow, and regularly testing integration performance under load.
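Error handling for integrations often starts with retrying transient failures (timeouts, rate limits) with exponential backoff and logging each attempt. This is a minimal sketch; `TransientError` is a hypothetical exception your API client would raise for retryable responses, and the attempt count and delays are illustrative defaults rather than recommended values.

```python
import logging
import random
import time

logger = logging.getLogger("integrations")


class TransientError(Exception):
    """Hypothetical error type for retryable failures (timeouts, HTTP 429/5xx)."""


def call_with_retry(fn, *, attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call fn(); on TransientError wait base_delay * 2**n plus jitter, then retry."""
    for attempt in range(attempts):
        try:
            return fn()
        except TransientError as exc:
            if attempt == attempts - 1:
                # Out of attempts: log and surface the error to the caller
                logger.error("giving up after %d attempts: %s", attempts, exc)
                raise
            # Exponential backoff with random jitter to avoid synchronized retries
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            logger.warning("attempt %d failed (%s); retrying in %.2fs", attempt + 1, exc, delay)
            sleep(delay)
```

Passing `sleep` as a parameter keeps the function testable and lets an async or scheduler-based caller substitute its own waiting strategy.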

Retrieval-Augmented Generation (RAG) is a transformative approach that enhances the capabilities of AI agents by addressing a fundamental limitation of generative AI models: reliance on static, pre-trained knowledge. RAG fills this gap by providing a way for AI agents to efficiently access, interpret, and utilize information from a variety of data sources. Here’s how integrations help in building RAG pipelines for AI agents:

Traditional APIs are optimized for structured data (like databases, CRMs, and spreadsheets). However, many of the most valuable insights for AI agents come from unstructured data—documents (PDFs), emails, chats, meeting notes, Notion, and more. Unstructured data often contains detailed, nuanced information that is not easily captured in structured formats.

RAG enables AI agents to access and leverage this wealth of unstructured data by integrating it into their decision-making processes. By integrating with these unstructured data sources, AI agents:

RAG involves not only the retrieval of relevant data from these sources but also the generation of responses based on this data. It allows AI agents to pull in information from different platforms, consolidate it, and generate responses that are contextually relevant.
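The retrieve-then-generate flow can be sketched in a few lines. This example swaps in bag-of-words cosine similarity as a stand-in for a real embedding model, and it stops at building the augmented prompt because the LLM call itself depends on whichever model provider you use; the document ids and texts in the usage are hypothetical.

```python
import math
import re
from collections import Counter


def _vec(text: str) -> Counter:
    # Bag-of-words term counts; a stand-in for a real embedding model
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[str]:
    """Return the ids of the k passages most similar to the query."""
    q = _vec(query)
    ranked = sorted(corpus, key=lambda doc_id: _cosine(q, _vec(corpus[doc_id])), reverse=True)
    return ranked[:k]


def build_prompt(query: str, corpus: dict[str, str], k: int = 2) -> str:
    """Augment the question with retrieved context before it is sent to an LLM."""
    context = "\n".join(f"[{i}] {corpus[i]}" for i in retrieve(query, corpus, k))
    return f"Answer using only the context below.\n{context}\n\nQuestion: {query}"
```

For example, with an HR corpus containing benefits, onboarding, and sales documents, `build_prompt("What dental benefits do employees get?", corpus, k=1)` pulls in only the benefits passage, so the model answers from company data rather than from its training set.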

For instance, an HR AI agent might need to pull data from employee records, performance reviews, and onboarding documents to answer a question about benefits. RAG enables this agent to access the necessary context and background information from multiple sources, ensuring the response is accurate and comprehensive through a single retrieval mechanism.

With webhooks keeping its underlying data fresh, RAG gives AI agents access to up-to-date information from across various platforms. This is critical for applications like customer service, where responses must be based on the latest data.

For example, if a customer asks about their recent order status, the AI agent can access real-time shipping data from a logistics platform, order history from an e-commerce system, and promotional notes from a marketing database—enabling it to provide a response with the latest information. Without RAG, the agent might only be able to provide a generic answer based on static data, leading to inaccuracies and customer frustration.
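Keeping a RAG index fresh usually means reacting to change events instead of waiting for the next batch sync. The following is a minimal sketch, assuming a hypothetical event payload (`type`, `record_id`, `text`), a placeholder secret, and an HMAC-SHA256 hex signature over the raw body; real providers each define their own payloads and signing headers, so check their documentation before relying on this shape.

```python
import hashlib
import hmac
import json

WEBHOOK_SECRET = b"replace-me"  # hypothetical shared secret configured with the source app


def verify_signature(body: bytes, signature: str, secret: bytes = WEBHOOK_SECRET) -> bool:
    """Check an HMAC-SHA256 hex signature over the raw request body (scheme assumed)."""
    expected = hmac.new(secret, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signature)


def handle_webhook(body: bytes, signature: str, index: dict[str, str]) -> str:
    """Apply a change event to the retrieval index so the next query sees fresh data."""
    if not verify_signature(body, signature):
        return "rejected"
    event = json.loads(body)
    record_id = event["record_id"]
    if event["type"] == "record.deleted":
        index.pop(record_id, None)
    else:  # treat created/updated events as upserts
        index[record_id] = event["text"]
    return "applied"
```

In a real deployment the `index` dict would be a vector store, and the upsert would re-embed the changed text; the verify-then-apply order stays the same.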

While RAG presents immense opportunities to enhance AI capabilities, its implementation comes with a set of challenges. Addressing these challenges is crucial to building efficient, scalable, and reliable AI systems.

Integration of an AI-powered customer service agent with CRM systems, ticketing platforms, and other tools can help enhance contextual knowledge and take proactive actions, delivering a superior customer experience.

For instance, when a customer reaches out with a query—such as a delayed order—the AI agent retrieves their profile from the CRM, including past interactions, order history, and loyalty status, to gain a comprehensive understanding of their background. Simultaneously, it queries the ticketing system to identify any related past or ongoing issues and checks the order management system for real-time updates on the order status. Combining this data, the AI develops a holistic view of the situation and crafts a personalized response. It may empathize with the customer’s frustration, offer an estimated delivery timeline, provide goodwill gestures like loyalty points or discounts, and prioritize the order for expedited delivery.
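Because the CRM, ticketing, and order lookups in this scenario do not depend on each other, an agent can issue them concurrently and merge the results into one context object. In this sketch the three `fetch_*` coroutines are hypothetical stand-ins returning canned data; real versions would call each system's API.

```python
import asyncio


# Hypothetical stand-ins for CRM, ticketing, and order-management API calls
async def fetch_crm_profile(customer_id: str) -> dict:
    await asyncio.sleep(0)
    return {"name": "Ada", "loyalty_tier": "gold"}


async def fetch_open_tickets(customer_id: str) -> list:
    await asyncio.sleep(0)
    return [{"id": "T-7", "subject": "Late delivery"}]


async def fetch_order_status(order_id: str) -> dict:
    await asyncio.sleep(0)
    return {"status": "in_transit", "eta": "2025-02-03"}


async def gather_context(customer_id: str, order_id: str) -> dict:
    """Query all three systems concurrently and merge the results into one context object."""
    profile, tickets, order = await asyncio.gather(
        fetch_crm_profile(customer_id),
        fetch_open_tickets(customer_id),
        fetch_order_status(order_id),
    )
    return {"profile": profile, "open_tickets": tickets, "order": order}
```

Running the lookups concurrently means response latency is bounded by the slowest system rather than the sum of all three.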

The AI agent also performs critical backend tasks to maintain consistency across systems. It logs the interaction details in the CRM, updating the customer’s profile with notes on the resolution and any loyalty rewards granted. The ticketing system is updated with a resolution summary, relevant tags, and any necessary escalation details. Simultaneously, the order management system reflects the updated delivery status, and insights from the resolution are fed into the knowledge base to improve responses to similar queries in the future. Furthermore, the AI captures performance metrics, such as resolution times and sentiment analysis, which are pushed into analytics tools for tracking and reporting.

In retail, AI agents can integrate with inventory management systems, customer loyalty platforms, and marketing automation tools for enhancing customer experience and operational efficiency. For instance, when a customer purchases a product online, the AI agent quickly retrieves data from the inventory management system to check stock levels. It can then update the order status in real time, ensuring that the customer is informed about the availability and expected delivery date of the product. If the product is out of stock, the AI agent can suggest alternatives that are similar in features, quality, or price, or provide an estimated restocking date to prevent customer frustration and offer a solution that meets their needs.
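The stock check and fallback suggestion can be expressed as a small rule. This sketch assumes a hypothetical `Product` record and defines "similar" as same category and within a price band (15% by default); a real agent might also weigh features or quality ratings pulled from the catalog.

```python
from dataclasses import dataclass


@dataclass
class Product:
    sku: str
    category: str
    price: float
    stock: int


def availability_reply(sku: str, catalog: dict[str, Product], price_tolerance: float = 0.15) -> dict:
    """Confirm stock, or suggest in-stock items of the same category within a price band."""
    item = catalog[sku]
    if item.stock > 0:
        return {"sku": sku, "available": True, "alternatives": []}
    alternatives = [
        p.sku for p in catalog.values()
        if p.sku != sku
        and p.stock > 0
        and p.category == item.category
        and abs(p.price - item.price) <= price_tolerance * item.price
    ]
    return {"sku": sku, "available": False, "alternatives": alternatives}
```

The agent can then phrase the reply for the customer and, if no alternatives qualify, fall back to quoting a restocking date from the inventory system.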

Similarly, if a customer frequently purchases similar items, the AI might note this and suggest additional products or promotions related to these interests in future communications. By integrating with marketing automation tools, the AI agent can personalize marketing campaigns, sending targeted emails, SMS messages, or notifications with relevant offers, discounts, or recommendations based on the customer’s previous interactions and buying behaviors. The AI agent also writes back data to customer profiles within the CRM system. It logs details such as purchase history, preferences, and behavioral insights, allowing retailers to gain a deeper understanding of their customers’ shopping patterns and preferences.

Integrating AI and RAG (Retrieval-Augmented Generation) pipelines into existing systems is crucial for leveraging their full potential, but it introduces significant technical challenges that organizations must navigate. These challenges span data ingestion, system compatibility, and scalability, often requiring specialized technical solutions and ongoing management to ensure successful implementation.

Adding integrations to AI agents involves giving them the ability to connect seamlessly with external systems, APIs, or services so they can access, exchange, and act on data. Here are the main ways to achieve this:

Custom development involves creating tailored integrations from scratch to connect the AI agent with various external systems. This method requires in-depth knowledge of APIs, data models, and custom logic. The process involves developing specific integrations to meet unique business requirements, ensuring complete control over data flows, transformations, and error handling. This approach is suitable for complex use cases where pre-built solutions may not suffice.

Embedded iPaaS (Integration Platform as a Service) solutions offer pre-built integration platforms that include no-code or low-code tools. These platforms allow organizations to quickly and easily set up integrations between the AI agent and various external systems without needing deep technical expertise. The integration process is simplified by using a graphical interface to configure workflows and data mappings, reducing development time and resource requirements.

Unified API solutions provide a single API endpoint that connects to multiple SaaS products and external systems, simplifying the integration process. This method abstracts the complexity of dealing with multiple APIs by consolidating them into a unified interface. It allows the AI agent to access a wide range of services, such as CRM systems, marketing platforms, and data analytics tools, through a seamless and standardized integration process.
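The core idea behind a unified API is a per-provider adapter that maps each app's native payload onto one common model. This sketch is simplified: the raw payloads loosely follow Salesforce's `Id`/`Name`/`Email` contact fields and HubSpot's `properties` object, but the common `id`/`name`/`email` model and the `get_contact` entry point are hypothetical, not any vendor's actual API.

```python
from typing import Callable


def from_salesforce(raw: dict) -> dict:
    return {"id": raw["Id"], "name": raw["Name"], "email": raw["Email"]}


def from_hubspot(raw: dict) -> dict:
    props = raw["properties"]
    return {"id": raw["id"], "name": f"{props['firstname']} {props['lastname']}", "email": props["email"]}


# One adapter per provider; adding a provider means adding one entry here
ADAPTERS: dict[str, Callable[[dict], dict]] = {
    "salesforce": from_salesforce,
    "hubspot": from_hubspot,
}


def get_contact(provider: str, raw: dict) -> dict:
    """Single entry point: the agent sees the same contact shape whatever the provider."""
    adapter = ADAPTERS.get(provider)
    if adapter is None:
        raise ValueError(f"unsupported provider: {provider}")
    return adapter(raw)
```

The AI agent only ever reasons about `id`, `name`, and `email`, so its prompts and tools do not change when a customer switches CRMs.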

Knit offers a game-changing solution for organizations looking to integrate their AI agents with a wide variety of SaaS applications quickly and efficiently. By providing a seamless, AI-driven integration process, Knit empowers businesses to unlock the full potential of their AI agents by connecting them with the necessary tools and data sources.

By integrating with Knit, organizations can power their AI agents to interact seamlessly with a wide array of applications. This capability not only enhances productivity and operational efficiency but also allows for the creation of innovative use cases that would be difficult to achieve with manual integration processes. Knit thus transforms how businesses utilize AI agents, making it easier to harness the full power of their data across multiple platforms.

Ready to see how Knit can transform your AI agents? Contact us today for a personalized demo!


In our earlier posts, we explored the fundamentals of the Model Context Protocol (MCP), what it is, how it works, and the underlying architecture that powers it. We've walked through how MCP enables standardized communication between AI agents and external tools, how the protocol is structured for extensibility, and what an MCP server looks like under the hood.

But a critical question remains: Why does MCP matter?

Why are AI researchers, developers, and platform architects buzzing about this protocol? Why are major players in the AI space rallying around MCP as a foundational building block? Why should developers, product leaders, and enterprise stakeholders pay attention?

This post dives deep into the “why.” It reveals how MCP addresses some of the most pressing limitations in AI systems today and unlocks a future of more powerful, adaptive, and useful AI applications.

One of the biggest pain points in the AI tooling ecosystem has been integration fragmentation. Every time an AI product needs to connect to a different application, whether Google Drive, Slack, Jira, or Salesforce, it typically requires building a custom integration with proprietary APIs.

MCP changes this paradigm.

This means time savings, scalability, and sustainability in how AI systems are built and maintained.

Unlike traditional systems where available functions are pre-wired, MCP empowers AI agents with dynamic discovery capabilities at runtime.

This level of adaptability makes MCP-based systems far easier to maintain, extend, and evolve.
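Under the hood, MCP messages are JSON-RPC 2.0: a client asks a server what it offers with a `tools/list` request, whose result lists each tool's `name`, `description`, and `inputSchema`, and invokes one with `tools/call`. The sketch below only builds and parses those messages, with no transport (stdio or HTTP) attached; the `create_ticket` tool used to exercise it is hypothetical, and the required-argument check is a simplification rather than full JSON Schema validation.

```python
import itertools
import json
from typing import Optional

_ids = itertools.count(1)


def make_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP is built on."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


def discover_tools(list_response: str) -> dict:
    """Index the tools a server advertised in its tools/list result by name."""
    result = json.loads(list_response)["result"]
    return {tool["name"]: tool for tool in result["tools"]}


def call_tool(tools: dict, name: str, arguments: dict) -> str:
    """Build a tools/call request after checking required arguments from inputSchema."""
    schema = tools[name].get("inputSchema", {})
    missing = [k for k in schema.get("required", []) if k not in arguments]
    if missing:
        raise ValueError(f"missing arguments for {name}: {missing}")
    return make_request("tools/call", {"name": name, "arguments": arguments})
```

Because the tool list is read at runtime, a server that adds a new tool becomes usable by the agent without any change to this client code.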

AI agents, especially those based on LLMs, are powerful language processors, but they're often context-blind.

They don’t know what document you’re working on, which tickets are open in your helpdesk tool, or what changes were made to your codebase yesterday, unless you explicitly tell them.

In short, MCP helps bridge the gap between static knowledge and situational awareness.

MCP empowers AI agents to not only understand but also take action, pushing the boundary from “chatbot” to autonomous task agent.

This shifts AI from a passive advisor to an active partner in digital workflows, unlocking higher productivity and automation.

Unlike proprietary plugins or closed API ecosystems, MCP is being developed as an open standard, with backing from the broader AI and open-source communities. Platforms like LangChain, OpenAgents, and others are already building tooling and integrations on top of MCP.

This collaborative model fosters a network effect: the more tools support MCP, the more valuable and versatile the ecosystem becomes.

MCP’s value proposition isn’t just theoretical; it translates into concrete benefits for users, developers, and organizations alike.

MCP-powered AI assistants can integrate seamlessly with tools users already rely on, such as Google Docs, Jira, and Outlook. The result? Smarter, more personalized, and more useful AI experiences.

Example: Ask your AI assistant,

“Summarize last week’s project notes and schedule a review with the team.”

With MCP-enabled tool access, the assistant can:

All without you needing to lift a finger.

Building AI applications becomes faster and simpler. Instead of hard-coding integrations, developers can rely on reusable MCP servers that expose functionality via a common protocol.

This lets developers:

Organizations benefit from:

MCP allows large-scale systems to evolve with confidence.

By creating a shared method for handling context, actions, and permissions, MCP adds order to the chaos of AI-tool interactions.

MCP is more than a technical protocol; it’s a step toward autonomous, agent-driven computing.

Imagine agents that:

From smart scheduling to automated reporting, from customer support bots that resolve issues end-to-end to research assistants that can scour data sources and summarize insights, MCP is the backbone that enables this reality.

MCP isn’t just another integration protocol. It’s a revolution in how AI understands, connects with, and acts upon the world around it.

It transforms AI from static, siloed interfaces into interoperable, adaptable, and deeply contextual digital agents, the kind we need for the next generation of computing.

Whether you’re building AI applications, leading enterprise transformation, or exploring intelligent assistants for your own workflows, understanding and adopting MCP could be one of the smartest strategic decisions you make this decade.

1. How does MCP improve AI agent interoperability?

MCP provides a common interface through which AI models can interact with various tools. This standardization eliminates the need for bespoke integrations and enables cross-platform compatibility.

2. Why is dynamic tool discovery important in AI applications?

It allows AI agents to automatically detect and integrate new tools at runtime, making them adaptable without requiring code changes or redeployment.

3. What makes MCP different from traditional API integrations?

Traditional integrations are static and bespoke. MCP is modular, reusable, and designed for runtime discovery and standardized interaction.

4. How does MCP help make AI more context-aware?

MCP enables real-time access to live data and environments, so AI can understand and act based on current user activity and workflow context.

5. What’s the advantage of MCP for enterprise IT teams?

Enterprises gain governance, scalability, and resilience from MCP’s standardized and vendor-neutral approach, making system maintenance and upgrades easier.

6. Can MCP reduce development effort for new AI features?

Absolutely. MCP servers can be reused across applications, reducing the need to rebuild connectors and enabling rapid prototyping.

7. Does MCP support real-time action execution?

Yes. MCP allows AI agents to execute actions like sending emails or updating databases, directly through connected tools.

8. How does MCP foster innovation?

By lowering the barrier to integration, MCP encourages more developers to experiment and build, accelerating innovation in AI-powered services.

9. What are the security benefits of MCP?

MCP allows for controlled access to tools and data, with permission scopes and context-aware governance for safer deployments.

10. Who benefits most from MCP adoption?

Developers, end users, and enterprises all benefit, through faster build cycles, richer AI experiences, and more manageable infrastructures.


As SaaS adoption soars, integrations have become critical. Building and managing them in-house is resource-heavy. That’s where unified APIs come in, offering 1:many integrations and drastically simplifying integration development. Merge.dev has emerged as a popular solution, but it's far from the only one. If you're searching for Merge API competitors, this guide dives deep into the top alternatives—starting with Knit, the security-first unified API.

Merge.dev provides a unified API to integrate multiple apps in the same category—like HRIS or ATS—with one connector. Key benefits include:

However, it’s not without pain points:

While Merge.dev is a strong contender, several alternatives address its limitations and offer unique advantages. Here are some of the top Merge API competitors:

Knit is a standout among Merge API competitors. It’s purpose-built for businesses that care about data security, flexibility, and real-time sync.

Unlike Merge, Knit does not store any customer data. All data requests are pass-through. Merge stores and serves data from cache under the guise of differential syncing. Knit offers the same differential capabilities—without compromising on privacy.

Knit gives your end users granular control over what data gets shared during integration. Users can toggle scopes directly from the auth component—an industry-first feature.

Merge struggles when your use case doesn’t fit its common models, pushing you toward complex passthroughs. Knit’s AI Connector Builder builds a custom connector instantly.

Merge locks essential features and support behind premium tiers. Knit’s transparent pricing (starts at $4,800/year) gives you more capabilities and better support—at a lower cost.

Merge requires polling or relies on unreliable webhooks. Knit uses pure push-based data sync. You get guaranteed data delivery at scale, with a 99.99% SLA.

Knit uses a JavaScript SDK—not an iframe. You can fully customize UI, branding, and even email templates to match your product experience.

Knit lets you:

Knit offers more vertical and horizontal coverage than Merge:

From deep RCA tools to logs and dashboards, Knit empowers CX teams to manage syncs without engineering support.

You’re not limited to a common model. Knit supports mapping custom fields and controlling granular read/write permissions.

Best for: HRIS & Payroll integrations

Pricing: ~$600/account/year (limited features)

Best for: Broad API category coverage

Pricing: Starts at $299/month with 10K API call limit

Best for: SaaS companies needing HRIS/ATS integrations

Pricing: $1200+/month + per-customer fees

Best for: AI-powered integration framework

Pricing: $1200+/month + per-customer fees

While every tool has its strengths, Knit is the only Merge.dev competitor that:

If you're serious about secure, scalable, and cost-effective integrations, Knit is the best Merge API alternative for your SaaS product. Get in touch today to learn more!


You've equipped your AI agent with knowledge using Retrieval-Augmented Generation (RAG) and the ability to perform actions using Tool Calling. These are fundamental building blocks. However, many real-world enterprise tasks aren't simple, single-step operations. They often involve complex sequences, multiple applications, conditional logic, and sophisticated data manipulation.

Consider onboarding a new employee: it might involve updating the HR system, provisioning IT access across different platforms, sending welcome emails, scheduling introductory meetings, and adding the employee to relevant communication channels. A simple loop of "think-act-observe" might be inefficient or insufficient for such multi-stage processes.

This is where advanced integration patterns and workflow orchestration become crucial. These techniques provide structure and intelligence to manage complex interactions, enabling AI agents to tackle sophisticated, multi-step tasks autonomously and efficiently.

This post explores key advanced patterns beyond basic RAG and Tool Calling, including handling multiple app instances, orchestrating multi-tool sequences, specialized agent roles, and emerging standards.

Return to our main guide: The Ultimate Guide to Integrating AI Agents in Your Enterprise. This post builds upon the basic actions covered in Empowering AI Agents to Act: Mastering Tool Calling & Function Execution.

A common scenario involves needing the AI agent to interact with multiple instances of the same type of application. For example, a sales agent might need to access both the company's primary Salesforce instance and a secondary HubSpot CRM used by a specific division. How do you configure the agent to handle this?

There are two primary approaches:

The best approach depends on whether explicit control over instance selection or seamless abstraction is more important for the specific use case.
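Both approaches can be sketched side by side. The instance names and in-memory records here are hypothetical; in practice each instance would be a separate authenticated API client. The first function makes instance selection an explicit tool argument the agent must fill in; the second hides the instances behind a single lookup.

```python
from typing import Optional

# Hypothetical per-instance data; a real setup would hold separate credentials per instance
CRM_INSTANCES = {
    "salesforce_primary": {"acme": {"owner": "Lee", "source": "salesforce_primary"}},
    "hubspot_emea": {"globex": {"owner": "Ana", "source": "hubspot_emea"}},
}


def lookup_account_explicit(instance: str, account: str) -> Optional[dict]:
    """Explicit control: the agent names the instance as a tool argument."""
    if instance not in CRM_INSTANCES:
        raise ValueError(f"unknown CRM instance: {instance}")
    return CRM_INSTANCES[instance].get(account)


def lookup_account_abstracted(account: str) -> Optional[dict]:
    """Abstraction: one tool hides the instances and searches each in turn."""
    for records in CRM_INSTANCES.values():
        if account in records:
            return records[account]
    return None
```

The explicit version gives auditable control over which system is touched; the abstracted version keeps the agent's tool list short but needs a rule for conflicts when the same account exists in more than one instance (this sketch simply returns the first match).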

Tasks that require a sequence of dependent actions need more structured orchestration than the basic think-act-observe loop of patterns such as ReAct. These methods aim to improve efficiency and reliability and to reduce redundant LLM calls.

The Plan-and-Execute pattern decouples planning from execution.
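A minimal sketch of Plan-and-Execute, using the employee-onboarding example from earlier: a planner produces the full list of steps once, and an executor runs them without returning to the planner after each step. The planner here is a hard-coded stub standing in for a single LLM call, and the three tools are hypothetical.

```python
from typing import Callable

# Hypothetical tools the executor may call
TOOLS: dict[str, Callable[[str], str]] = {
    "create_hr_record": lambda name: f"hr:{name}",
    "provision_email": lambda name: f"{name.lower()}@example.com",
    "send_welcome": lambda name: f"welcome sent to {name}",
}


def plan(goal: str, employee: str) -> list[tuple[str, str]]:
    """Stand-in planner: a real one would make one LLM call to produce this step list."""
    return [
        ("create_hr_record", employee),
        ("provision_email", employee),
        ("send_welcome", employee),
    ]


def execute(steps: list[tuple[str, str]]) -> list[str]:
    """Executor: run each planned step in order without consulting the planner again."""
    results = []
    for tool_name, arg in steps:
        results.append(TOOLS[tool_name](arg))
    return results
```

Many implementations add an optional replanning step when a tool fails; this sketch omits it to keep the planning/execution split visible.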

ReWOO aims to optimize planning further by structuring tasks upfront without necessarily waiting for intermediate results, potentially reducing latency and token usage.
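In ReWOO the plan is written up front, and later steps refer to the not-yet-known outputs of earlier ones through placeholders such as `#E1`. The worker below fills those placeholders in as evidence arrives, with no LLM call between steps; a final solver call would then turn the collected evidence into an answer. The plan, tool names, and tool behavior are hypothetical.

```python
import re

# Plan written up front; later steps reference earlier evidence as #E1, #E2, ...
PLAN = [
    ("#E1", "lookup_employee", "Priya"),
    ("#E2", "lookup_manager", "#E1"),
    ("#E3", "draft_intro_email", "#E1 and #E2"),
]

REWOO_TOOLS = {
    "lookup_employee": lambda q: f"{q} (Engineering)",
    "lookup_manager": lambda q: "Sam" if "Engineering" in q else "unknown",
    "draft_intro_email": lambda q: f"Intro: {q}",
}


def run_rewoo(plan):
    """Worker: execute each step, substituting evidence from earlier steps into its input."""
    evidence = {}
    for var, tool, arg in plan:
        resolved = re.sub(r"#E\d+", lambda m: evidence[m.group(0)], arg)
        evidence[var] = REWOO_TOOLS[tool](resolved)
    return evidence
```

Because the planner never sees intermediate results, the whole task costs one planning call plus one solving call, which is where ReWOO's token and latency savings come from.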

This approach focuses on maximum acceleration by executing tasks eagerly within a graph structure, minimizing LLM interactions.
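One way to picture eager graph execution (the idea behind approaches such as LLMCompiler) is a dependency graph in which each task starts the moment its inputs are ready, so independent tasks run in parallel. This asyncio sketch assumes the graph has already been produced by a planner and is acyclic; a cycle would leave tasks waiting on each other forever.

```python
import asyncio


async def run_dag(tasks: dict, deps: dict) -> dict:
    """Start every task as soon as its dependencies finish; independent tasks run concurrently.

    tasks: name -> async function taking a dict of dependency results
    deps:  name -> list of dependency names (tasks without an entry have none)
    """
    futures: dict = {}

    async def run(name):
        # Wait only for this task's own dependencies, then run it
        dep_results = {d: await futures[d] for d in deps.get(name, [])}
        return await tasks[name](dep_results)

    # Schedule everything up front; ordering is enforced by the awaits inside run()
    for name in tasks:
        futures[name] = asyncio.create_task(run(name))
    return {name: await fut for name, fut in futures.items()}
```

For example, two independent data fetches and a third task that combines them would run as two parallel fetches followed by the combine step, without an LLM call in between.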

Frameworks supporting these patterns are discussed in Navigating the AI Agent Integration Landscape: Key Frameworks & Tools. Because complexity introduces its own challenges, see also Overcoming the Hurdles: Common Challenges in AI Agent Integration (& Solutions).

Beyond general task execution, agents can be designed for specific advanced functions:

As the need for seamless integration grows, efforts are underway to standardize how AI models interact with external data and tools. The Model Context Protocol (MCP) is one such emerging open standard.

While promising for the future, MCP requires further development and adoption before becoming a widespread solution for enterprise integration challenges.

Mastering basic RAG and Tool Calling is just the beginning. To tackle the complex, multi-faceted tasks common in enterprise environments, developers must leverage advanced integration patterns and orchestration techniques. Whether it's managing connections to multiple CRM instances, structuring complex workflows using Plan-and-Execute or ReWOO, or designing specialized data enrichment agents, these advanced methods unlock a higher level of AI capability. By understanding and applying these patterns, you can build AI agents that are not just knowledgeable and active, but truly strategic assets capable of navigating intricate business processes autonomously and efficiently.


Artificial Intelligence (AI) agents are rapidly moving beyond futuristic concepts to become powerful, practical tools within the modern enterprise. These intelligent software entities can automate complex tasks, understand natural language, make decisions, and interact with digital environments with increasing autonomy. From streamlining customer service with intelligent chatbots to optimizing supply chains and accelerating software development, AI agents promise unprecedented gains in efficiency, innovation, and personalized experiences.

However, the true transformative power of an AI agent isn't just in its inherent intelligence; it's in its connectivity. An AI agent operating in isolation is like a brilliant mind locked in a room – full of potential but limited in impact. To truly revolutionize workflows and deliver significant business value, AI agents must be seamlessly integrated with the vast ecosystem of applications, data sources, and digital tools that power your organization.

This guide provides a comprehensive overview of AI agent integration, exploring why it's essential and introducing the core concepts you need to understand. We'll touch upon:

Think of this as your starting point – your map to navigating the exciting landscape of enterprise AI agent integration.

The demand for sophisticated AI agents stems from their ability to perform tasks that previously required human intervention. But to act intelligently, they need two fundamental things that only integration can provide: contextual knowledge and the ability to take action.

AI models, including those powering agents, are often trained on vast but ultimately static datasets. While this provides a broad base of knowledge, it quickly becomes outdated and lacks the specific, dynamic context of your business environment. Real-world effectiveness requires access to:

Integration bridges this gap. By connecting AI agents to your databases, CRMs, ERPs, document repositories, and collaboration tools, you empower them with the up-to-the-minute, specific context needed to provide relevant answers, make informed decisions, and personalize interactions. Techniques like Retrieval-Augmented Generation (RAG) are key here, allowing agents to fetch relevant information from connected sources before generating a response.

Dive deeper into how RAG works in our dedicated post: Unlocking AI Knowledge: A Deep Dive into Retrieval-Augmented Generation (RAG)

Understanding context is only half the battle. The real magic happens when AI agents can act on that understanding within your existing workflows. This means moving beyond simply answering questions to actively performing tasks like:

This capability, often enabled through Tool Calling or Function Calling, allows agents to interact directly with the APIs of other applications. By granting agents controlled access to specific "tools" (functions within other software), you transform them from passive information providers into active participants in your business processes. Imagine an agent not just identifying a sales lead but also automatically adding it to the CRM and scheduling a follow-up task. That's the power of action-oriented integration.
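Tool calling typically works by describing functions to the model as JSON Schema and then executing whatever call the model returns. This sketch assumes the model's output arrives as a dict with a `name` and a JSON-encoded `arguments` string, a shape similar to what several LLM APIs return but not tied to any specific one; the `create_crm_lead` tool and the in-memory CRM list are hypothetical.

```python
import json

# Tool definitions advertised to the model (JSON Schema for parameters)
TOOL_SPECS = [{
    "name": "create_crm_lead",
    "description": "Add a sales lead to the CRM",
    "parameters": {
        "type": "object",
        "properties": {"email": {"type": "string"}, "company": {"type": "string"}},
        "required": ["email"],
    },
}]
SPECS_BY_NAME = {spec["name"]: spec for spec in TOOL_SPECS}

CRM = []  # hypothetical in-memory stand-in for the CRM


def create_crm_lead(email: str, company: str = "") -> dict:
    lead = {"id": len(CRM) + 1, "email": email, "company": company}
    CRM.append(lead)
    return lead


REGISTRY = {"create_crm_lead": create_crm_lead}


def dispatch(tool_call: dict) -> str:
    """Execute a model-issued call and return a JSON result to feed back to the model."""
    name = tool_call["name"]
    if name not in REGISTRY:
        return json.dumps({"error": f"unknown tool {name}"})
    args = json.loads(tool_call["arguments"])
    missing = [k for k in SPECS_BY_NAME[name]["parameters"]["required"] if k not in args]
    if missing:
        return json.dumps({"error": f"missing arguments: {missing}"})
    return json.dumps(REGISTRY[name](**args))
```

Returning errors as JSON instead of raising lets the model see what went wrong and retry with corrected arguments, which keeps the agent loop running.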

Learn how to empower your agents to act: Empowering AI Agents to Act: Mastering Tool Calling & Function Execution

While there are nuances and advanced techniques, most AI agent integration strategies revolve around the two core concepts mentioned above:

These two methods often work hand-in-hand. An agent might use RAG to gather information about a customer's issue from various sources and then use Tool Calling to update the support ticket in the helpdesk system.

Integrating AI agents isn't always straightforward. Organizations typically face several hurdles:

Explore these challenges in detail and learn how to overcome them: Overcoming the Hurdles: Common Challenges in AI Agent Integration (& Solutions)

As agents become more sophisticated, integration patterns evolve. We're seeing the rise of:

Discover advanced techniques: Orchestrating Complex AI Workflows: Advanced Integration Patterns and explore frameworks: Navigating the AI Agent Integration Landscape: Key Frameworks & Tools

AI agents represent a significant leap forward in automation and intelligent interaction. But their success within your enterprise hinges critically on thoughtful, robust integration. By connecting agents to your unique data landscape and empowering them to act within your existing workflows, you move beyond novelty AI to create powerful tools that drive real business outcomes.

While challenges exist, the methodologies, frameworks, and tools available are rapidly maturing. Understanding the core principles of RAG for knowledge and Tool Calling for action, anticipating the common hurdles, and exploring advanced patterns will position you to harness the full, transformative potential of integrated AI agents.

Ready to dive deeper? Explore the related posts linked throughout this guide or check out our AI Agent Integration FAQ for answers to common questions.


We've explored the 'why' and 'how' of AI agent integration, delving into Retrieval-Augmented Generation (RAG) for knowledge, Tool Calling for action, advanced orchestration patterns, and the frameworks that bring it all together. But what does successful integration look like in practice? How are businesses leveraging connected AI agents to solve real problems and create tangible value?

Theory is one thing; seeing integrated AI agents perform complex tasks within specific business contexts truly highlights their transformative potential. This post examines concrete use cases to illustrate how seamless integration enables AI agents to become powerful operational assets.

Return to our main guide: The Ultimate Guide to Integrating AI Agents in Your Enterprise

The Scenario: A customer contacts an online retailer via chat asking, "My order #12345 seems delayed, what's the status and when can I expect it?" A generic chatbot might offer a canned response or require the customer to navigate complex menus. An integrated AI agent can provide a much more effective and personalized experience.

The Integrated Systems: To handle this scenario effectively, the AI agent needs connections to multiple backend systems:

How the Integrated Agent Works:

The Benefits: Faster resolution times, significantly improved customer satisfaction through personalized and accurate information, reduced workload for human agents (freeing them for complex issues), consistent application of company policies, and valuable data logging for service improvement analysis.
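This minimal Python sketch shows how an agent could assemble a grounded answer for the order #12345 question. It combines a record from an order management system, delay data from a shipping carrier, and a retrieved company policy. All three sources are hypothetical in-memory stand-ins, and the delay policy text is invented for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Order:
    order_id: str
    tracking_number: str
    promised_date: date


# Hypothetical stand-ins for an order management system and a carrier tracking API.
ORDERS = {"12345": Order("12345", "TRK-987", date(2025, 3, 10))}
CARRIER_DELAYS_DAYS = {"TRK-987": 2}  # the carrier reports a 2-day delay

# Hypothetical policy snippet the agent might retrieve from a knowledge base.
DELAY_POLICY = "Orders delayed more than 1 day qualify for a shipping refund."


def order_status_reply(order_id: str) -> str:
    """Combine order data, carrier data, and policy into one answer."""
    order = ORDERS.get(order_id)
    if order is None:
        return f"I couldn't find order #{order_id}. Could you double-check the number?"
    delay = CARRIER_DELAYS_DAYS.get(order.tracking_number, 0)
    eta = order.promised_date + timedelta(days=delay)
    reply = f"Order #{order_id} is expected on {eta.isoformat()}."
    if delay > 1:
        # Apply the policy consistently instead of leaving it to agent discretion.
        reply += f" It is running {delay} days late. {DELAY_POLICY}"
    return reply


if __name__ == "__main__":
    print(order_status_reply("12345"))
```

In a full agent, the two lookups would be tool calls and the policy would come from RAG. The composition step stays the same either way.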

Related: Unlocking AI Knowledge: A Deep Dive into Retrieval-Augmented Generation (RAG) | Empowering AI Agents to Act: Mastering Tool Calling & Function Execution

The Scenario: A customer browsing a retailer's website adds an item to their cart but sees an "Only 2 left in stock!" notification. They ask a chat agent, "Do you have more of this item coming soon, or is it available at the downtown store?"

The Integrated Systems: An effective retail AI agent needs connectivity beyond the website:

How the Integrated Agent Works:

The Benefits: Seamless omni-channel experience, reduced lost sales due to stockouts (by offering alternatives or notifications), improved inventory visibility for customers, increased engagement through personalized recommendations, enhanced customer data capture, and more efficient use of marketing tools.
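The Python sketch below shows how an agent might answer "more coming soon, or available downtown?" by querying three hypothetical sources: online stock, per-store stock, and inbound shipments. The SKU, store names, quantities, and dates are invented for illustration. In practice these lookups would be tool calls against your inventory, point-of-sale, and supply-chain systems.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class InboundShipment:
    sku: str
    quantity: int
    arrival: date


# Hypothetical data from an inventory system, store feeds, and a supply-chain feed.
ONLINE_STOCK = {"SKU-42": 2}
STORE_STOCK = {("downtown", "SKU-42"): 5, ("airport", "SKU-42"): 0}
INBOUND = [InboundShipment("SKU-42", 100, date(2025, 4, 1))]


def next_restock(sku: str) -> Optional[InboundShipment]:
    """Return the earliest inbound shipment for the SKU, if any."""
    shipments = [s for s in INBOUND if s.sku == sku]
    return min(shipments, key=lambda s: s.arrival, default=None)


def availability_answer(sku: str, store: str) -> str:
    """Summarize online, in-store, and incoming availability in one reply."""
    parts = [f"{ONLINE_STOCK.get(sku, 0)} left online"]
    in_store = STORE_STOCK.get((store, sku), 0)
    parts.append(f"{in_store} at the {store} store" if in_store else f"none at the {store} store")
    restock = next_restock(sku)
    if restock is not None:
        parts.append(f"{restock.quantity} more arriving {restock.arrival.isoformat()}")
    return "; ".join(parts) + "."


if __name__ == "__main__":
    print(availability_answer("SKU-42", "downtown"))
```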

These examples clearly demonstrate that the true value of AI agents in the enterprise comes from their ability to operate within the existing ecosystem of tools and data. Whether it's pulling real-time order status, checking multi-channel inventory, updating CRM records, or triggering marketing campaigns, integration is the engine that drives meaningful automation and intelligent interactions. By thoughtfully connecting AI agents to relevant systems using techniques like RAG and Tool Calling, businesses can move beyond simple chatbots to create sophisticated digital assistants that solve complex problems and deliver significant operational advantages. Think about your own business processes – where could an integrated AI agent make the biggest impact?

Facing hurdles? See common issues and solutions: Overcoming the Hurdles: Common Challenges in AI Agent Integration (& Solutions)


The Model Context Protocol (MCP) represents one of the most significant developments in enterprise AI integration. In our previous articles, we’ve unpacked the fundamentals of MCP, covering its core architecture, technical capabilities, advantages, limitations, and future roadmap. Now, we turn to the key strategic question facing enterprise leaders: should your organization adopt MCP today, or wait for the ecosystem to mature?

The stakes are particularly high because MCP adoption decisions affect not just immediate technical capabilities, but long-term architectural choices, vendor relationships, and competitive positioning. Organizations that adopt too early may face technical debt and security vulnerabilities, while those who wait too long risk falling behind competitors who successfully leverage MCP's advantages in AI-driven automation and decision-making.

This comprehensive guide provides enterprise decision-makers with a strategic framework for evaluating MCP adoption timing, examining real-world implementation challenges, and understanding the protocol's potential return on investment.

The decision to adopt MCP now versus waiting should be based on a systematic evaluation of organizational context, technical requirements, and strategic objectives. This framework provides structure for making this critical decision:

Several scenarios strongly favor immediate MCP adoption, particularly when the benefits clearly outweigh the associated risks and implementation challenges.

Early MCP adopters can capture several strategic advantages that may be difficult to achieve later:

Organizations choosing early adoption can implement several strategies to mitigate associated risks:

Despite MCP's promising capabilities, several scenarios strongly suggest waiting for greater maturity before implementation.

The rapid pace of MCP development, while exciting, creates stability concerns for enterprise adoption:

For many organizations, neither immediate full adoption nor complete deferral represents the optimal approach. A gradual, phased adoption strategy can balance innovation opportunities with risk management:

Organizations can implement MCP selectively, focusing on areas where benefits are clearest while maintaining existing solutions elsewhere:

Even organizations not immediately implementing MCP can prepare for eventual adoption:

Successful MCP implementation requires careful planning and foundation building:

The pilot phase focuses on learning and capability building:

Production deployment requires careful scaling and risk management:

Long-term success requires continuous improvement and scaling:

The decision to adopt MCP now versus waiting requires careful consideration of multiple factors that vary significantly across organizations and use cases. This is not a binary choice between immediate adoption and indefinite delay, but rather a strategic decision that should be based on specific organizational context, risk tolerance, and business objectives.

Based on current market conditions and technology maturity, we recommend the following timeline considerations:

The Model Context Protocol represents a significant evolution in AI integration capabilities that will likely become a standard part of the enterprise technology stack. The question is not whether to adopt MCP, but when and how to do so strategically.

Organizations should begin preparing for MCP adoption now, even if they choose not to implement it immediately. This preparation includes developing relevant expertise, establishing security frameworks, evaluating vendor options, and identifying priority use cases. This approach ensures readiness when implementation timing becomes optimal for their specific situation.

1. What is the minimum technical expertise required for MCP implementation?

MCP implementation requires expertise in several technical areas: protocol design and JSON-RPC communication, AI integration and agent development, modern security practices including authentication and authorization, and cloud infrastructure management.
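As a feel for the JSON-RPC side of this, MCP messages are JSON-RPC 2.0 objects. The specification defines methods such as `tools/list`, which discovers a server's tools, and `tools/call`, which invokes a tool with `name` and `arguments` params. This Python sketch only builds and parses messages. It omits the transport and the `initialize` handshake that a real session begins with, and `get_order_status` is a hypothetical tool name.

```python
import itertools
import json
from typing import Any, Optional

_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request with a unique, incrementing id."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def parse_response(raw: str) -> Any:
    """Return the result, or raise if the server replied with a JSON-RPC error."""
    message = json.loads(raw)
    if "error" in message:
        err = message["error"]
        raise RuntimeError(f"JSON-RPC error {err['code']}: {err['message']}")
    return message["result"]


if __name__ == "__main__":
    print(jsonrpc_request("tools/list"))
    print(jsonrpc_request("tools/call", {
        "name": "get_order_status",
        "arguments": {"order_id": "12345"},
    }))
```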

2. How does MCP compare to OpenAI's function calling in terms of capabilities and limitations?

MCP and OpenAI's function calling serve similar purposes but differ significantly in approach. OpenAI's function calling is platform-specific, operates on a per-request basis, and requires predefined function schemas. MCP is model-agnostic, maintains persistent connections, and enables dynamic tool discovery. MCP provides greater flexibility and standardization but requires more complex infrastructure. Organizations heavily invested in OpenAI platforms might prefer function calling for simplicity, while those needing multi-platform AI integration benefit more from MCP.
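The two approaches can also be bridged. An MCP server describes each tool in its `tools/list` result with `name`, `description`, and an `inputSchema` (JSON Schema). OpenAI's Chat Completions API expects `{"type": "function", "function": {"name", "description", "parameters"}}`. The adapter below is a sketch that passes tools discovered at runtime to a function-calling model. Both formats evolve, so check the current documentation before relying on the exact field names.

```python
from typing import Any


def mcp_tool_to_openai(tool: dict[str, Any]) -> dict[str, Any]:
    """Map one MCP tool definition onto the OpenAI function-calling tool format."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # Both sides use JSON Schema, so the input schema passes through unchanged.
            "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
        },
    }


def convert_tools_list(result: dict[str, Any]) -> list[dict[str, Any]]:
    """Convert the `tools` array from an MCP tools/list result."""
    return [mcp_tool_to_openai(tool) for tool in result.get("tools", [])]


if __name__ == "__main__":
    discovered = {"tools": [{
        "name": "get_order_status",  # hypothetical tool
        "description": "Look up an order by ID",
        "inputSchema": {"type": "object",
                        "properties": {"order_id": {"type": "string"}},
                        "required": ["order_id"]},
    }]}
    print(convert_tools_list(discovered))
```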

3. Can MCP integrate with existing enterprise identity management systems?

MCP integration with enterprise identity management is possible but challenging with current implementations. The protocol supports OAuth 2.1, but integration with enterprise SSO systems, Active Directory, and identity governance platforms often requires custom development. The MCP roadmap includes enterprise-managed authorization features that will improve this integration. Organizations should plan for custom authentication layers until these enterprise features mature.
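A custom authentication layer of this kind often amounts to mapping directory groups to the tools a user may invoke. The sketch below assumes the caller's identity and groups have already been extracted from a validated SSO token. The group names and tool names are hypothetical, and access is denied unless a group explicitly grants it.

```python
from dataclasses import dataclass, field


@dataclass
class Principal:
    """Caller identity, assumed to come from an already-validated SSO token."""
    user_id: str
    groups: set[str] = field(default_factory=set)


# Hypothetical mapping from directory groups to the MCP tools they may invoke.
GROUP_TOOL_GRANTS: dict[str, set[str]] = {
    "support-agents": {"get_order_status", "update_ticket"},
    "finance": {"issue_refund"},
}


def allowed_tools(principal: Principal) -> set[str]:
    """Return the union of tools granted to any of the principal's groups."""
    granted: set[str] = set()
    for group in principal.groups:
        granted |= GROUP_TOOL_GRANTS.get(group, set())
    return granted


def authorize_tool_call(principal: Principal, tool_name: str) -> None:
    """Deny by default: raise unless some group grants this tool."""
    if tool_name not in allowed_tools(principal):
        raise PermissionError(f"{principal.user_id} may not call {tool_name}")


if __name__ == "__main__":
    alice = Principal("alice", {"support-agents"})
    authorize_tool_call(alice, "get_order_status")  # permitted, returns None
    print(sorted(allowed_tools(alice)))
```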

4. What is the typical return on investment timeline for MCP adoption?

ROI timelines vary significantly based on use case complexity and implementation scope. Organizations with complex multi-system integration requirements typically see break-even periods of 18-24 months, with benefits accelerating as additional integrations are implemented. Simple use cases may achieve ROI within 6-12 months, while enterprise-wide deployments may require 2-3 years to fully realize benefits. The key factors affecting ROI are integration complexity, development expertise, and scale of deployment.
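One simple way to estimate a break-even month is to divide the upfront cost by the net monthly benefit, which is the monthly benefit minus the ongoing running cost, and round up. This model assumes a constant benefit, so it ignores the acceleration that comes from adding integrations over time. The figures in the usage example are hypothetical and are not estimates from this article.

```python
import math
from typing import Optional


def break_even_month(upfront_cost: float, monthly_benefit: float,
                     monthly_run_cost: float) -> Optional[int]:
    """First whole month in which cumulative net benefit covers the upfront cost.

    Returns None if the integration never pays for itself (net benefit <= 0).
    """
    net_monthly = monthly_benefit - monthly_run_cost
    if net_monthly <= 0:
        return None
    return math.ceil(upfront_cost / net_monthly)


if __name__ == "__main__":
    # Hypothetical figures: 240k upfront, 20k/month benefit, 5k/month to run.
    print(break_even_month(240_000, 20_000, 5_000))
```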

5. What are the implications of MCP adoption for existing AI and integration investments?

MCP adoption doesn't necessarily obsolete existing investments. Organizations can implement MCP for new projects while maintaining existing integrations until they require updates. The key is designing abstraction layers that enable gradual migration to MCP without disrupting working systems. Legacy integrations can coexist with MCP implementations, and some traditional APIs may be more appropriate for certain use cases than MCP.
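An abstraction layer of this kind can be as small as an interface that agent code depends on, with legacy and MCP-backed implementations selected by a feature flag. The sketch below is illustrative only. `LegacyRestLookup` and `McpLookup` are hypothetical stubs that return canned strings in place of a real REST client and a real MCP client.

```python
from typing import Protocol


class OrderLookup(Protocol):
    """Interface the agent depends on, independent of how data is fetched."""

    def get_status(self, order_id: str) -> str:
        ...


class LegacyRestLookup:
    """Existing integration; hypothetical stub for a direct REST client."""

    def get_status(self, order_id: str) -> str:
        return f"legacy:{order_id}:shipped"


class McpLookup:
    """New path; hypothetical stub for a client issuing MCP tools/call requests."""

    def get_status(self, order_id: str) -> str:
        return f"mcp:{order_id}:shipped"


def make_lookup(use_mcp: bool) -> OrderLookup:
    """Feature flag choosing the backend, so migration can proceed per use case."""
    return McpLookup() if use_mcp else LegacyRestLookup()


def answer(lookup: OrderLookup, order_id: str) -> str:
    # Agent code only sees the interface, so swapping backends needs no change here.
    status = lookup.get_status(order_id).split(":")[-1]
    return f"Order {order_id} status: {status}"


if __name__ == "__main__":
    print(answer(make_lookup(use_mcp=True), "12345"))
```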

6. How does MCP adoption affect compliance with data protection regulations?

MCP compliance with regulations like GDPR, HIPAA, and SOX requires careful implementation of data handling, audit logging, and access controls. Current MCP implementations often lack comprehensive compliance features, requiring custom development. Organizations in regulated industries should wait for more mature compliance frameworks or implement comprehensive custom controls. Key requirements include data processing transparency, audit trails, user consent management, and data breach notification capabilities.
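The audit-trail requirement can be illustrated with a decorator that records who called which tool, when, and whether the call succeeded. This is a sketch that assumes an in-memory log. It records argument names but not values, as one data-minimization choice. A real deployment would need durable, tamper-evident storage and retention rules, and `lookup_customer_record` is a hypothetical tool.

```python
import functools
from datetime import datetime, timezone
from typing import Any, Callable

# In-memory sink for illustration only.
AUDIT_LOG: list[dict[str, Any]] = []


def audited(tool_name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Decorator recording caller, tool, timestamp, and outcome for every call."""
    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(func)
        def wrapper(user_id: str, **kwargs: Any) -> Any:
            entry: dict[str, Any] = {
                "timestamp": datetime.now(timezone.utc).isoformat(),
                "user_id": user_id,
                "tool": tool_name,
                # Log argument names only, not values, to avoid copying personal data.
                "argument_keys": sorted(kwargs),
            }
            try:
                result = func(**kwargs)
                entry["outcome"] = "success"
                return result
            except Exception as exc:
                entry["outcome"] = f"error: {type(exc).__name__}"
                raise
            finally:
                # Failed calls are recorded too, which is what an audit trail needs.
                AUDIT_LOG.append(entry)
        return wrapper
    return decorator


@audited("lookup_customer_record")
def lookup_customer_record(customer_id: str) -> dict[str, str]:
    # Hypothetical tool body.
    if not customer_id:
        raise ValueError("customer_id required")
    return {"customer_id": customer_id, "status": "active"}


if __name__ == "__main__":
    lookup_customer_record("agent-7", customer_id="c-42")
    print(AUDIT_LOG[-1])
```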

7. What are the recommended approaches for training technical teams on MCP?

MCP training should cover protocol fundamentals, security best practices, implementation patterns, and operational procedures. Start with foundational training on JSON-RPC, AI integration concepts, and modern security practices. Provide hands-on experience with pilot projects and vendor solutions. Engage with the MCP community through documentation, forums, and open source projects. Consider vendor training programs and professional services for enterprise deployments. Maintain ongoing education as the protocol evolves.

8. How should organizations prepare for MCP adoption without immediate implementation?

Organizations can prepare for MCP adoption by developing relevant technical expertise, implementing compatible security frameworks, designing modular architectures that facilitate future migration, evaluating vendor options and establishing relationships, and identifying priority use cases and business requirements. This preparation reduces implementation risks and accelerates deployment when timing becomes optimal.

9. What are the disaster recovery and business continuity implications of MCP adoption?

MCP disaster recovery requires planning for server availability, connection recovery, and data consistency across distributed systems. The persistent connection model creates different failure modes than stateless APIs. Organizations should implement comprehensive monitoring, automated failover capabilities, and connection recovery mechanisms. Business continuity planning should address scenarios where MCP servers become unavailable and how AI systems will operate in degraded modes.
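Connection recovery and degraded-mode operation can be combined in one wrapper. It retries a call to an MCP server with exponential backoff and jitter, then falls back to a degraded answer if the server stays unavailable. This is a generic sketch rather than any MCP SDK's built-in behavior. It assumes connection failures surface as `ConnectionError`, and the fallback (for example, cached data or a handoff to a human) is up to you.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_recovery(
    call: Callable[[], T],
    fallback: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry with exponential backoff plus jitter, then degrade to a fallback."""
    for attempt in range(max_attempts):
        try:
            return call()
        except ConnectionError:
            if attempt == max_attempts - 1:
                break
            # Delay doubles each attempt (0.5s, 1s, 2s, ...); random jitter keeps
            # many clients from reconnecting in lockstep after an outage.
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay / 2))
    # Server still unavailable: operate in degraded mode instead of failing outright.
    return fallback()


if __name__ == "__main__":
    def server_down() -> str:
        raise ConnectionError("MCP server unreachable")

    print(call_with_recovery(server_down, lambda: "cached answer", base_delay=0.01))
```

The `sleep` parameter can be injected, which lets the retry schedule be tested without real waiting.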