
Sage 200 is a comprehensive business management solution designed for medium-sized enterprises, offering strong accounting, CRM, supply chain management, and business intelligence capabilities. Its API ecosystem enables developers to automate critical business operations, synchronize data across systems, and build custom applications that extend Sage 200's functionality.

The Sage 200 API provides a structured, secure framework for integrating with external applications, supporting everything from basic data synchronization to complex workflow automation.

In this blog, you'll learn how to integrate with the Sage 200 API, from initial setup and authentication to practical implementation strategies and best practices.

Sage 200 serves as the operational backbone for growing businesses, providing end-to-end visibility and control over business processes.

Sage 200 has become essential for medium-sized enterprises seeking integrated business management by providing a unified platform that connects all operational areas, enabling data-driven decision-making and streamlined processes.

Sage 200 breaks down departmental silos by connecting finance, sales, inventory, and operations into a single system. This integration eliminates duplicate data entry, reduces errors, and provides a 360-degree view of business performance.

Designed for growing businesses, Sage 200 scales with organizational needs, supporting multiple companies, currencies, and locations. Its modular structure allows businesses to start with core financials and add capabilities as they expand.

With built-in analytics and customizable dashboards, Sage 200 provides immediate insights into key performance indicators, cash flow, inventory levels, and customer behavior, empowering timely business decisions.

Sage 200 includes features for tax compliance, audit trails, and financial reporting standards, helping businesses meet regulatory requirements across different jurisdictions and industries.

Through its API and development tools, Sage 200 can be tailored to specific industry needs and integrated with specialized applications, providing flexibility without compromising core functionality.

Before integrating with the Sage 200 API, it's important to understand key concepts that define how data access and communication work within the Sage ecosystem.

The Sage 200 API enables businesses to connect their ERP system with e-commerce platforms, CRM systems, payment gateways, and custom applications. These integrations automate workflows, improve data accuracy, and create seamless operational experiences.

Below are some of the most impactful Sage 200 integration scenarios and how they can transform your business processes.

Online retailers using platforms like Shopify, Magento, or WooCommerce need to synchronize orders, inventory, and customer data with their ERP system. By integrating your e-commerce platform with Sage 200 API, orders can flow automatically into Sage for processing, fulfillment, and accounting.

How It Works:

Sales teams using CRM systems like Salesforce or Microsoft Dynamics need access to customer financial data, order history, and credit limits. Integrating CRM with Sage 200 ensures sales representatives have complete customer visibility.

How It Works:

Manufacturing and distribution companies need to coordinate with suppliers through procurement portals or vendor management systems. Sage 200 API integration automates purchase order creation, goods receipt, and supplier payment processes.

How It Works:

Organizations with multiple subsidiaries or complex group structures need consolidated financial reporting. Sage 200 API enables automated data extraction for consolidation tools and business intelligence platforms.

How It Works:

Field sales and service teams need mobile access to customer data, inventory availability, and order processing capabilities. Sage 200 API powers mobile applications for on-the-go business operations.

How It Works:

Financial teams spend significant time matching bank transactions with accounting entries. Integrating banking platforms with Sage 200 automates this process, improving accuracy and efficiency.

How It Works:

Sage 200 API uses token-based authentication to secure access to business data:

Implementation examples and detailed configuration are available in the Sage 200 Authentication Guide.

Before making API requests, you need to obtain authentication credentials. Sage 200 supports multiple authentication methods depending on your deployment (cloud or on-premise) and integration requirements.

Step 1: Register your application in the Sage Developer Portal. Create a new application and note your Client ID and Client Secret.

Step 2: Configure OAuth 2.0 redirect URIs and requested scopes based on the data your application needs to access.

Step 3: Implement the OAuth 2.0 authorization code flow:

Step 4: Refresh tokens automatically before expiry to maintain seamless access.
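The authorization code flow in the steps above can be sketched in Python as follows. This is a minimal illustration, not an official client: it reuses the Sage ID endpoints, audience, and scopes listed later in this guide, and the helper names (`build_authorize_url`, `build_token_request`, `build_refresh_request`) are hypothetical. The functions only build the URL and form fields; sending them is left to whichever HTTP client you use.

```python
import secrets
from urllib.parse import quote, urlencode

# Endpoints as listed later in this guide.
AUTHORIZE_URL = "https://id.sage.com/authorize"
TOKEN_URL = "https://id.sage.com/oauth/token"


def build_authorize_url(client_id, redirect_uri, state=None):
    """Return (url, state) for the browser redirect that starts the flow."""
    # A random state value lets you verify the redirect that comes back.
    state = state or secrets.token_urlsafe(16)
    params = {
        "audience": "s200ukipd/sage200",
        "client_id": client_id,
        "response_type": "code",
        "redirect_uri": redirect_uri,
        "scope": "openid profile email offline_access",
        "state": state,
    }
    return f"{AUTHORIZE_URL}?{urlencode(params, quote_via=quote)}", state


def build_token_request(client_id, client_secret, redirect_uri, code):
    """Form fields to POST to TOKEN_URL when exchanging the authorization code."""
    return {
        "grant_type": "authorization_code",
        "client_id": client_id,
        "client_secret": client_secret,
        "redirect_uri": redirect_uri,
        "code": code,
    }


def build_refresh_request(client_id, client_secret, refresh_token):
    """Form fields to POST to TOKEN_URL when refreshing an access token."""
    return {
        "grant_type": "refresh_token",
        "client_id": client_id,
        "client_secret": client_secret,
        "refresh_token": refresh_token,
    }
```

When the user is redirected back, compare the returned `state` with the one generated here before exchanging the code.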

Step 1: Enable web services in the Sage 200 system administration and configure appropriate security settings.

Step 2: Use basic authentication or Windows authentication, depending on your security configuration:

Authorization: Basic {base64_encoded_credentials}

Step 3: For SOAP services, configure WS-Security headers as required by your deployment.

Step 4: Test connectivity using Sage 200's built-in web service test pages before proceeding with custom development.

Detailed authentication guides are available in the Sage 200 Authentication Documentation.

Integrating with the Sage 200 API may seem complex at first, but breaking the process into clear steps makes it much easier. This guide walks you through everything from registering your application to deploying it in production. It focuses mainly on Sage 200 Standard (cloud), which uses OAuth 2.0 and has the API enabled by default, with notes included for Sage 200 Professional (on-premise or hosted) where applicable.

Before making any API calls, you need to register your application with Sage to get a Client ID (and Client Secret for web/server applications).

Step 1: Submit the official Sage 200 Client ID and Client Secret Request Form.

Step 2: Sage will process your request (typically within 72 hours) and email you the Client ID and Client Secret (for confidential clients).

Step 3: Store these credentials securely, and never expose the Client Secret in client-side code.

✅ At this stage, you have the credentials needed for authentication.

Sage 200 uses OAuth 2.0 Authorization Code Flow with Sage ID for secure, token-based access.

Steps to Implement the Flow:

1. Redirect User to Authorization Endpoint (Ask for Permission):

GET https://id.sage.com/authorize?

audience=s200ukipd/sage200&

client_id={YOUR_CLIENT_ID}&

response_type=code&

redirect_uri={YOUR_REDIRECT_URI}&

scope=openid%20profile%20email%20offline_access&

state={RANDOM_STATE_STRING}

2. User logs in with their Sage ID and consents to access.

3. Sage redirects back to your redirect_uri with a code:

{YOUR_REDIRECT_URI}?code={AUTHORIZATION_CODE}&state={YOUR_STATE}

4. Exchange Code for Tokens:

POST https://id.sage.com/oauth/token

Content-Type: application/x-www-form-urlencoded

client_id={YOUR_CLIENT_ID}

&client_secret={YOUR_CLIENT_SECRET} // Only for confidential clients

&redirect_uri={YOUR_REDIRECT_URI}

&code={AUTHORIZATION_CODE}

&grant_type=authorization_code

5. Refresh Token When Needed:

POST https://id.sage.com/oauth/token

Content-Type: application/x-www-form-urlencoded

client_id={YOUR_CLIENT_ID}

&client_secret={YOUR_CLIENT_SECRET}

&refresh_token={YOUR_REFRESH_TOKEN}

&grant_type=refresh_token

Sage 200 organizes data by sites and companies. You need their IDs for most requests.

Steps:

1. Call the sites endpoint (no X-Site/X-Company headers needed here):

Headers:

Authorization: Bearer {ACCESS_TOKEN}

Content-Type: application/json

2. Response lists available sites with site_id, site_name, company_id, etc. Note the ones you need.
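As a rough sketch of how the sites response feeds later calls, the Python below picks one entry and turns it into the headers that other endpoints expect. It assumes the decoded response is a list of objects carrying the `site_id`, `site_name`, and `company_id` fields mentioned above; the helper names and the sample values are hypothetical.

```python
def pick_site(sites, site_name):
    """Return the first site entry whose site_name matches, or None."""
    for site in sites:
        if site.get("site_name") == site_name:
            return site
    return None


def build_headers(access_token, site):
    """Build the headers sent with requests to site-specific endpoints."""
    return {
        "Authorization": f"Bearer {access_token}",
        "X-Site": str(site["site_id"]),
        "X-Company": str(site["company_id"]),
        "Content-Type": "application/json",
    }


# Illustrative data only; real IDs come from the sites endpoint.
sample_sites = [
    {"site_id": "site-1", "site_name": "Demo Site", "company_id": 1},
    {"site_id": "site-2", "site_name": "Live Site", "company_id": 7},
]
headers = build_headers("ACCESS_TOKEN", pick_site(sample_sites, "Live Site"))
```

Caching the chosen IDs avoids calling the sites endpoint before every request.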

Sage 200 API is fully RESTful with OData v4 support for querying.

Key Features:

No SOAP Support in Current API - It's all modern REST/JSON.

All requests require:

Authorization: Bearer {ACCESS_TOKEN}

X-Site: {SITE_ID}

X-Company: {COMPANY_ID}

Content-Type: application/json

Use Case 1: Fetching Customers (GET)

GET https://api.columbus.sage.com/uk/sage200/accounts/v1/customers?$top=10

Response Example (Partial):

[

{

"id": 27828,

"reference": "ABS001",

"name": "ABS Garages Ltd",

"balance": 2464.16,

...

}

]

Use Case 2: Creating a Customer (POST)

POST https://api.columbus.sage.com/uk/sage200/accounts/v1/customers

Body:

{

"reference": "NEW001",

"name": "New Customer Ltd",

"short_name": "NEW001",

"credit_limit": 5000.00,

...

}

Success: Returns 201 Created with the new customer object.
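The two use cases above could be prepared in Python along these lines. This is a sketch under assumptions: the base URL and field names come from the examples above, `$skip` is the standard OData paging option and is assumed to be supported here, and defaulting `short_name` to the reference simply mirrors the sample body. The helper names are hypothetical.

```python
from urllib.parse import quote, urlencode

BASE_URL = "https://api.columbus.sage.com/uk/sage200/accounts/v1"


def customers_url(top=None, skip=None):
    """Build the customers URL with optional OData paging options."""
    params = {}
    if top is not None:
        params["$top"] = top
    if skip is not None:
        params["$skip"] = skip
    # Keep "$" unescaped so the option names stay readable.
    query = urlencode(params, quote_via=quote, safe="$")
    return f"{BASE_URL}/customers" + (f"?{query}" if query else "")


def new_customer_payload(reference, name, credit_limit, short_name=None):
    """Build the JSON body for creating a customer."""
    return {
        "reference": reference,
        "name": name,
        "short_name": short_name or reference,
        "credit_limit": credit_limit,
    }
```

Send the payload as JSON with the same Authorization, X-Site, and X-Company headers used for the GET request.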

1. Use Development Credentials from your registration.

2. Test with a demo or non-production site (request via your Sage partner if needed).

3. Tools:

4. Test scenarios: Create/read/update/delete key entities (customers, orders), error handling, token refresh.

5. Monitor responses for errors (e.g., 401 for invalid token).

Building reliable Sage 200 integrations requires understanding platform capabilities and limitations. Following these best practices ensures optimal performance and maintainability.

Sage 200 APIs have practical limits on data volume per request. For large data transfers:

Implement robust error handling:

Ensure data consistency between systems:

Protect sensitive business data:

Choose the right approach for each integration scenario:

Integrating directly with Sage 200 API requires handling complex authentication, data mapping, error handling, and ongoing maintenance. Knit simplifies this by providing a unified integration platform that connects your application to Sage 200 and dozens of other business systems through a single, standardized API.

Instead of writing separate integration code for each ERP system (Sage 200, SAP Business One, Microsoft Dynamics, NetSuite), Knit provides a single Unified ERP API. Your application connects once to Knit and can instantly work with multiple ERP systems without additional development.

Knit automatically handles the differences between systems—different authentication methods, data models, API conventions, and business rules—so you don't have to.

Sage 200 authentication varies by deployment (cloud vs. on-premise) and requires ongoing token management. Knit's pre-built Sage 200 connector handles all authentication complexities:

Your application interacts with a simple, consistent authentication API regardless of the underlying Sage 200 configuration.

Every ERP system has different data models. Sage 200's customer structure differs from SAP's, which differs from NetSuite's. Knit solves this with a Unified Data Model that normalizes data across all supported systems.

When you fetch customers from Sage 200 through Knit, they're automatically transformed into a consistent schema. When you create an order, Knit transforms it from the unified model into Sage 200's specific format. This eliminates the need for custom mapping logic for each integration.

Polling Sage 200 for changes is inefficient and can impact system performance. Knit provides real-time webhooks that notify your application immediately when data changes in Sage 200:

This event-driven approach ensures your application always has the latest data without constant polling.

Building and maintaining a direct Sage 200 integration typically takes months of development and ongoing maintenance. With Knit, you can build a complete integration in days:

Your team can focus on core product functionality instead of integration maintenance.

A. Sage 200 provides API support for both cloud and on-premise versions. The cloud API is generally more feature-rich and follows standard REST/OData patterns. On-premise versions may have limitations based on the specific release.

A. Yes, Sage 200 supports webhooks for certain events, particularly in cloud deployments. You can subscribe to notifications for created, updated, or deleted records. Configuration is done through the Sage 200 administration interface or API. Not all object types support webhooks, so check the specific documentation for your requirements.

A. Sage 200 Cloud enforces API rate limits to ensure system stability:

On-premise deployments may have different limits based on server capacity and configuration. Implement retry logic with exponential backoff to handle rate limit responses gracefully.
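A minimal sketch of retry with exponential backoff is shown below. It assumes rate-limited responses arrive with HTTP status 429, and `send` is a hypothetical callable that performs one request and returns a `(status, body)` pair; adapt both to your HTTP client.

```python
import random
import time


def call_with_backoff(send, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call send() until it returns a status other than 429 or attempts run out."""
    status, body = None, None
    for attempt in range(max_attempts):
        status, body = send()
        if status != 429:
            return status, body
        if attempt < max_attempts - 1:
            # Double the wait each time and add jitter so clients do not retry in lockstep.
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)
    return status, body
```

Passing `sleep` as a parameter keeps the function easy to test without real waiting.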

A. Yes, Sage provides several options for testing:

A. Sage 200 APIs provide detailed error responses, including:

Enable detailed logging in your integration code and monitor both application logs and Sage 200's audit trails for comprehensive troubleshooting.

A. You can use any programming language that supports HTTP requests and JSON parsing. Sage provides SDKs and examples for:

Community-contributed libraries may be available for other languages. The REST/OData API ensures broad language compatibility.

A. For large data operations:

A. Multiple support channels are available:


Jira is one of those tools that quietly powers the backbone of how teams work—whether you're NASA tracking space-bound bugs or a startup shipping sprints on Mondays. Over 300,000 companies use it to keep projects on track, and it’s not hard to see why.

This guide is meant to help you get started with Jira’s API—especially if you’re looking to automate tasks, sync systems, or just make your project workflows smoother. Whether you're exploring an integration for the first time or looking to go deeper with use cases, we’ve tried to keep things simple, practical, and relevant.

At its core, Jira is a powerful tool for tracking issues and managing projects. The Jira API takes that one step further—it opens up everything under the hood so your systems can talk to Jira automatically.

Think of it as giving your app the ability to create tickets, update statuses, pull reports, and tweak workflows—without anyone needing to click around. Whether you're building an integration from scratch or syncing data across tools, the API is how you do it.

It’s well-documented, RESTful, and gives you access to all the key stuff: issues, projects, boards, users, workflows—you name it.

Chances are, your customers are already using Jira to manage bugs, tasks, or product sprints. By integrating with it, you let them:

It’s a win-win. Your users save time by avoiding duplicate work, and your app becomes a more valuable part of their workflow. Plus, once you set up the integration, you open the door to a ton of automation—like auto-updating statuses, triggering alerts, or even creating tasks based on events from your product.

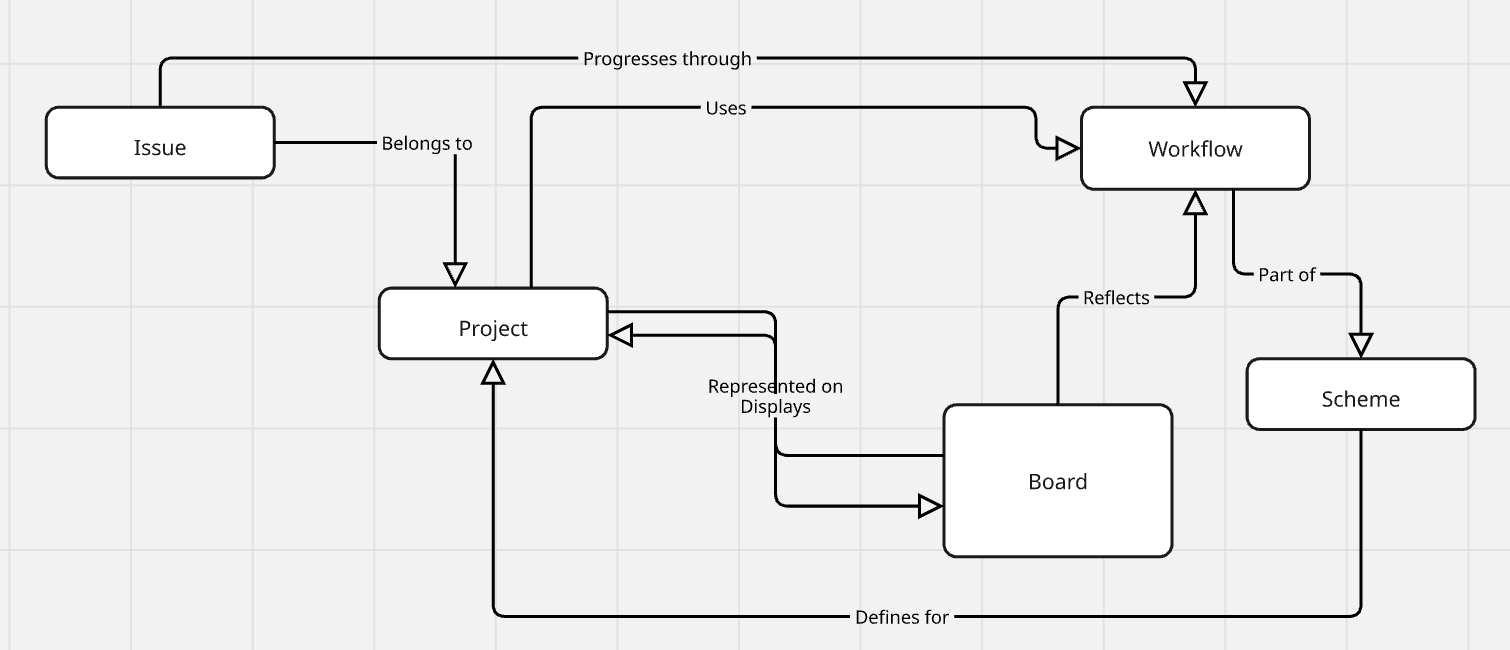

Before you dive into the API calls, it's helpful to understand how Jira is structured. Here are some basics:

Each of these maps to specific API endpoints. Knowing how they relate helps you design cleaner, more effective integrations.

To start building with the Jira API, here’s what you’ll want to have set up:

If you're using Jira Cloud, you're working with the latest API. If you're on Jira Server/Data Center, there might be a few quirks and legacy differences to account for.

Before you point anything at production, set up a test instance of Jira Cloud. It’s free to try and gives you a safe place to break things while you build.

You can:

Testing in a sandbox means fewer headaches down the line—especially when things go wrong (and they sometimes will).

The official Jira API documentation is your best friend when starting an integration. It's hosted by Atlassian and offers granular details on endpoints, request/response bodies, and error messages. Use the interactive API explorer and bookmark sections such as Authentication, Issues, and Projects to make your development process efficient.

Jira supports several different ways to authenticate API requests. Let’s break them down quickly so you can choose what fits your setup.

Basic authentication is now deprecated but may still be used for legacy systems. It consists of passing a username and password with every request. While easy, it does not have strong security features, hence the phasing out.

OAuth 1.0a has been replaced by more secure protocols. It was previously used for authorization but is now phased out due to security concerns.

For most modern Jira Cloud integrations, API tokens are your best bet. Here’s how you use them:

It’s simple, secure, and works well for most use cases.
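For illustration, here is roughly what API-token authentication looks like on the wire: the account email and token are joined with a colon, Base64-encoded, and sent as a Basic `Authorization` header. The helper name is hypothetical and the credentials are placeholders.

```python
import base64


def basic_auth_header(email, api_token):
    """Build request headers that authenticate with an email and API token."""
    raw = f"{email}:{api_token}".encode("utf-8")
    encoded = base64.b64encode(raw).decode("ascii")
    return {
        "Authorization": f"Basic {encoded}",
        "Accept": "application/json",
    }


headers = basic_auth_header("email@example.com", "API_TOKEN")
```

Most HTTP clients build this header for you, for example `auth=(email, api_token)` in `requests` or `--user` in curl.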

If your app needs to access Jira on behalf of users (with their permission), you’ll want to go with 3-legged OAuth. You’ll:

It’s a bit more work upfront, but it gives you scoped, permissioned access.

If you're building apps *inside* the Atlassian ecosystem, you'll either use:

Both offer deeper integrations and more control, but require additional setup.

Whichever method you use, make sure:

A lot of issues during integration come down to misconfigured auth—so double-check before you start debugging the code.

Once you're authenticated, one of the first things you’ll want to do is start interacting with Jira issues. Here’s how to handle the basics: create, read, update, delete (aka CRUD).

To create a new issue, you’ll need to call the `POST /rest/api/3/issue` endpoint with a few required fields:

{

"fields": {

"project": { "key": "PROJ" },

"issuetype": { "name": "Bug" },

"summary": "Something’s broken!",

"description": "Details about the bug go here."

}

}

At a minimum, you need the project key, issue type, and summary. The rest, such as description, labels, and custom fields, is optional but useful.
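One detail worth knowing when building this payload in code: version 3 of the API expects rich-text fields such as `description` in Atlassian Document Format (ADF) rather than as a plain string, while version 2 accepts plain text. The sketch below wraps a description in a minimal ADF document; the helper names are hypothetical.

```python
def adf_paragraph(text):
    """Wrap plain text in a minimal Atlassian Document Format document."""
    return {
        "type": "doc",
        "version": 1,
        "content": [
            {"type": "paragraph", "content": [{"type": "text", "text": text}]}
        ],
    }


def build_issue_payload(project_key, issue_type, summary, description=None, labels=None):
    """Build the body for POST /rest/api/3/issue."""
    if not project_key or not issue_type or not summary:
        raise ValueError("project key, issue type, and summary are required")
    fields = {
        "project": {"key": project_key},
        "issuetype": {"name": issue_type},
        "summary": summary,
    }
    if description:
        fields["description"] = adf_paragraph(description)
    if labels:
        fields["labels"] = list(labels)
    return {"fields": fields}
```

Validating the required fields before sending saves a round trip when the input is incomplete.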

Make sure to log the responses so you can debug if anything fails. And yes, retry logic helps if you hit rate limits or flaky network issues.

To fetch an issue, use a GET request:

GET /rest/api/3/issue/{issueIdOrKey}

You’ll get back a JSON object with all the juicy details: summary, description, status, assignee, comments, history, etc.

It’s pretty handy if you’re syncing with another system or building a custom dashboard.

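Need to change an issue’s fields, add a comment, or move it to a new status? Use `PUT /rest/api/3/issue/{issueIdOrKey}` to edit fields such as priority; only the fields included in the request are changed. Status changes go through the transitions endpoint, and comments have their own endpoint.

A common use case is adding a comment with `POST /rest/api/3/issue/{issueIdOrKey}/comment`. The body is shown here as a plain string, which version 2 of the API accepts; version 3 expects it in Atlassian Document Format: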

{

"body": "Following up on this issue—any updates?"

}

Make sure to avoid overwriting fields unintentionally. Always double-check what you're sending in the payload.

Deleting issues is irreversible. Only do it if you're absolutely sure—and always ensure your API token has the right permissions.

It’s best practice to:

- Confirm the issue should be deleted (maybe with a soft-delete flag first)

- Keep an audit trail somewhere

- Handle deletion errors gracefully

Jira comes with a powerful query language called JQL (Jira Query Language) that lets you search for precise issues.

Want all open bugs assigned to a specific user? Or tasks due this week? JQL can help with that.

Example: project = PROJ AND status = "In Progress" AND assignee = currentUser()

When using the search API, don’t forget to paginate: GET /rest/api/3/search?jql=yourQuery&startAt=0&maxResults=50

This helps when you're dealing with hundreds (or thousands) of issues.
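A paging loop over the search results might look like the sketch below. It assumes each response page carries an `issues` list and a `total` count, as offset-based search responses do, and `fetch_page` is a hypothetical callable that wraps the actual GET request with `startAt` and `maxResults`.

```python
def iter_issues(fetch_page, jql, page_size=50):
    """Yield every issue matching jql, requesting one page at a time."""
    start_at = 0
    while True:
        page = fetch_page(jql=jql, start_at=start_at, max_results=page_size)
        issues = page.get("issues", [])
        yield from issues
        start_at += len(issues)
        # Stop on an empty page or once every reported result has been seen.
        if not issues or start_at >= page.get("total", 0):
            break
```

Because it is a generator, callers can stop early without fetching the remaining pages.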

The API also allows you to create and manage Jira projects. This is especially useful for automating new customer onboarding.

Use the `POST /rest/api/3/project` endpoint to create a new project, and pass in details like the project key, name, lead, and template.

You can also update project settings and connect them to workflows, issue type schemes, and permission schemes.

If your customers use Jira for agile, you’ll want to work with boards and sprints.

Here’s what you can do with the API:

- Fetch boards (`GET /board`)

- Retrieve or create sprints

- Move issues between sprints

It helps sync sprint timelines or mirror status in an external dashboard.

Jira Workflows define how an issue moves through statuses. You can:

- Get available transitions (`GET /issue/{key}/transitions`)

- Perform a transition (`POST /issue/{key}/transitions`)

This lets you automate common flows like moving an issue to "In Review" after a pull request is merged.
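Transition IDs differ between workflows, so a common pattern is to look the ID up by name before posting. The sketch below assumes the GET response contains a `transitions` list whose entries have `id` and `name`; the helper names and sample data are hypothetical.

```python
def find_transition_id(transitions_response, transition_name):
    """Return the ID of the transition with the given name, or None."""
    wanted = transition_name.lower()
    for transition in transitions_response.get("transitions", []):
        if transition.get("name", "").lower() == wanted:
            return transition["id"]
    return None


def build_transition_payload(transition_id):
    """Build the body for POST /issue/{key}/transitions."""
    return {"transition": {"id": transition_id}}


# Illustrative response; real IDs depend on the project's workflow.
sample = {"transitions": [
    {"id": "21", "name": "In Progress"},
    {"id": "31", "name": "In Review"},
]}
payload = build_transition_payload(find_transition_id(sample, "in review"))
```

If the lookup returns None, the transition is not available from the issue's current status, which is worth logging rather than retrying.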

Jira’s API has some nice extras that help you build smarter, more responsive integrations.

You can link related issues (like blockers or duplicates) via the API. Handy for tracking dependencies or duplicate reports across teams.

Example:

{

"type": { "name": "Blocks" },

"inwardIssue": { "key": "PROJ-101" },

"outwardIssue": { "key": "PROJ-102" }

}

Always validate the link type you're using and make sure it fits your project config.

Need to upload logs, screenshots, or files? Use the attachments endpoint with a multipart/form-data request.

Just remember:

Want your app to react instantly when something changes in Jira? Webhooks are the way to go.

You can subscribe to events like issue creation, status changes, or comments. When triggered, Jira sends a JSON payload to your endpoint.
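On the receiving side, a handler usually reduces the payload to the few fields it needs. The sketch below assumes the payload includes `webhookEvent`, an `issue` object with a `key`, and, for updates, a `changelog` with `items` describing each changed field; the function name and sample payload are hypothetical.

```python
def summarize_event(payload):
    """Reduce a webhook payload to the fields a sync job typically needs."""
    issue = payload.get("issue", {})
    status_change = None
    for item in payload.get("changelog", {}).get("items", []):
        if item.get("field") == "status":
            status_change = (item.get("fromString"), item.get("toString"))
    return {
        "event": payload.get("webhookEvent"),
        "issue_key": issue.get("key"),
        "status_change": status_change,
    }


# Illustrative payload only.
sample_payload = {
    "webhookEvent": "jira:issue_updated",
    "issue": {"key": "PROJ-123"},
    "changelog": {"items": [
        {"field": "status", "fromString": "To Do", "toString": "In Progress"},
    ]},
}
summary = summarize_event(sample_payload)
```

Using `.get` throughout keeps the handler from failing on event types that omit some of these sections.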

Make sure to:

Understanding the differences between Jira Cloud and Jira Server is critical:

Keep updated with the latest changes by monitoring Atlassian’s release notes and documentation.

Even with the best setup, things can (and will) go wrong. Here’s how to prepare for it.

Jira’s API gives back standard HTTP response codes. Some you’ll run into often:

Always log error responses with enough context (request, response body, endpoint) to debug quickly.

Jira Cloud has built-in rate limiting to prevent abuse. It’s not always published in detail, but here’s how to handle it safely:

If you’re building a high-throughput integration, test with realistic volumes and plan for throttling.

To make your integration fast and reliable:

These small tweaks go a long way in keeping your integration snappy and stable.

Getting visibility into your integration is just as important as writing the code. Here's how to keep things observable and testable.

Solid logging = easier debugging. Here's what to keep in mind:

If something breaks, good logs can save hours of head-scratching.

When you’re trying to figure out what’s going wrong:

Also, if your app has logs tied to user sessions or sync jobs, make those searchable by ID.

Testing your Jira integration shouldn’t be an afterthought. It keeps things reliable and easy to update.

The goal is to have confidence in every deploy—not to ship and pray.

Let’s look at a few examples of what’s possible when you put it all together:

Trigger issue creation when a bug or support request is reported:

curl --request POST \

--url 'https://your-domain.atlassian.net/rest/api/3/issue' \

--user 'email@example.com:<api_token>' \

--header 'Accept: application/json' \

--header 'Content-Type: application/json' \

--data '{

"fields": {

"project": { "key": "PROJ" },

"issuetype": { "name": "Bug" },

"summary": "Bug in production",

"description": "A detailed bug report goes here."

}

}'

Read issue data from Jira and sync it to another tool:

curl -u email@example.com:API_TOKEN -X GET \
https://your-domain.atlassian.net/rest/api/3/issue/PROJ-123

Map fields like title, status, and priority, and push updates as needed.

Use a scheduled script to move overdue tasks to a "Stuck" column:

```python
import requests

jira_domain = "https://your-domain.atlassian.net"
api_token = "API_TOKEN"
email = "email@example.com"
auth = (email, api_token)
headers = {"Accept": "application/json"}

# Find overdue issues that are not yet done
jql = "project = PROJ AND due < now() AND status != 'Done'"
response = requests.get(
    f"{jira_domain}/rest/api/3/search",
    headers=headers,
    auth=auth,
    params={"jql": jql},
    timeout=30,
)
response.raise_for_status()  # stop early on auth or query errors

for issue in response.json().get("issues", []):
    issue_key = issue["key"]
    payload = {"transition": {"id": "31"}}  # Replace with correct transition ID
    result = requests.post(
        f"{jira_domain}/rest/api/3/issue/{issue_key}/transitions",
        headers=headers,
        auth=auth,
        json=payload,  # requests serializes the body and sets Content-Type
        timeout=30,
    )
    result.raise_for_status()
```

Automations like this can help keep boards clean and accurate.

Security's key, so let's keep it simple:

Think of API keys like passwords.

Secure secrets = less risk.

If you touch user data:

Quick tips to level up:

Libraries (Java, Python, etc.) can help with the basics.

Your call is based on your needs.

Automate testing and deployment.

Reliable integration = happy you.

If you’ve made it this far—nice work! You’ve got everything you need to build a powerful, reliable Jira integration. Whether you're syncing data, triggering workflows, or pulling reports, the Jira API opens up a ton of possibilities.

Here’s a quick checklist to recap:

Jira is constantly evolving, and so are the use cases around it. If you want to go further:

- Follow [Atlassian’s Developer Changelog]

- Explore the [Jira API Docs]

- Join the [Atlassian Developer Community]

And if you're building on top of Knit, we’re always here to help.

Drop us an email at hello@getknit.dev if you run into a use case that isn’t covered.

Happy building! 🙌


Sage Intacct API integration allows businesses to connect financial systems with other applications, enabling real-time data synchronization. Manual data transfers and outdated processes can lead to errors and missed opportunities. This guide explains how Sage Intacct API integration removes those pain points. We cover the technical setup, common issues, and how using Knit can cut down development time while ensuring a secure connection between your systems and Sage Intacct.

Sage Intacct API integration connects your financial and ERP systems with third-party applications, linking your financial information to the tools you use for reporting, budgeting, and analytics.

The Sage Intacct API documentation provides all the necessary information to integrate your systems with Sage Intacct’s financial services. It covers two main API protocols: REST and SOAP, each designed for different integration needs. REST is commonly used for web-based applications, offering a simple and flexible approach, while SOAP is preferred for more complex and secure transactions.

By following the guidelines, you can ensure a secure and efficient connection between your systems and Sage Intacct.

Integrating Sage Intacct with your existing systems offers a host of advantages.

Before you start the integration process, you should properly set up your environment. Proper setup creates a solid foundation and prevents most pitfalls.

A clear understanding of Sage Intacct’s account types and ecosystem is vital.

A secure environment protects your data and credentials.

Setting up authentication is crucial to secure the data flow.

An understanding of the different APIs and protocols is necessary to choose the best method for your integration needs.

Sage Intacct offers a flexible API ecosystem to fit diverse business needs.

The Sage Intacct REST API offers a clean, modern approach to integrating with Sage Intacct.

Curl request:

curl -i -X GET \
'https://api.intacct.com/ia/api/v1/objects/cash-management/bank-account/{key}' \
-H 'Authorization: Bearer <YOUR_TOKEN_HERE>'

Here’s a detailed reference to all the Sage Intacct REST API Endpoints.

For environments that need robust enterprise-level integration, the Sage Intacct SOAP API is a strong option.

Each operation is sent as an HTTP POST request with an XML body. For example, a read request to retrieve account details:

Parameters for request body:

<read>

<object>GLACCOUNT</object>

<keys>1</keys>

<fields>*</fields>

</read>

Data format for the response body:

Here’s a detailed reference to all the Sage Intacct SOAP API Endpoints.

Comparing SOAP versus REST for various scenarios:

Beyond the primary REST and SOAP APIs, Sage Intacct provides other modules to enhance integration.

Now that your environment is ready and you understand the API options, you can start building your integration.

A basic API call is the foundation of your integration.

Step-by-step guide for a basic API call using REST and SOAP:

REST Example:

Example:

Curl Request:

curl -i -X GET \

https://api.intacct.com/ia/api/v1/objects/accounts-receivable/customer \

-H 'Authorization: Bearer <YOUR_TOKEN_HERE>'

Response 200 (Success):

{

"ia::result": [

{

"key": "68",

"id": "CUST-100",

"href": "/objects/accounts-receivable/customer/68"

},

{

"key": "69",

"id": "CUST-200",

"href": "/objects/accounts-receivable/customer/69"

},

{

"key": "73",

"id": "CUST-300",

"href": "/objects/accounts-receivable/customer/73"

}

],

"ia::meta": {

"totalCount": 3,

"start": 1,

"pageSize": 100

}

}

Response 400 (Failure):

{

"ia::result": {

"ia::error": {

"code": "invalidRequest",

"message": "A POST request requires a payload",

"errorId": "REST-1028",

"additionalInfo": {

"messageId": "IA.REQUEST_REQUIRES_A_PAYLOAD",

"placeholders": {

"OPERATION": "POST"

},

"propertySet": {}

},

"supportId": "Kxi78%7EZuyXBDEGVHD2UmO1phYXDQAAAAo"

}

},

"ia::meta": {

"totalCount": 1,

"totalSuccess": 0,

"totalError": 1

}

}
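The two response shapes above can be handled with a small parser like the sketch below. It relies only on the `ia::result`, `ia::error`, and `ia::meta` keys shown in the examples; the function and exception names are hypothetical, and treating a single-object result as a one-item list is an assumption.

```python
class IntacctApiError(Exception):
    """Raised when a response body carries an ia::error object."""

    def __init__(self, code, message, error_id):
        super().__init__(f"{code} ({error_id}): {message}")
        self.code = code
        self.error_id = error_id


def parse_response(body):
    """Return (records, meta) from a decoded response body, or raise on error."""
    result = body.get("ia::result")
    meta = body.get("ia::meta", {})
    if isinstance(result, dict) and "ia::error" in result:
        error = result["ia::error"]
        raise IntacctApiError(error.get("code"), error.get("message"), error.get("errorId"))
    if isinstance(result, dict):
        return [result], meta  # assumed shape for single-object responses
    return list(result or []), meta
```

Including the `errorId` in the exception message makes it easier to match log entries to a specific failure.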

SOAP Example:

Example snippet of creating a reporting period:

<create>

<REPORTINGPERIOD>

<NAME>Month Ended January 2017</NAME>

<HEADER1>Month Ended</HEADER1>

<HEADER2>January 2017</HEADER2>

<START_DATE>01/01/2017</START_DATE>

<END_DATE>01/31/2017</END_DATE>

<BUDGETING>true</BUDGETING>

<STATUS>active</STATUS>

</REPORTINGPERIOD>

</create>

Using Postman for Testing and Debugging API Calls

Postman is a convenient tool for sending and checking API requests before you write integration code, which makes testing your Sage Intacct API integration more efficient.

You can import the Sage Intacct Postman collection, which has pre-configured endpoints, to test your API calls, see results in real time, and debug any issues.

Visualizing the responses this way makes errors easier to identify.

Mapping your business processes to API workflows makes integration smoother.

To test your Sage Intacct API integration, using Postman is recommended. You can import the Sage Intacct Postman collection and quickly make sample API requests to verify functionality. This allows for efficient testing before you begin full implementation.

Understanding real-world applications helps in visualizing the benefits of a well-implemented integration.

This section outlines examples from various sectors that have seen success with Sage Intacct integrations.

Industry

Joining a Sage Intacct partnership program can offer additional resources and support for your integration efforts.

The partnership program enhances your integration by offering technical and marketing support.

Different partnership tiers cater to varied business needs.

Following best practices ensures that your integration runs smoothly over time.

Manage API calls effectively to handle growth.

Security must remain a top priority.

Effective monitoring helps catch issues early.

No integration is without its challenges. This section covers common problems and how to fix them.

Prepare for and resolve typical issues quickly.

Effective troubleshooting minimizes downtime.

Long-term management of your integration is key to ongoing success.

Stay informed about changes to avoid surprises.

Ensure your integration remains robust as your business grows.

Knit offers a streamlined approach to integrating Sage Intacct. This section details how Knit simplifies the process.

Knit reduces the heavy lifting in integration tasks by offering pre-built accounting connectors in its Unified Accounting API.

This section provides a walk-through for integrating using Knit.

A sample table for mapping objects and fields can be included:

Knit eliminates many of the hassles associated with manual integration.

In this guide, we have walked you through the steps and best practices for integrating Sage Intacct via API. You have learned how to set up a secure environment, choose the right API option, map business processes, and overcome common challenges.

If you're ready to link Sage Intacct with your systems without the need for manual integration, it's time to discover how Knit can assist. Knit delivers customized, secure connectors and a simple interface that shortens development time and keeps maintenance low. Book a demo with Knit today to see firsthand how our solution addresses your integration challenges so you can focus on growing your business rather than worrying about technical roadblocks.


In today's AI-driven world, AI agents have become transformative tools, capable of executing tasks with unparalleled speed, precision, and adaptability. From automating mundane processes to providing hyper-personalized customer experiences, these agents are reshaping the way businesses function and how users engage with technology. However, their true potential lies beyond standalone functionalities—they thrive when integrated seamlessly with diverse systems, data sources, and applications.

This integration is not merely about connectivity; it’s about enabling AI agents to access, process, and act on real-time information across complex environments. Whether pulling data from enterprise CRMs, analyzing unstructured documents, or triggering workflows in third-party platforms, integration equips AI agents to become more context-aware, action-oriented, and capable of delivering measurable value.

This article explores how seamless integrations unlock the full potential of AI agents, the best practices to ensure success, and the challenges that organizations must overcome to achieve seamless and impactful integration.

The rise of Artificial Intelligence (AI) agents marks a transformative shift in how we interact with technology. AI agents are intelligent software entities capable of performing tasks autonomously, mimicking human behavior, and adapting to new scenarios without explicit human intervention. From chatbots resolving customer queries to sophisticated virtual assistants managing complex workflows, these agents are becoming integral across industries.

The growing use of AI agents has been attributed to factors such as:

AI agents are more than just software programs; they are intelligent systems capable of executing tasks autonomously by mimicking human-like reasoning, learning, and adaptability. Their functionality is built on two foundational pillars:

For optimal performance, AI agents require deep contextual understanding. This extends beyond familiarity with a product or service to include insights into customer pain points, historical interactions, and updates in knowledge. To equip AI agents with this contextual knowledge, they need access to a centralized knowledge base or data lake that consolidates information otherwise scattered across multiple systems, applications, and formats. This ensures they are working with the most relevant and up-to-date information. They also need access to new information as it arrives, such as product updates, evolving customer requirements, or changes in business processes, so that their outputs remain relevant and accurate.

For instance, an AI agent assisting a sales team must have access to CRM data, historical conversations, pricing details, and product catalogs to provide actionable insights during a customer interaction.

AI agents’ value lies not only in their ability to comprehend but also to act. For instance, AI agents can perform activities such as updating CRM records after a sales call, generating invoices, or creating tasks in project management tools based on user input or triggers. Similarly, AI agents can initiate complex workflows, such as escalating support tickets, scheduling appointments, or launching marketing campaigns. However, this requires seamless connectivity across different applications to facilitate action.

For example, an AI agent managing customer support could resolve queries by pulling answers from a knowledge base and, if necessary, escalating unresolved issues to a human representative with full context.

The capabilities of AI agents are undeniably remarkable. However, their true potential can only be realized when they seamlessly access contextual knowledge and take informed actions across a wide array of applications. This is where integrations play a pivotal role, serving as the key to bridging gaps and unlocking the full power of AI agents.

The effectiveness of an AI agent is directly tied to its ability to access and utilize data stored across diverse platforms. This is where integrations shine, acting as conduits that connect the AI agent to the wealth of information scattered across different systems. These data sources fall into several broad categories, each contributing uniquely to the agent's capabilities:

Platforms like databases, Customer Relationship Management (CRM) systems (e.g., Salesforce, HubSpot), and Enterprise Resource Planning (ERP) tools house structured data—clean, organized, and easily queryable. For example, CRM integrations allow AI agents to retrieve customer contact details, sales pipelines, and interaction histories, which they can use to personalize customer interactions or automate follow-ups.

The majority of organizational knowledge exists in unstructured formats, such as PDFs, Word documents, emails, and collaborative platforms like Notion or Confluence. Cloud storage systems like Google Drive and Dropbox add another layer of complexity, storing files without predefined schemas. Integrating with these systems allows AI agents to extract key insights from meeting notes, onboarding manuals, or research reports. For instance, an AI assistant integrated with Google Drive could retrieve and summarize a company’s annual performance review stored in a PDF document.

Real-time data streams from IoT devices, analytics tools, or social media platforms offer actionable insights that are constantly updated. AI agents integrated with streaming data sources can monitor metrics, such as energy usage from IoT sensors or engagement rates from Twitter analytics, and make recommendations or trigger actions based on live updates.

APIs from third-party services like payment gateways (Stripe, PayPal), logistics platforms (DHL, FedEx), and HR systems (BambooHR, Workday) expand the agent's ability to act across verticals. For example, an AI agent integrated with a payment gateway could automatically reconcile invoices, track payments, and even issue alerts for overdue accounts.

To process this vast array of data, AI agents rely on data ingestion—the process of collecting, aggregating, and transforming raw data into a usable format. Data ingestion pipelines ensure that the agent has access to a broad and rich understanding of the information landscape, enhancing its ability to make accurate decisions.

However, this capability requires robust integrations with a wide variety of third-party applications. Whether it's CRM systems, analytics tools, or knowledge repositories, each integration provides an additional layer of context that the agent can leverage.

Without these integrations, AI agents would be confined to static or siloed information, limiting their ability to adapt to dynamic environments. For example, an AI-powered customer service bot lacking integration with an order management system might struggle to provide real-time updates on a customer’s order status, resulting in a frustrating user experience.
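The ingestion step described above can be sketched as a small normalization pass. This is a minimal illustration, not a production pipeline: the two payload shapes (`Id`/`FirstName`/`Email` for a CRM, `requester_*` for a helpdesk) and the `Contact` schema are hypothetical and stand in for whatever your source systems actually return.

```python
from dataclasses import dataclass


@dataclass
class Contact:
    """Common schema the agent works with, regardless of source system."""
    source: str
    external_id: str
    name: str
    email: str


def from_crm(record: dict) -> Contact:
    # Hypothetical CRM payload shape; real field names depend on the vendor.
    name = f'{record["FirstName"]} {record["LastName"]}'.strip()
    return Contact("crm", str(record["Id"]), name, record["Email"].strip().lower())


def from_helpdesk(record: dict) -> Contact:
    # Hypothetical helpdesk payload shape.
    return Contact(
        "helpdesk",
        str(record["requester_id"]),
        record["requester_name"].strip(),
        record["requester_email"].strip().lower(),
    )


def ingest(crm_records: list, helpdesk_records: list) -> dict:
    """Collect, transform, and aggregate records, keyed by normalized email."""
    contacts = [from_crm(r) for r in crm_records]
    contacts += [from_helpdesk(r) for r in helpdesk_records]
    merged = {}
    for contact in contacts:
        merged.setdefault(contact.email, []).append(contact)
    return merged
```

Keying on a normalized email is only one possible join strategy; it is used here because it keeps the example short.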

In many applications, the true value of AI agents lies in their ability to respond with real-time or near-real-time accuracy. Integrations with webhooks and streaming APIs enable the agent to access live data updates, ensuring that its responses remain relevant and timely.

Consider a scenario where an AI-powered invoicing assistant is tasked with generating invoices based on software usage. If the agent relies on a delayed data sync, it might fail to account for a client’s excess usage in the final moments before the invoice is generated. This oversight could result in inaccurate billing, financial discrepancies, and strained customer relationships.

Integrations are not merely a way to access data for AI agents; they are critical to enabling these agents to take meaningful actions on behalf of other applications. This capability is what transforms AI agents from passive data collectors into active participants in business processes.

Integrations play a crucial role in this process by connecting AI agents with different applications, enabling them to interact seamlessly and perform tasks on behalf of the user to trigger responses, updates, or actions in real time.

For instance, a customer service AI agent integrated with CRM platforms can automatically update customer records, initiate follow-up emails, and even generate reports based on the latest customer interactions. Similarly, if a popular product is running low, the AI agent for an e-commerce platform can automatically reorder from the supplier, update the website’s product page with new availability dates, and notify customers about upcoming restocks. Furthermore, a marketing AI agent integrated with CRM and marketing automation platforms (e.g., Mailchimp, ActiveCampaign) can automate email campaigns based on customer behaviors, such as opening specific emails, clicking on links, or making purchases.

Integrations allow AI agents to automate processes that span across different systems. For example, an AI agent integrated with a project management tool and a communication platform can automate task assignments based on project milestones, notify team members of updates, and adjust timelines based on real-time data from work management systems.

For developers driving these integrations, it’s essential to build robust APIs and use standardized protocols like OAuth for secure data access across each of the applications in use. They should also focus on real-time synchronization to ensure the AI agent acts on the most current data available. Proper error handling, logging, and monitoring mechanisms are critical to maintaining reliability and performance across integrations. Furthermore, as AI agents often interact with multiple platforms, developers should design integration solutions that can scale. This involves using scalable data storage solutions, optimizing data flow, and regularly testing integration performance under load.
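As one way to approach the error handling and logging mentioned above, here is a minimal retry helper with exponential backoff. It is a sketch under assumptions: `TransientError` is a hypothetical exception your own connector code would raise for retryable failures (timeouts, HTTP 429 or 503), and the attempt count and delays are arbitrary defaults.

```python
import logging
import time

logger = logging.getLogger("integration")


class TransientError(Exception):
    """Hypothetical error a connector raises for failures worth retrying."""


def call_with_retry(func, *, attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call func(), retrying transient failures with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except TransientError as exc:
            if attempt == attempts:
                logger.error("giving up after %d attempts: %s", attempt, exc)
                raise
            # Delay doubles on every failed attempt: 0.5s, 1s, 2s, ...
            delay = base_delay * 2 ** (attempt - 1)
            logger.warning("attempt %d failed (%s); retrying in %.1fs", attempt, exc, delay)
            sleep(delay)
```

The `sleep` parameter is injectable so the behavior can be tested without waiting; in production you would likely also add jitter and honor any retry hints the remote API returns.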

Retrieval-Augmented Generation (RAG) is a transformative approach that enhances the capabilities of AI agents by addressing a fundamental limitation of generative AI models: reliance on static, pre-trained knowledge. RAG fills this gap by providing a way for AI agents to efficiently access, interpret, and utilize information from a variety of data sources. Here’s how integrations help in building RAG pipelines for AI agents:

Traditional APIs are optimized for structured data (like databases, CRMs, and spreadsheets). However, many of the most valuable insights for AI agents come from unstructured data—documents (PDFs), emails, chats, meeting notes, Notion, and more. Unstructured data often contains detailed, nuanced information that is not easily captured in structured formats.

RAG enables AI agents to access and leverage this wealth of unstructured data by integrating it into their decision-making processes. By integrating with these unstructured data sources, AI agents:

RAG involves not only the retrieval of relevant data from these sources but also the generation of responses based on this data. It allows AI agents to pull in information from different platforms, consolidate it, and generate responses that are contextually relevant.

For instance, an HR AI agent might need to pull data from employee records, performance reviews, and onboarding documents to answer a question about benefits. RAG enables this agent to access the necessary context and background information from multiple sources, ensuring the response is accurate and comprehensive through a single retrieval mechanism.
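To make the retrieve-then-generate flow concrete, the sketch below ranks a handful of documents against a question and assembles a prompt from the best matches. It deliberately swaps in a simple bag-of-words cosine similarity where a real pipeline would typically use embeddings and a vector store, and it stops at building the prompt; the call to a language model is left out. The document shape (`source`, `text`) is an assumption for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, documents: list, k: int = 2) -> list:
    """Return the k documents most similar to the question."""
    query = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(query, Counter(tokenize(d["text"]))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, documents: list, k: int = 2) -> str:
    """Combine retrieved context and the question into one prompt string."""
    context = "\n".join(
        f'[{d["source"]}] {d["text"]}' for d in retrieve(question, documents, k)
    )
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
```

Tagging each snippet with its source keeps the generated answer traceable back to the system it came from.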

RAG empowers AI agents by providing real-time access to updated information from across various platforms with the help of webhooks. This is critical for applications like customer service, where responses must be based on the latest data.

For example, if a customer asks about their recent order status, the AI agent can access real-time shipping data from a logistics platform, order history from an e-commerce system, and promotional notes from a marketing database—enabling it to provide a response with the latest information. Without RAG, the agent might only be able to provide a generic answer based on static data, leading to inaccuracies and customer frustration.

While RAG presents immense opportunities to enhance AI capabilities, its implementation comes with a set of challenges. Addressing these challenges is crucial to building efficient, scalable, and reliable AI systems.

Integration of an AI-powered customer service agent with CRM systems, ticketing platforms, and other tools can help enhance contextual knowledge and take proactive actions, delivering a superior customer experience.

For instance, when a customer reaches out with a query—such as a delayed order—the AI agent retrieves their profile from the CRM, including past interactions, order history, and loyalty status, to gain a comprehensive understanding of their background. Simultaneously, it queries the ticketing system to identify any related past or ongoing issues and checks the order management system for real-time updates on the order status. Combining this data, the AI develops a holistic view of the situation and crafts a personalized response. It may empathize with the customer’s frustration, offer an estimated delivery timeline, provide goodwill gestures like loyalty points or discounts, and prioritize the order for expedited delivery.

The AI agent also performs critical backend tasks to maintain consistency across systems. It logs the interaction details in the CRM, updating the customer’s profile with notes on the resolution and any loyalty rewards granted. The ticketing system is updated with a resolution summary, relevant tags, and any necessary escalation details. Simultaneously, the order management system reflects the updated delivery status, and insights from the resolution are fed into the knowledge base to improve responses to similar queries in the future. Furthermore, the AI captures performance metrics, such as resolution times and sentiment analysis, which are pushed into analytics tools for tracking and reporting.

In retail, AI agents can integrate with inventory management systems, customer loyalty platforms, and marketing automation tools for enhancing customer experience and operational efficiency. For instance, when a customer purchases a product online, the AI agent quickly retrieves data from the inventory management system to check stock levels. It can then update the order status in real time, ensuring that the customer is informed about the availability and expected delivery date of the product. If the product is out of stock, the AI agent can suggest alternatives that are similar in features, quality, or price, or provide an estimated restocking date to prevent customer frustration and offer a solution that meets their needs.

Similarly, if a customer frequently purchases similar items, the AI might note this and suggest additional products or promotions related to these interests in future communications. By integrating with marketing automation tools, the AI agent can personalize marketing campaigns, sending targeted emails, SMS messages, or notifications with relevant offers, discounts, or recommendations based on the customer’s previous interactions and buying behaviors. The AI agent also writes back data to customer profiles within the CRM system. It logs details such as purchase history, preferences, and behavioral insights, allowing retailers to gain a deeper understanding of their customers’ shopping patterns and preferences.

Integrating AI agents and Retrieval-Augmented Generation (RAG) frameworks into existing systems is crucial for leveraging their full potential, but it introduces significant technical challenges that organizations must navigate. These challenges span data ingestion, system compatibility, and scalability, often requiring specialized technical solutions and ongoing management to ensure successful implementation.

Adding integrations to AI agents involves providing these agents with the ability to seamlessly connect with external systems, APIs, or services, allowing them to access, exchange, and act on data. Here are the top ways to achieve the same:

Custom development involves creating tailored integrations from scratch to connect the AI agent with various external systems. This method requires in-depth knowledge of APIs, data models, and custom logic. The process involves developing specific integrations to meet unique business requirements, ensuring complete control over data flows, transformations, and error handling. This approach is suitable for complex use cases where pre-built solutions may not suffice.

Embedded iPaaS (Integration Platform as a Service) solutions offer pre-built integration platforms that include no-code or low-code tools. These platforms allow organizations to quickly and easily set up integrations between the AI agent and various external systems without needing deep technical expertise. The integration process is simplified by using a graphical interface to configure workflows and data mappings, reducing development time and resource requirements.

Unified API solutions provide a single API endpoint that connects to multiple SaaS products and external systems, simplifying the integration process. This method abstracts the complexity of dealing with multiple APIs by consolidating them into a unified interface. It allows the AI agent to access a wide range of services, such as CRM systems, marketing platforms, and data analytics tools, through a seamless and standardized integration process.
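The idea of one interface in front of many provider APIs can be illustrated with a small adapter pattern. This is a conceptual sketch only: the connector classes, payload shapes, and the `UnifiedCrm` facade are hypothetical and do not represent any vendor's or Knit's actual API.

```python
from typing import Protocol


class CrmConnector(Protocol):
    """What every provider-specific connector must offer."""

    def list_contacts(self) -> list: ...


class VendorAConnector:
    """Hypothetical provider whose records look like {"Id": ..., "Email": ...}."""

    def __init__(self, raw_records: list):
        self._raw = raw_records

    def list_contacts(self) -> list:
        return [{"id": str(r["Id"]), "email": r["Email"]} for r in self._raw]


class VendorBConnector:
    """Hypothetical provider that nests fields under "properties"."""

    def __init__(self, raw_records: list):
        self._raw = raw_records

    def list_contacts(self) -> list:
        return [
            {"id": str(r["vid"]), "email": r["properties"]["email"]}
            for r in self._raw
        ]


class UnifiedCrm:
    """Single entry point that hides provider differences from the agent."""

    def __init__(self, connectors: dict):
        self._connectors = connectors

    def list_contacts(self, provider: str) -> list:
        return self._connectors[provider].list_contacts()
```

The agent only ever calls `UnifiedCrm.list_contacts` and receives the same record shape, so adding a new provider means writing one more connector rather than changing agent logic.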

Knit offers a game-changing solution for organizations looking to integrate their AI agents with a wide variety of SaaS applications quickly and efficiently. By providing a seamless, AI-driven integration process, Knit empowers businesses to unlock the full potential of their AI agents by connecting them with the necessary tools and data sources.

By integrating with Knit, organizations can power their AI agents to interact seamlessly with a wide array of applications. This capability not only enhances productivity and operational efficiency but also allows for the creation of innovative use cases that would be difficult to achieve with manual integration processes. Knit thus transforms how businesses utilize AI agents, making it easier to harness the full power of their data across multiple platforms.

Ready to see how Knit can transform your AI agents? Contact us today for a personalized demo!


In today’s fast-paced digital landscape, organizations across all industries are leveraging Calendar APIs to streamline scheduling, automate workflows, and optimize resource management. While standalone calendar applications have always been essential, Calendar Integration significantly amplifies their value—making it possible to synchronize events, reminders, and tasks across multiple platforms seamlessly. Whether you’re a SaaS provider integrating a customer’s calendar or an enterprise automating internal processes, a robust API Calendar strategy can drastically enhance efficiency and user satisfaction.

Explore more Calendar API integrations

In this comprehensive guide, we’ll discuss the benefits of Calendar API integration, best practices for developers, real-world use cases, and tips for managing common challenges like time zone discrepancies and data normalization. By the end, you’ll have a clear roadmap on how to build and maintain effective Calendar APIs for your organization or product offering in 2025.

In 2025, calendars have evolved beyond simple day-planners to become strategic tools that connect individuals, teams, and entire organizations. The real power comes from Calendar Integration, or the ability to synchronize these planning tools with other critical systems—CRM software, HRIS platforms, applicant tracking systems (ATS), eSignature solutions, and more.

Essentially, Calendar API integration becomes indispensable for any software looking to reduce operational overhead, improve user satisfaction, and scale globally.

One of the most notable advantages of Calendar Integration is automated scheduling. Instead of manually entering data into multiple calendars, an API can do it for you. For instance, an event management platform integrating with Google Calendar or Microsoft Outlook can immediately update participants’ schedules once an event is booked. This eliminates the need for separate email confirmations and reduces human error.

When a user can book or reschedule an appointment without back-and-forth emails, you’ve substantially upgraded their experience. For example, healthcare providers that leverage Calendar APIs can let patients pick available slots and sync these appointments directly to both the patient’s and the doctor’s calendars. Changes on either side trigger instant notifications, drastically simplifying patient-doctor communication.

By aligning calendars with HR systems, CRM tools, and project management platforms, businesses can ensure every resource—personnel, rooms, or equipment—is allocated efficiently. Calendar-based resource mapping can reduce double-bookings and idle times, increasing productivity while minimizing conflicts.

Notifications are integral to preventing missed meetings and last-minute confusion. Whether you run a field service company, a professional consulting firm, or a sales organization, instant schedule updates via Calendar APIs keep everyone on the same page—literally.

API Calendar solutions enable triggers and actions across diverse systems. For instance, when a sales lead in your CRM hits “hot” status, the system can automatically schedule a follow-up call, add it to the rep’s calendar, and send a reminder 15 minutes before the meeting. Such automation fosters a frictionless user experience and supports consistent follow-ups.
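The trigger-and-action flow in that example might look roughly like the handler below. It is a sketch with assumed names: the lead dictionary fields (`status`, `name`, `email`, `owner_email`) and the returned event shape are hypothetical, and actually writing the event to a calendar provider is left out.

```python
from datetime import datetime, timedelta, timezone

REMINDER_LEAD = timedelta(minutes=15)


def on_lead_status_change(lead: dict, call_start: datetime, duration_minutes: int = 30):
    """Build a follow-up call event when a lead turns hot, otherwise do nothing."""
    if lead["status"] != "hot":
        return None
    return {
        "title": f"Follow-up call: {lead['name']}",
        "attendees": [lead["owner_email"], lead["email"]],
        "start": call_start,
        "end": call_start + timedelta(minutes=duration_minutes),
        # Reminder fires 15 minutes before the meeting starts.
        "reminder_at": call_start - REMINDER_LEAD,
    }


# Example usage with a timezone-aware start time.
event = on_lead_status_change(
    {"status": "hot", "name": "Acme Corp", "email": "buyer@example.com",
     "owner_email": "rep@example.com"},
    datetime(2025, 3, 4, 15, 0, tzinfo=timezone.utc),
)
```

Using timezone-aware datetimes from the start avoids ambiguity when the rep and the lead are in different time zones.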

<a name="calendar-api-data-models-explained"></a>

To integrate calendar functionalities successfully, a solid grasp of the underlying data structures is crucial. While each calendar provider may have specific fields, the broad data model often consists of the following objects:

Properly mapping these objects during Calendar Integration ensures consistent data handling across multiple systems. Handling each element correctly—particularly with recurring events—lays the foundation for a smooth user experience.

Below are several well-known Calendar APIs that dominate the market. Each has unique features, so choose based on your users’ needs:

Applicant Tracking Systems (ATS) like Lever or Greenhouse can integrate with Google Calendar or Outlook to automate interview scheduling. Once a candidate is selected for an interview, the ATS checks availability for both the interviewer and candidate, auto-generates an event, and sends reminders. This reduces manual coordination, preventing double-bookings and ensuring a smooth interview process.
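The availability check at the heart of this flow can be sketched as a search for the first gap that is free on both calendars. The function below assumes you have already fetched each person's busy intervals as `(start, end)` datetime pairs from their calendar provider; the function name and input shape are illustrative, not part of any ATS or calendar API.

```python
from datetime import datetime, timedelta


def first_common_slot(busy_a, busy_b, window_start, window_end, duration):
    """Return the earliest (start, end) free for both people, or None."""
    cursor = window_start
    # Merge both calendars and walk the busy intervals in start order.
    for start, end in sorted(busy_a + busy_b):
        # Is the gap before this busy interval long enough (and inside the window)?
        if min(start, window_end) - cursor >= duration:
            return cursor, cursor + duration
        cursor = max(cursor, end)
    # Check the remaining time after the last busy interval.
    if window_end - cursor >= duration:
        return cursor, cursor + duration
    return None


# Example: interviewer and candidate on the same day, 09:00 to 17:00.
day = datetime(2025, 3, 4)
interviewer_busy = [(day.replace(hour=9), day.replace(hour=10)),
                    (day.replace(hour=11), day.replace(hour=12))]
candidate_busy = [(day.replace(hour=10), day.replace(hour=10, minute=30))]
slot = first_common_slot(interviewer_busy, candidate_busy,
                         day.replace(hour=9), day.replace(hour=17),
                         timedelta(minutes=45))
```

In this example the only gap before noon is 30 minutes long, so a 45-minute interview lands at 12:00.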

Learn more on How Interview Scheduling Companies Can Scale ATS Integrations Faster

ERPs like SAP or Oracle NetSuite handle complex scheduling needs for workforce or equipment management. By integrating with each user’s calendar, the ERP can dynamically allocate resources based on real-time availability and location, significantly reducing conflicts and idle times.

Salesforce and HubSpot CRMs can automatically book demos and follow-up calls. Once a customer selects a time slot, the CRM updates the rep’s calendar, triggers reminders, and logs the meeting details—keeping the sales cycle organized and on track.

Systems like Workday and BambooHR use Calendar APIs to automate onboarding schedules—adding orientation, training sessions, and check-ins to a new hire’s calendar. Managers can see progress in real-time, ensuring a structured, transparent onboarding experience.

Assessment tools like HackerRank or Codility integrate with Calendar APIs to plan coding tests. Once a test is scheduled, both candidates and recruiters receive real-time updates. After completion, debrief meetings are auto-booked based on availability.

DocuSign or Adobe Sign can create calendar reminders for upcoming document deadlines. If multiple signatures are required, it schedules follow-up reminders, ensuring legal or financial processes move along without hiccups.

QuickBooks or Xero integrations place invoice due dates and tax deadlines directly onto the user’s calendar, complete with reminders. Users avoid late penalties and maintain financial compliance with minimal manual effort.

While Calendar Integration can transform workflows, it’s not without its hurdles. Here are the most prevalent obstacles:

Businesses can integrate Calendar APIs either by building direct connectors for each calendar platform or opting for a Unified Calendar API provider that consolidates all integrations behind a single endpoint. Here’s how they compare:

Learn more about what should you look for in a Unified API Platform

The calendar landscape is only getting more complex as businesses and end users embrace an ever-growing range of tools and platforms. Implementing an effective Calendar API strategy—whether through direct connectors or a unified platform—can yield substantial operational efficiencies, improved user satisfaction, and a significant competitive edge. From Calendar APIs that power real-time notifications to AI-driven features predicting best meeting times, the potential for innovation is limitless.

If you’re looking to add API Calendar capabilities to your product or optimize an existing integration, now is the time to take action. Start by assessing your users’ needs, identifying top calendar providers they rely on, and determining whether a unified or direct connector strategy makes the most sense. Incorporate the best practices highlighted in this guide—like leveraging webhooks, managing data normalization, and handling rate limits—and you’ll be well on your way to delivering a next-level calendar experience.

Ready to transform your Calendar Integration journey?

Book a Demo with Knit to See How AI-Driven Unified APIs Simplify Integrations

By following the strategies in this comprehensive guide, you’ll not only harness the power of Calendar APIs but also future-proof your software or enterprise operations for the decade ahead. Whether you’re automating interviews, scheduling field services, or synchronizing resources across continents, Calendar Integration is the key to eliminating complexity and turning time management into a strategic asset.


This guide is part of our growing collection on HRIS integrations. We’re continuously exploring new apps and updating our HRIS Guides Directory with fresh insights.

Workday has become one of the most trusted platforms for enterprise HR, payroll, and financial management. It’s the system of record for employee data in thousands of organizations. But as powerful as Workday is, most businesses don’t run only on Workday. They also use performance management tools, applicant tracking systems, payroll software, CRMs, SaaS platforms, and more.

The challenge? Making all these systems talk to each other.

That’s where the Workday API comes in. By integrating with Workday’s APIs, companies can automate processes, reduce manual work, and ensure accurate, real-time data flows between systems.

In this blog, we’ll give you everything you need, whether you’re a beginner just learning about APIs or a developer looking to build an enterprise-grade integration.

We’ll cover terminology, use cases, step-by-step setup, code examples, and FAQs. By the end, you’ll know how Workday API integration works and how to do it the right way.

Looking to quickstart with the Workday API Integration? Check our Workday API Directory for common Workday API endpoints

Workday integrations can support both internal workflows for your HR and finance teams, as well as customer-facing use cases that make SaaS products more valuable. Let’s break down some of the most impactful examples.

Performance reviews are key to fair salary adjustments, promotions, and bonus payouts. Many organizations use tools like Lattice to manage reviews and feedback, but without accurate employee data, the process can become messy.

By integrating Lattice with Workday, job titles and salaries stay synced and up to date. HR teams can run performance cycles with confidence, and once reviews are done, compensation changes flow back into Workday automatically — keeping both systems aligned and reducing manual work.

Onboarding new employees is often a race against time, from getting payroll details set up to preparing IT access. With Workday, you can automate this process.

For example, by integrating an ATS like Greenhouse with Workday:

For SaaS companies, onboarding users efficiently is key to customer satisfaction. Workday integrations make this scalable.

Take BILL, a financial operations platform, as an example:

Offboarding is just as important as onboarding, especially for maintaining security. If a terminated employee retains access to systems, it creates serious risks.

Platforms like Ramp, a spend management solution, solve this through Workday integrations:

While this guide equips developers with the skills to build robust Workday integrations through clear explanations and practical examples, the benefits extend beyond the development team. Workday API integration also lets you expand your HRIS integrations and automate tedious tasks like data entry, freeing up valuable time for other important work. Business leaders gain access to real-time insights across their entire organization, empowering them to make data-driven decisions that drive growth and profitability. In short, well-built integrations streamline HR workflows, surface real-time data for leaders, and help your organization get the most out of Workday.

Understanding key terms is essential for effective integration with Workday. Let’s look at a few that will be used frequently in the sections ahead:

1. API Types: Workday offers REST and SOAP APIs, which serve different purposes. REST APIs are commonly used for web-based integrations, while SOAP APIs are often utilized for complex transactions.

2. Endpoint Structure: You must familiarize yourself with the Workday API structure, as each endpoint corresponds to a specific function. A common Workday API example would be retrieving employee data or updating payroll information.

3. API Documentation: Workday API documentation provides a comprehensive overview of both REST and SOAP APIs.

Workday supports two primary ways to authenticate API calls. Which one you use depends on the API family you choose:

SOAP requests are authenticated with a special Workday user account (the ISU) using WS-Security headers. Access is controlled by the security group(s) and domain policies assigned to that ISU.
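As a rough illustration of what a WS-Security header looks like in practice, the sketch below builds a SOAP envelope carrying a UsernameToken for the ISU. The SOAP and WS-Security namespaces are the standard ones; everything Workday-specific here is an assumption for illustration, including the `ISU_demo@tenant` username format and the `Get_Workers_Request` body. Check your tenant's WSDL for the operations and versions it actually exposes.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"
WSSE_NS = ("http://docs.oasis-open.org/wss/2004/01/"
           "oasis-200401-wss-wssecurity-secext-1.0.xsd")
PASSWORD_TEXT = ("http://docs.oasis-open.org/wss/2004/01/"
                 "oasis-200401-wss-username-token-profile-1.0#PasswordText")


def build_envelope(isu_username: str, isu_password: str, body_xml: str) -> str:
    """Wrap a request body in a SOAP envelope with a WS-Security UsernameToken."""
    return (
        f'<soapenv:Envelope xmlns:soapenv="{SOAP_NS}">'
        '<soapenv:Header>'
        f'<wsse:Security xmlns:wsse="{WSSE_NS}" soapenv:mustUnderstand="1">'
        '<wsse:UsernameToken>'
        f'<wsse:Username>{escape(isu_username)}</wsse:Username>'
        f'<wsse:Password Type="{PASSWORD_TEXT}">{escape(isu_password)}</wsse:Password>'
        '</wsse:UsernameToken>'
        '</wsse:Security>'
        '</soapenv:Header>'
        f'<soapenv:Body>{body_xml}</soapenv:Body>'
        '</soapenv:Envelope>'
    )


# Assumed request body for illustration; credentials are placeholders.
envelope = build_envelope(
    "ISU_demo@tenant",
    "s3cret&",
    '<bsvc:Get_Workers_Request xmlns:bsvc="urn:com.workday/bsvc"/>',
)
ET.fromstring(envelope)  # raises ParseError if the envelope is not well-formed XML
```

The envelope would then be sent as an HTTP POST to your tenant's web services endpoint. Escaping the credentials matters because passwords often contain characters such as `&` that would otherwise break the XML.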

REST requests use OAuth 2.0. You register an API client in Workday, grant scopes (what the client is allowed to access), and obtain access tokens (and a refresh token) to call endpoints.
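The token exchange can be sketched with the standard OAuth 2.0 refresh token grant. The example below only builds the HTTP request so it can be inspected without network access. The token URL, client ID, client secret, and refresh token are placeholders, and sending client credentials via HTTP Basic authentication is an assumption based on the general OAuth 2.0 specification; use the token endpoint and settings shown for your registered API client in Workday.

```python
import base64
import urllib.parse
import urllib.request


def build_token_request(token_url: str, client_id: str, client_secret: str,
                        refresh_token: str) -> urllib.request.Request:
    """Build a POST request that exchanges a refresh token for an access token."""
    body = urllib.parse.urlencode({
        "grant_type": "refresh_token",
        "refresh_token": refresh_token,
    }).encode("ascii")
    # Client credentials go in an HTTP Basic Authorization header.
    basic = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    return urllib.request.Request(
        token_url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


# Placeholder values; nothing is sent until the request is opened.
request = build_token_request(
    "https://example.invalid/oauth2/token", "client", "secret", "my-refresh-token"
)
# To send it (not run here):
#   with urllib.request.urlopen(request) as resp:
#       access_token = json.load(resp)["access_token"]
```

The returned access token is then passed as a `Bearer` token in the `Authorization` header of each REST call until it expires, at which point the same exchange is repeated.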

To ensure a secure and reliable connection with Workday's APIs, this section outlines the essential prerequisites. These steps will lay the groundwork for a successful integration, enabling seamless data exchange and unlocking the full potential of Workday within your existing technological infrastructure.

Now that you have an overview of the steps required to build a Workday API integration and of the Workday API documentation, let's dive into each step so you can build your Workday integration confidently!

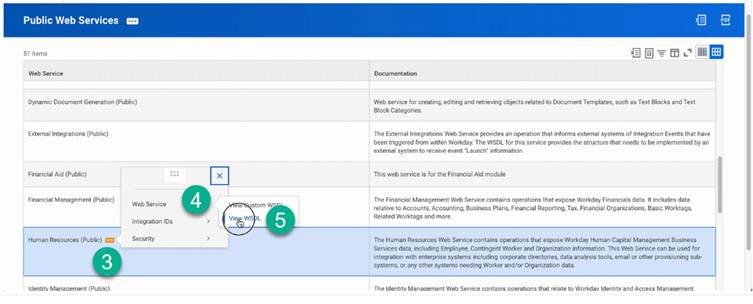

The Web Services Endpoint for the Workday tenant serves as the gateway for integrating external systems with Workday's APIs, enabling data exchange and communication between platforms. To access your specific Workday web services endpoint, follow these steps:

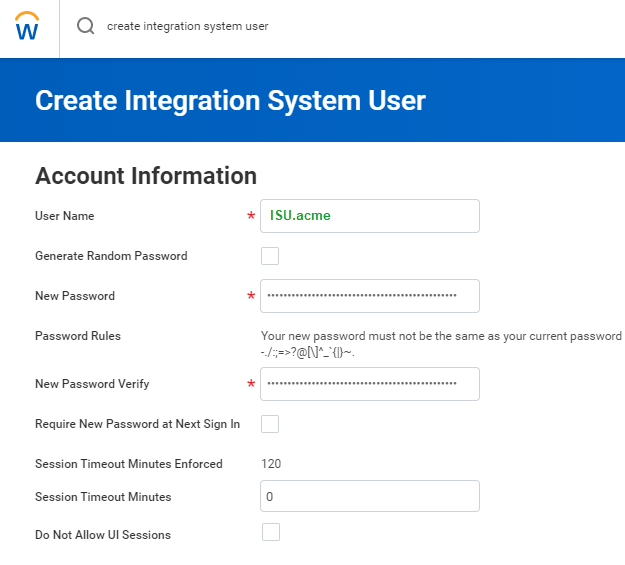

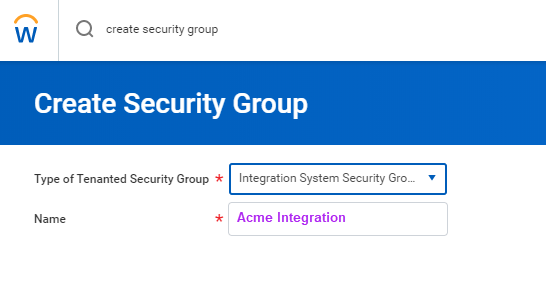

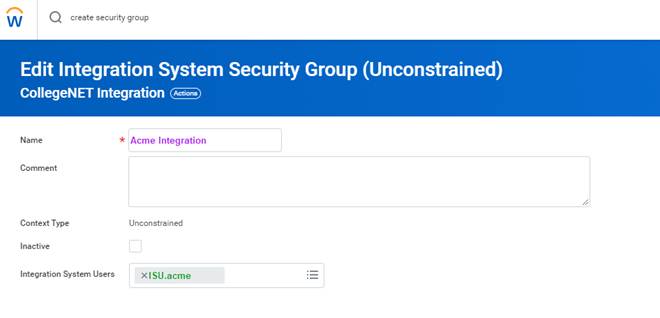

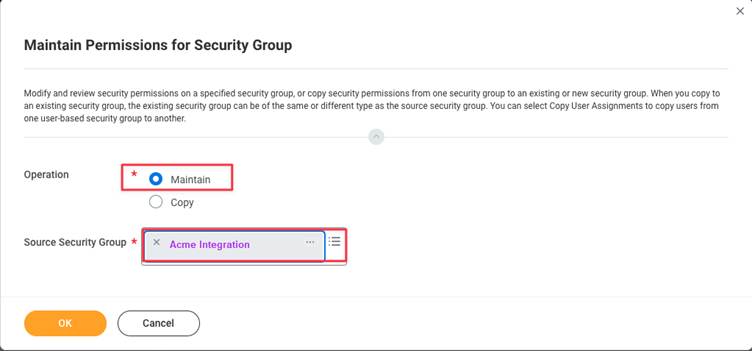

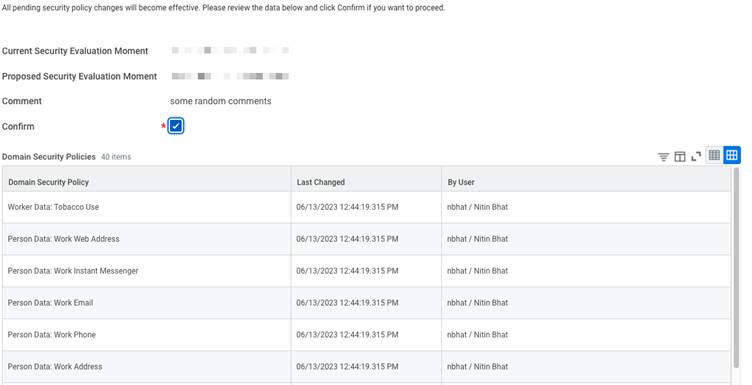

Next, you need to establish an Integration System User (ISU) in Workday, dedicated to managing API requests. This improves security and makes integration actions easier to track. Follow the steps below to set up an ISU in Workday:

Note: The permissions listed below are necessary for the full HRIS API. These permissions may vary depending on the specific implementation.

Parent Domains for HRIS

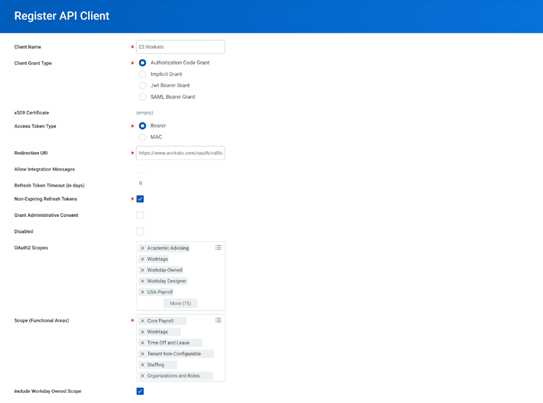

Workday offers different authentication methods. Here, we will focus on OAuth 2.0, a secure way for applications to gain access through an ISU (Integration System User). An ISU acts like a dedicated user account for your integration, eliminating the need to share individual user credentials. The steps below show how to obtain OAuth 2.0 tokens in Workday:

When building a Workday integration, one of the first decisions you’ll face is: Should I use SOAP or REST?

Both are supported by Workday, but they serve slightly different purposes. Let’s break it down.

SOAP (Simple Object Access Protocol) has been around for years and is still widely used in Workday, especially for sensitive data and complex transactions.

How to work with SOAP:

REST (Representational State Transfer) is the newer, lighter, and easier option for Workday integrations. It’s widely used in SaaS products and web apps.

Advantages of REST APIs

How to work with REST:

Now that you have picked between SOAP and REST, let's proceed to use the Workday HCM APIs effectively. We'll walk through creating a new employee and fetching a list of all employees, which are essential building blocks for your integration. Remember, if you are using SOAP, you will authenticate your requests with an ISU username and password, while if you are using REST, you will authenticate your requests with access tokens generated from the OAuth refresh token obtained in the steps above.

In this guide, we will focus on using SOAP to construct our API requests.

First, let's learn how to construct a SOAP request body.

SOAP requests follow a specific format and use XML to structure the data. Here's an example of a SOAP request body to fetch employees using the Get Workers endpoint:

<soapenv:Envelope

xmlns:soapenv="http://schemas.xmlsoap.org/soap/envelope/"

xmlns:bsvc="urn:com.workday/bsvc">

<soapenv:Header>

<wsse:Security xmlns:wsse="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-secext-1.0.xsd">

<wsse:UsernameToken>

<wsse:Username>{ISU USERNAME}</wsse:Username>

<wsse:Password>{ISU PASSWORD}</wsse:Password>

</wsse:UsernameToken>

</wsse:Security>

</soapenv:Header>

<soapenv:Body>

<bsvc:Get_Workers_Request xmlns:bsvc="urn:com.workday/bsvc" bsvc:version="v40.1">

</bsvc:Get_Workers_Request>

</soapenv:Body>

</soapenv:Envelope>

👉 How it works:
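If you assemble this envelope in code rather than by hand, a minimal Python sketch could look like the following. The function name and the paging parameters are illustrative assumptions; the element names mirror the Get Workers request shown in this guide, and the credentials are XML-escaped so special characters cannot break the document.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

# WS-Security namespace; the wsse prefix must be declared for the XML to parse.
WSSE_NS = (
    "http://docs.oasis-open.org/wss/2004/01/"
    "oasis-200401-wss-wssecurity-secext-1.0.xsd"
)

ENVELOPE_TEMPLATE = """<soapenv:Envelope
    xmlns:soapenv="http://schemas.xmlsoap.org/soap/envelope/"
    xmlns:bsvc="urn:com.workday/bsvc">
  <soapenv:Header>
    <wsse:Security xmlns:wsse="{wsse_ns}">
      <wsse:UsernameToken>
        <wsse:Username>{username}</wsse:Username>
        <wsse:Password>{password}</wsse:Password>
      </wsse:UsernameToken>
    </wsse:Security>
  </soapenv:Header>
  <soapenv:Body>
    <bsvc:Get_Workers_Request bsvc:version="{version}">
      <bsvc:Response_Filter>
        <bsvc:Page>{page}</bsvc:Page>
        <bsvc:Count>{count}</bsvc:Count>
      </bsvc:Response_Filter>
    </bsvc:Get_Workers_Request>
  </soapenv:Body>
</soapenv:Envelope>"""


def build_get_workers_envelope(username, password, version="v40.1", page=1, count=10):
    """Return a Get Workers SOAP envelope as a string (hypothetical helper)."""
    envelope = ENVELOPE_TEMPLATE.format(
        wsse_ns=WSSE_NS,
        username=escape(username),   # escape &, < and > in credentials
        password=escape(password),
        version=escape(version),
        page=int(page),
        count=int(count),
    )
    ET.fromstring(envelope)  # raises ParseError if the result is not well-formed
    return envelope
```

The returned string is what you would send as the HTTP POST body to your tenant's web service endpoint.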

Now that you know how to construct a SOAP request, let's look at a couple of real-life Workday integration use cases:

Let's add a new team member. For this we will use the Hire Employee API! It lets you send employee details like name, job title, and salary to Workday. Here's a breakdown:

curl --location 'https://wd2-impl-services1.workday.com/ccx/service/{TENANT}/Staffing/v42.0' \

--header 'Content-Type: application/xml' \

--data-raw '<soapenv:Envelope xmlns:bsvc="urn:com.workday/bsvc" xmlns:soapenv="http://schemas.xmlsoap.org/soap/envelope/">

<soapenv:Header>

<wsse:Security xmlns:wsse="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-secext-1.0.xsd">

<wsse:UsernameToken>

<wsse:Username>{ISU_USERNAME}</wsse:Username>

<wsse:Password>{ISU_PASSWORD}</wsse:Password>

</wsse:UsernameToken>

</wsse:Security>

<bsvc:Workday_Common_Header>

<bsvc:Include_Reference_Descriptors_In_Response>true</bsvc:Include_Reference_Descriptors_In_Response>

</bsvc:Workday_Common_Header>

</soapenv:Header>

<soapenv:Body>

<bsvc:Hire_Employee_Request bsvc:version="v42.0">

<bsvc:Business_Process_Parameters>

<bsvc:Auto_Complete>true</bsvc:Auto_Complete>

<bsvc:Run_Now>true</bsvc:Run_Now>

</bsvc:Business_Process_Parameters>

<bsvc:Hire_Employee_Data>

<bsvc:Applicant_Data>

<bsvc:Personal_Data>

<bsvc:Name_Data>

<bsvc:Legal_Name_Data>

<bsvc:Name_Detail_Data>

<bsvc:Country_Reference>

<bsvc:ID bsvc:type="ISO_3166-1_Alpha-3_Code">USA</bsvc:ID>

</bsvc:Country_Reference>

<bsvc:First_Name>Employee</bsvc:First_Name>

<bsvc:Last_Name>New</bsvc:Last_Name>

</bsvc:Name_Detail_Data>

</bsvc:Legal_Name_Data>

</bsvc:Name_Data>

<bsvc:Contact_Data>

<bsvc:Email_Address_Data bsvc:Delete="false" bsvc:Do_Not_Replace_All="true">

<bsvc:Email_Address>employee@work.com</bsvc:Email_Address>

<bsvc:Usage_Data bsvc:Public="true">

<bsvc:Type_Data bsvc:Primary="true">

<bsvc:Type_Reference>

<bsvc:ID bsvc:type="Communication_Usage_Type_ID">WORK</bsvc:ID>

</bsvc:Type_Reference>

</bsvc:Type_Data>

</bsvc:Usage_Data>

</bsvc:Email_Address_Data>

</bsvc:Contact_Data>

</bsvc:Personal_Data>

</bsvc:Applicant_Data>

<bsvc:Position_Reference>

<bsvc:ID bsvc:type="Position_ID">P-SDE</bsvc:ID>

</bsvc:Position_Reference>

<bsvc:Hire_Date>2024-04-27Z</bsvc:Hire_Date>

</bsvc:Hire_Employee_Data>

</bsvc:Hire_Employee_Request>

</soapenv:Body>

</soapenv:Envelope>'

Elaboration:

Response:

<bsvc:Hire_Employee_Event_Response

xmlns:bsvc="urn:com.workday/bsvc" bsvc:version="string">

<bsvc:Employee_Reference bsvc:Descriptor="string">

<bsvc:ID bsvc:type="ID">EMP123</bsvc:ID>

</bsvc:Employee_Reference>

</bsvc:Hire_Employee_Event_Response>

If everything goes well, you'll get a success message and the ID of the newly created employee!
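To pull that ID out of the response in code, one option is Python's standard XML parser. This is a sketch under the assumption that the response has the shape shown above; the helper name is hypothetical, and the sample string simply mirrors the example response.

```python
import xml.etree.ElementTree as ET

BSVC = "urn:com.workday/bsvc"
NS = {"bsvc": BSVC}

# Sample mirroring the response shown in this guide (values are illustrative).
SAMPLE_HIRE_RESPONSE = """<bsvc:Hire_Employee_Event_Response
    xmlns:bsvc="urn:com.workday/bsvc" bsvc:version="string">
  <bsvc:Employee_Reference bsvc:Descriptor="string">
    <bsvc:ID bsvc:type="ID">EMP123</bsvc:ID>
  </bsvc:Employee_Reference>
</bsvc:Hire_Employee_Event_Response>"""


def extract_employee_ids(xml_text):
    """Return {id_type: id_value} for every ID under Employee_Reference."""
    root = ET.fromstring(xml_text)
    ids = {}
    for id_el in root.iterfind(".//bsvc:Employee_Reference/bsvc:ID", NS):
        # The type attribute is namespace-qualified, so look it up by full name.
        ids[id_el.get(f"{{{BSVC}}}type")] = id_el.text
    return ids
```

Calling `extract_employee_ids(SAMPLE_HIRE_RESPONSE)` returns a dictionary keyed by ID type, so the same helper keeps working if the response carries more than one ID for the new employee.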

Now, if you want to grab a list of all your existing employees, the Get Workers API is your friend!

Below is a Workday API Get Workers example:

curl --location 'https://wd2-impl-services1.workday.com/ccx/service/{TENANT}/Human_Resources/v40.1' \

--header 'Content-Type: application/xml' \

--data '<soapenv:Envelope

xmlns:soapenv="http://schemas.xmlsoap.org/soap/envelope/"

xmlns:bsvc="urn:com.workday/bsvc">

<soapenv:Header>

<wsse:Security xmlns:wsse="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-secext-1.0.xsd">

<wsse:UsernameToken>

<wsse:Username>{ISU_USERNAME}</wsse:Username>

<wsse:Password>{ISU_PASSWORD}</wsse:Password>

</wsse:UsernameToken>

</wsse:Security>

</soapenv:Header>

<soapenv:Body>

<bsvc:Get_Workers_Request xmlns:bsvc="urn:com.workday/bsvc" bsvc:version="v40.1">

<bsvc:Response_Filter>

<bsvc:Count>10</bsvc:Count>

<bsvc:Page>1</bsvc:Page>

</bsvc:Response_Filter>

<bsvc:Response_Group>

<bsvc:Include_Reference>true</bsvc:Include_Reference>

<bsvc:Include_Personal_Information>true</bsvc:Include_Personal_Information>

</bsvc:Response_Group>

</bsvc:Get_Workers_Request>

</soapenv:Body>

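</soapenv:Envelope>'

This is a SOAP request sent as an HTTP POST to the Human Resources web service; the Get_Workers_Request element in the body selects the Get Workers operation.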

Elaboration:

Response:

<?xml version='1.0' encoding='UTF-8'?>

<env:Envelope xmlns:env="http://schemas.xmlsoap.org/soap/envelope/">

<env:Body>

<wd:Get_Workers_Response xmlns:wd="urn:com.workday/bsvc" wd:version="v40.1">

<wd:Response_Filter>

<wd:Page>1</wd:Page>

<wd:Count>1</wd:Count>

</wd:Response_Filter>

<wd:Response_Data>

<wd:Worker>

<wd:Worker_Data>

<wd:Worker_ID>21001</wd:Worker_ID>

<wd:User_ID>lmcneil</wd:User_ID>

<wd:Personal_Data>

<wd:Name_Data>

<wd:Legal_Name_Data>

<wd:Name_Detail_Data wd:Formatted_Name="Logan McNeil" wd:Reporting_Name="McNeil, Logan">

<wd:Country_Reference>

<wd:ID wd:type="WID">bc33aa3152ec42d4995f4791a106ed09</wd:ID>

<wd:ID wd:type="ISO_3166-1_Alpha-2_Code">US</wd:ID>

<wd:ID wd:type="ISO_3166-1_Alpha-3_Code">USA</wd:ID>

<wd:ID wd:type="ISO_3166-1_Numeric-3_Code">840</wd:ID>

</wd:Country_Reference>

<wd:First_Name>Logan</wd:First_Name>

<wd:Last_Name>McNeil</wd:Last_Name>

</wd:Name_Detail_Data>

</wd:Legal_Name_Data>

</wd:Name_Data>

<wd:Contact_Data>

<wd:Address_Data wd:Effective_Date="2008-03-25" wd:Address_Format_Type="Basic" wd:Formatted_Address="42 Laurel Street&#xa;San Francisco, CA 94118&#xa;United States of America" wd:Defaulted_Business_Site_Address="0">

</wd:Address_Data>

<wd:Phone_Data wd:Area_Code="415" wd:Phone_Number_Without_Area_Code="441-7842" wd:E164_Formatted_Phone="+14154417842" wd:Workday_Traditional_Formatted_Phone="+1 (415) 441-7842" wd:National_Formatted_Phone="(415) 441-7842" wd:International_Formatted_Phone="+1 415-441-7842" wd:Tenant_Formatted_Phone="+1 (415) 441-7842">

</wd:Phone_Data>

</wd:Contact_Data>

</wd:Personal_Data>

</wd:Worker_Data>

</wd:Worker>

</wd:Response_Data>

</wd:Get_Workers_Response>

</env:Body>

</env:Envelope>

This XML response gives you the details of your employees, such as name, address, and phone number.
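A response like this can be flattened into simple records with the standard library. The sketch below assumes the element and attribute names shown in the sample response; the helper name and the trimmed sample are illustrative, and real responses contain many more fields.

```python
import xml.etree.ElementTree as ET

WD = "urn:com.workday/bsvc"
NS = {"wd": WD}

# Trimmed, well-formed sample based on the response shown in this guide.
SAMPLE_WORKERS_RESPONSE = """<env:Envelope xmlns:env="http://schemas.xmlsoap.org/soap/envelope/">
  <env:Body>
    <wd:Get_Workers_Response xmlns:wd="urn:com.workday/bsvc" wd:version="v40.1">
      <wd:Response_Data>
        <wd:Worker>
          <wd:Worker_Data>
            <wd:Worker_ID>21001</wd:Worker_ID>
            <wd:Personal_Data>
              <wd:Name_Data>
                <wd:Legal_Name_Data>
                  <wd:Name_Detail_Data wd:Formatted_Name="Logan McNeil">
                    <wd:First_Name>Logan</wd:First_Name>
                    <wd:Last_Name>McNeil</wd:Last_Name>
                  </wd:Name_Detail_Data>
                </wd:Legal_Name_Data>
              </wd:Name_Data>
              <wd:Contact_Data>
                <wd:Phone_Data wd:E164_Formatted_Phone="+14154417842"/>
              </wd:Contact_Data>
            </wd:Personal_Data>
          </wd:Worker_Data>
        </wd:Worker>
      </wd:Response_Data>
    </wd:Get_Workers_Response>
  </env:Body>
</env:Envelope>"""


def parse_workers(xml_text):
    """Return one dict per Worker with ID, legal name and E.164 phone."""
    root = ET.fromstring(xml_text)
    workers = []
    for data in root.iterfind(".//wd:Worker/wd:Worker_Data", NS):
        name = data.find(".//wd:Legal_Name_Data/wd:Name_Detail_Data", NS)
        phone = data.find(".//wd:Phone_Data", NS)
        workers.append({
            "worker_id": data.findtext("wd:Worker_ID", namespaces=NS),
            "first_name": name.findtext("wd:First_Name", namespaces=NS) if name is not None else None,
            "last_name": name.findtext("wd:Last_Name", namespaces=NS) if name is not None else None,
            # Attributes in the sample are namespace-qualified as well.
            "phone": phone.get(f"{{{WD}}}E164_Formatted_Phone") if phone is not None else None,
        })
    return workers
```

Missing elements come back as `None` rather than raising, which is usually more convenient when workers have incomplete contact data.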

Use a tool like Postman or curl to POST this XML to your Workday endpoint.

If you used REST instead, the same “Get Workers” request would look much simpler:

curl --location 'https://{host}.workday.com/ccx/api/v1/{tenant}/workers' \

--header 'Authorization: Bearer {ACCESS_TOKEN}'

Before moving your integration to production, it’s always safer to test everything in a sandbox environment. A sandbox is like a practice environment; it contains test data and behaves like production but without the risk of breaking live systems.

Here’s how to use a sandbox effectively:

Ask your Workday admin to provide you with a sandbox environment. Specify the type of sandbox you need (development, test, or preview). If you are a Knit customer on the Scale or Enterprise plan, Knit will provide you access to a Workday sandbox for integration testing.

Log in to your sandbox and configure it so it looks like your production environment. Add sample company data, roles, and permissions that match your real setup.

Just like in production, create a dedicated ISU account in the sandbox. Assign it the necessary permissions to access the required APIs.

Register your application inside the sandbox to get client credentials (Client ID & Secret). These credentials will be used for secure API calls in your test environment.

Use tools like Postman or cURL to send test requests to the sandbox. Test different scenarios (e.g., creating a worker, fetching employees, updating job info). Identify and fix errors before deploying to production.

Use Workday’s built-in logs to track API requests and responses. Look for failures, permission issues, or incorrect payloads. Fix issues in your code or configuration until everything runs smoothly.

Once your integration has been thoroughly tested in the sandbox and you’re confident that everything works smoothly, the next step is moving it to the production environment. To do this, you need to replace all sandbox details with production values. This means updating the URLs to point to your production Workday tenant and switching the ISU (Integration System User) credentials to the ones created for production use.
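One way to make that switch less error-prone is to keep every environment-specific value outside the code. The sketch below is a minimal example, assuming hypothetical environment variable names (`WORKDAY_HOST` and so on) and the SOAP URL pattern used in the curl examples earlier in this guide.

```python
import os
from dataclasses import dataclass

# Hypothetical variable names; pick whatever fits your deployment tooling.
REQUIRED_VARS = (
    "WORKDAY_HOST",
    "WORKDAY_TENANT",
    "WORKDAY_ISU_USERNAME",
    "WORKDAY_ISU_PASSWORD",
)


@dataclass(frozen=True)
class WorkdayConfig:
    host: str
    tenant: str
    isu_username: str
    isu_password: str

    def soap_url(self, service, version):
        """Build a web service URL following the pattern in the curl examples."""
        return f"https://{self.host}/ccx/service/{self.tenant}/{service}/{version}"


def load_config(env=None):
    """Load settings from the environment, failing fast if any are missing."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing Workday settings: " + ", ".join(missing))
    return WorkdayConfig(
        host=env["WORKDAY_HOST"],
        tenant=env["WORKDAY_TENANT"],
        isu_username=env["WORKDAY_ISU_USERNAME"],
        isu_password=env["WORKDAY_ISU_PASSWORD"],
    )
```

With this layout, promoting the integration means changing the deployed variables rather than editing code, and a missing production credential is reported at startup instead of at the first API call.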

When your integration is live, it’s important to make sure you can track and troubleshoot it easily. Setting up detailed logging will help you capture every API request and response, making it much simpler to identify and fix issues when they occur. Alongside logging, monitoring plays a key role. By keeping track of performance metrics such as response times and error rates, you can ensure the integration continues to run smoothly and catch problems before they affect your workflows.
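As a simple starting point, logging and basic metrics can be added with a thin wrapper around whatever function sends the request. The names below (`call_with_logging`, the `metrics` counter, the logger name) are hypothetical; a production setup would likely forward these numbers to a dedicated monitoring system.

```python
import logging
import time
from collections import Counter

logger = logging.getLogger("workday.integration")
metrics = Counter()  # in-memory call and error counts per operation


def call_with_logging(operation, send, *args, **kwargs):
    """Run send(*args, **kwargs), recording duration, outcome and counts.

    Avoid logging request bodies verbatim: SOAP envelopes contain the
    ISU password in the WS-Security header.
    """
    started = time.perf_counter()
    metrics[f"{operation}.calls"] += 1
    try:
        result = send(*args, **kwargs)
    except Exception:
        metrics[f"{operation}.errors"] += 1
        elapsed_ms = (time.perf_counter() - started) * 1000
        logger.exception(
            "workday call failed op=%s elapsed_ms=%.1f", operation, elapsed_ms
        )
        raise
    elapsed_ms = (time.perf_counter() - started) * 1000
    logger.info("workday call ok op=%s elapsed_ms=%.1f", operation, elapsed_ms)
    return result
```

Dividing the `.errors` count by the `.calls` count for an operation gives a basic error rate, and the logged `elapsed_ms` values give response times to alert on.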

If you’re using Knit, you also get the advantage of built-in observability dashboards. These dashboards give you real-time visibility into your live integration, making debugging and ongoing maintenance far easier. With the right preparation and monitoring in place, moving from sandbox to production becomes a smooth and reliable process.

PECI (Payroll Effective Change Interface) lets you transmit employee data changes (like new hires, raises, or terminations) directly to your payroll provider, cutting down on manual work and errors. Below is a brief comparison of PECI and Web Services, followed by the steps required to set up PECI in Workday.

Feature: PECI

Feature: Web Services

PECI setup steps: